February 02, 2023

Posted by Scott Main, Technical Writer, and the Coral team

In just a few years, ML models for mobile and embedded systems have come a very long way. With TensorFlow Lite (TFLite), you can now run sophisticated models that perform pose estimation and object segmentation, but these models still require a relatively powerful processor and a high-level OS in a mobile device or small computer like a Raspberry Pi. Alternatively, you can use TensorFlow Lite Micro (TFLM) on low-power microcontrollers (MCUs) to run simple models for tasks such as image and audio classification. However, the models for MCUs are much smaller, so they have limited capabilities and accuracy.

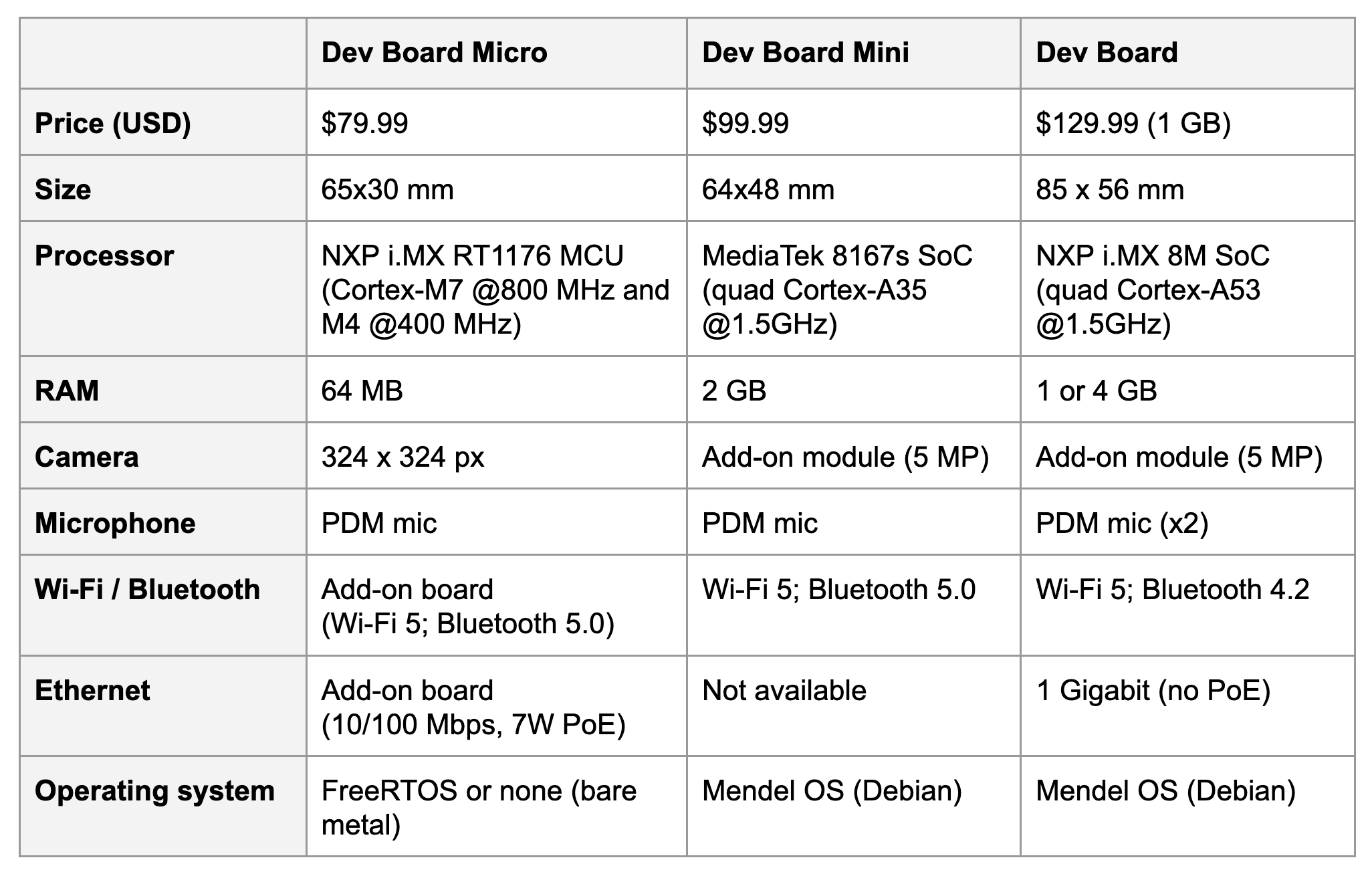

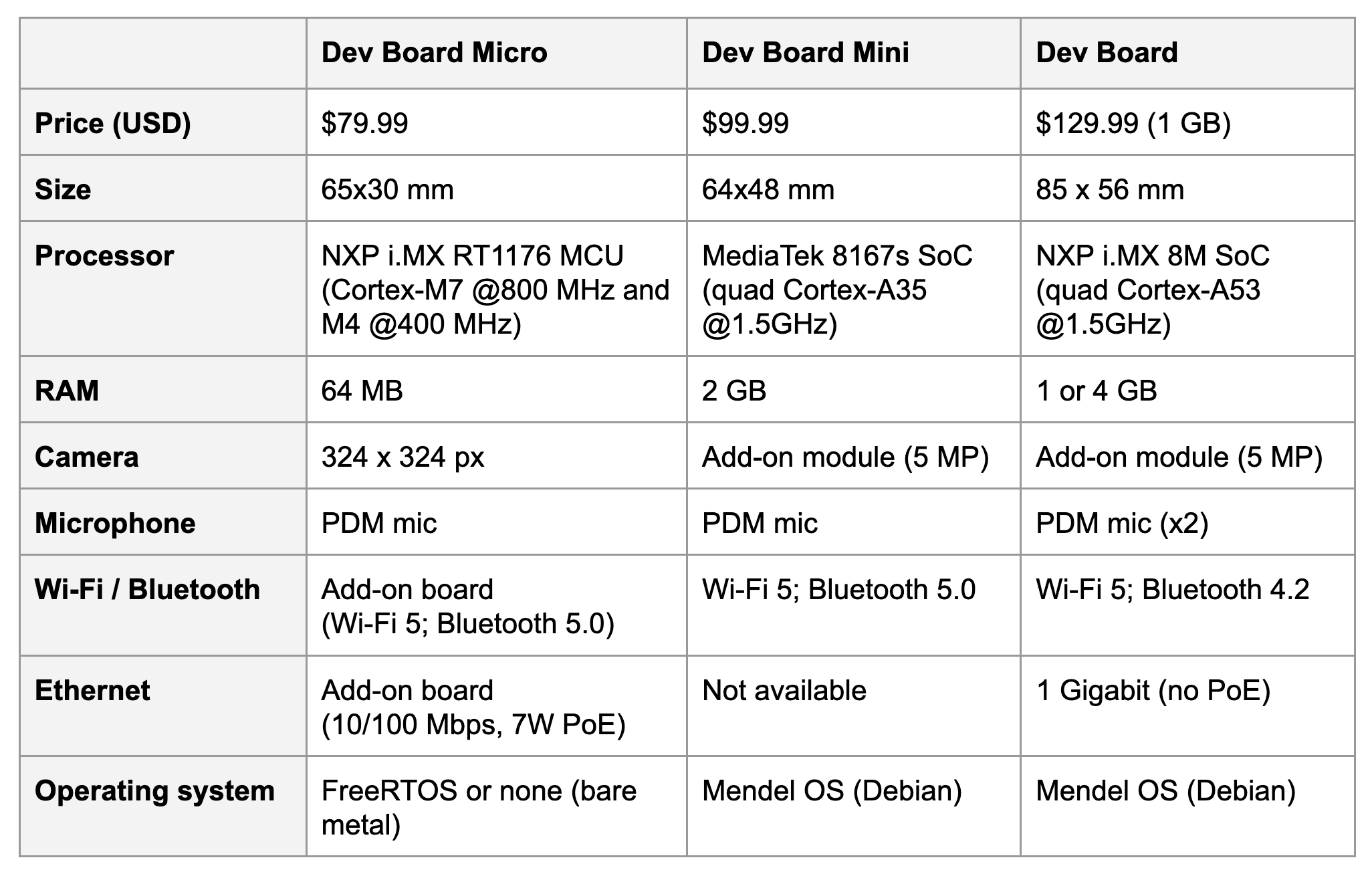

So there's a trade-off when you must choose between TFLM (low power but limited model performance) and regular TFLite (great model performance but higher power cost). Wouldn't it be nice if you could get both on one board? Well, we're happy to announce that the Coral Dev Board Micro is now available to provide exactly that.

The Dev Board Micro is a microcontroller board (with a dual-core Cortex-M7 and Cortex-M4), so it's small and power efficient, but it also includes the Coral Edge TPU™ on board, so it offers outstanding inferencing speeds for larger TFLite models. Plus, it has an on-board camera (324x324 pixels) and microphone. There are also plenty of GPIO pins and high-density connectors for add-on boards (such as our own Wireless Add-on and PoE Add-on).

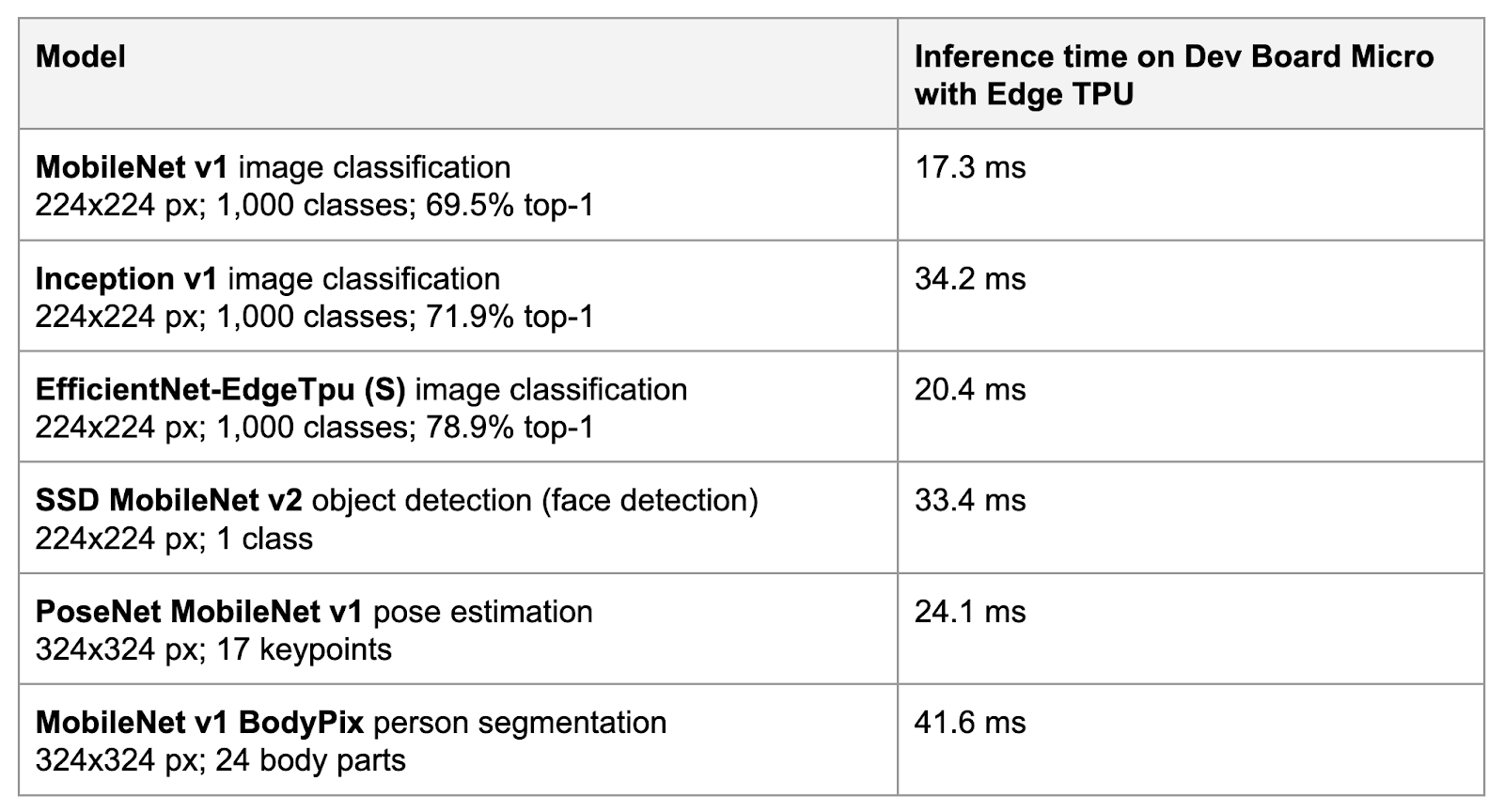

The Dev Board Micro executes your models using TFLM, which supports only a subset of the operations in TFLite. Even if TFLM supported all the same ops, the MCU alone would be much too slow for practical applications that use complex models, such as those for object detection and pose estimation. However, when you compile a TFLite model for the Edge TPU, all the MCU needs to do is set the model's input, delegate the model ops to the Edge TPU, and then read the output.

We built a new platform for the Dev Board Micro based on FreeRTOS and included compatibility with the Arduino programming language. So you can build a C++ app with CMake and flash it to the board with our command line tools, or you can write and upload an Arduino sketch with the Arduino IDE. We call this new platform coralmicro and it's fully open sourced on GitHub.

If you choose to code with FreeRTOS, coralmicro includes all the core FreeRTOS APIs you need to build multi-tasking apps on the MCU, plus custom coralmicro APIs for interacting with GPIOs, capturing photos, listening to audio, performing multi-core processing, and much more.

Because coralmicro uses TensorFlow Lite for Microcontrollers for inferencing, running a TensorFlow Lite model on the Dev Board Micro works almost exactly as you'd expect if you've used TensorFlow Lite on other platforms. One difference with TFLM, compared to TFLite, is that you must specify the ops your model uses by adding them to the `MicroMutableOpResolver`. For example, if your model uses 2D convolution, you need to call `AddConv2D()`. This conserves memory because only the op kernels your model actually needs are compiled into the app for the MCU.

If your model is compiled to run on the Edge TPU, you also need to add the Edge TPU custom op, which accounts for all the ops that run on the Edge TPU. For example, when using SSD MobileNet for object detection on the Edge TPU, only the dequantize and post-processing ops run on the MCU, and the rest are delegated to the Edge TPU custom op, so the code to set up the `MicroInterpreter` looks like this:

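```cpp
// Turn on the Edge TPU and get a context for it.
auto tpu_context = coralmicro::EdgeTpuManager::GetSingleton()->OpenDevice();
if (!tpu_context) {
  printf("ERROR: Failed to get EdgeTpu context\r\n");
  vTaskSuspend(nullptr);  // Suspend the current task; nothing more to do.
}

tflite::MicroErrorReporter error_reporter;

// Register only the ops that run on the MCU, plus the Edge TPU custom op.
// The template argument (3) is the maximum number of ops the resolver holds.
tflite::MicroMutableOpResolver<3> resolver;
resolver.AddDequantize();
resolver.AddDetectionPostprocess();
resolver.AddCustom(coralmicro::kCustomOp, coralmicro::RegisterCustomOp());

// model, tensor_arena, and kTensorArenaSize are defined elsewhere in the app.
tflite::MicroInterpreter interpreter(tflite::GetModel(model.data()), resolver,
                                     tensor_arena, kTensorArenaSize,
                                     &error_reporter);
```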

Notice that you also need to turn on the Edge TPU with `OpenDevice()`. Other than that and `AddCustom()`, the code to run an inference on the Dev Board Micro is pretty standard TFLM code. For more details, see our API reference for TFLM, and check out our code examples for FreeRTOS.
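To make the `<3>` in `MicroMutableOpResolver<3>` a little more concrete, here is a minimal, self-contained sketch of a fixed-capacity op table. It is not the TFLM implementation: `TinyOpResolver`, `Add()`, `Has()`, and `FourthOpFits()` are hypothetical names invented for illustration, and the op name strings are only placeholders. The sketch assumes just one idea from the real resolver, which is that capacity is fixed at compile time, so registering more ops than you declared fails instead of growing the table.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical stand-in for a fixed-capacity op resolver. This is NOT the
// TFLM class; it only mimics the idea that the capacity is a compile-time
// template parameter, so the table needs no heap allocation.
template <std::size_t kMaxOps>
class TinyOpResolver {
 public:
  // Registers an op name. Returns false when the table is already full.
  bool Add(const char* name) {
    if (count_ >= kMaxOps) return false;
    names_[count_++] = name;
    return true;
  }

  // Returns true if an op with this name was registered.
  bool Has(const char* name) const {
    for (std::size_t i = 0; i < count_; ++i) {
      if (std::strcmp(names_[i], name) == 0) return true;
    }
    return false;
  }

  std::size_t size() const { return count_; }

 private:
  std::array<const char*, kMaxOps> names_{};
  std::size_t count_ = 0;
};

// Registers three ops (placeholder names), then tries to add a fourth.
inline bool FourthOpFits() {
  TinyOpResolver<3> resolver;
  resolver.Add("DEQUANTIZE");
  resolver.Add("DETECTION_POSTPROCESS");
  resolver.Add("EDGETPU_CUSTOM_OP");
  assert(resolver.size() == 3);
  return resolver.Add("CONV_2D");  // The table is full, so this is rejected.
}
```

In this sketch, `FourthOpFits()` returns `false` because the table was sized for three ops. The practical takeaway for real TFLM code is to keep the template argument in sync with the number of `Add...()` calls you make.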

If you prefer to code with the Arduino IDE, we offer Arduino-style APIs for most of the same features available in FreeRTOS (multi-core processing is not available in Arduino). All you need to do is install the "Coral" boards package in the Arduino IDE's Board Manager, select the Dev Board Micro board, and then you can browse all our examples for the Dev Board Micro in File > Examples.

You can learn more about the board and find a seller here, and start running the code examples by following our get started guide.
