August 15, 2018 —

Posted by Sara Robinson, Developer Advocate

We often see transfer learning applied to computer vision models, but what about using it for text classification? Enter TensorFlow Hub, a library for enhancing your TF models with transfer learning. Transfer learning is the process of taking the weights and variables of a pre-existing model that has already been trained on lots of data and leveraging i…

import numpy as np

import pandas as pd

import tensorflow as tf

import tensorflow_hub as hub

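from sklearn.preprocessing import MultiLabelBinarizer

Next, we'll download the CSV of movie metadata that we'll use to train our model. The leading ! runs wget as a shell command from a notebook cell (for example, in Colab or Jupyter):

!wget 'https://storage.googleapis.com/movies_data/movies_metadata.csv'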

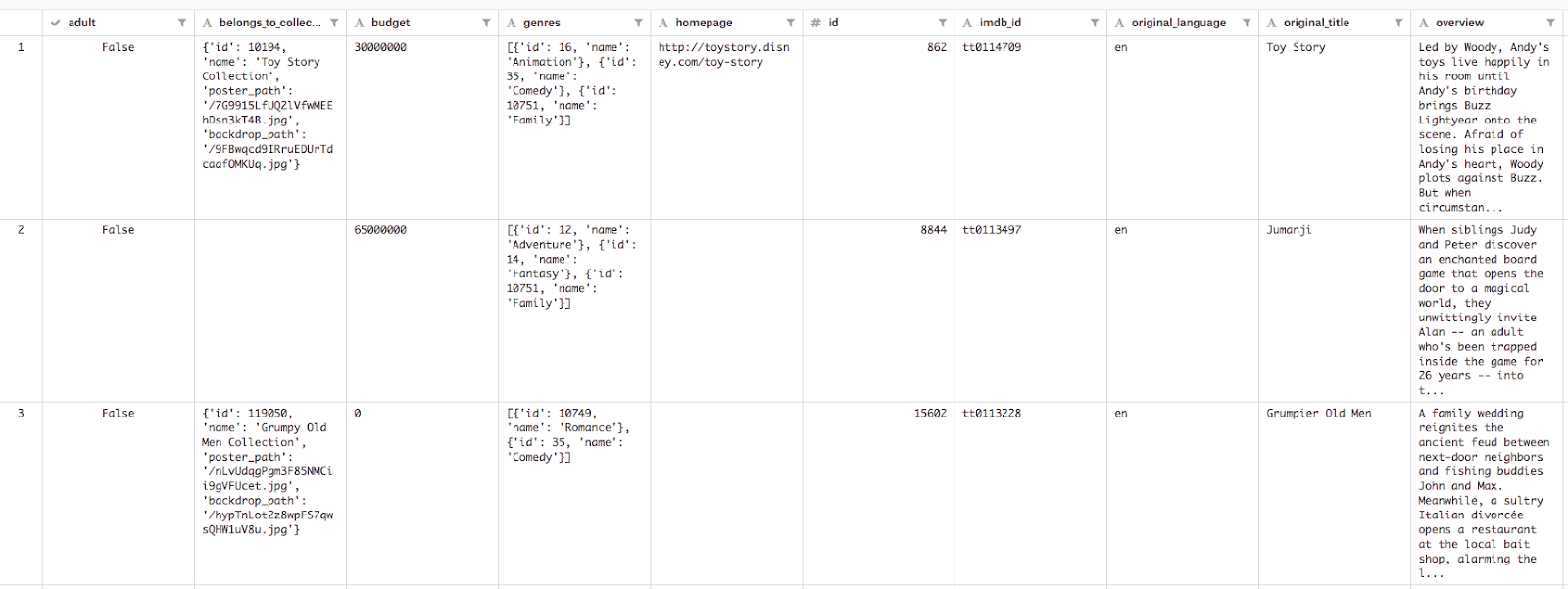

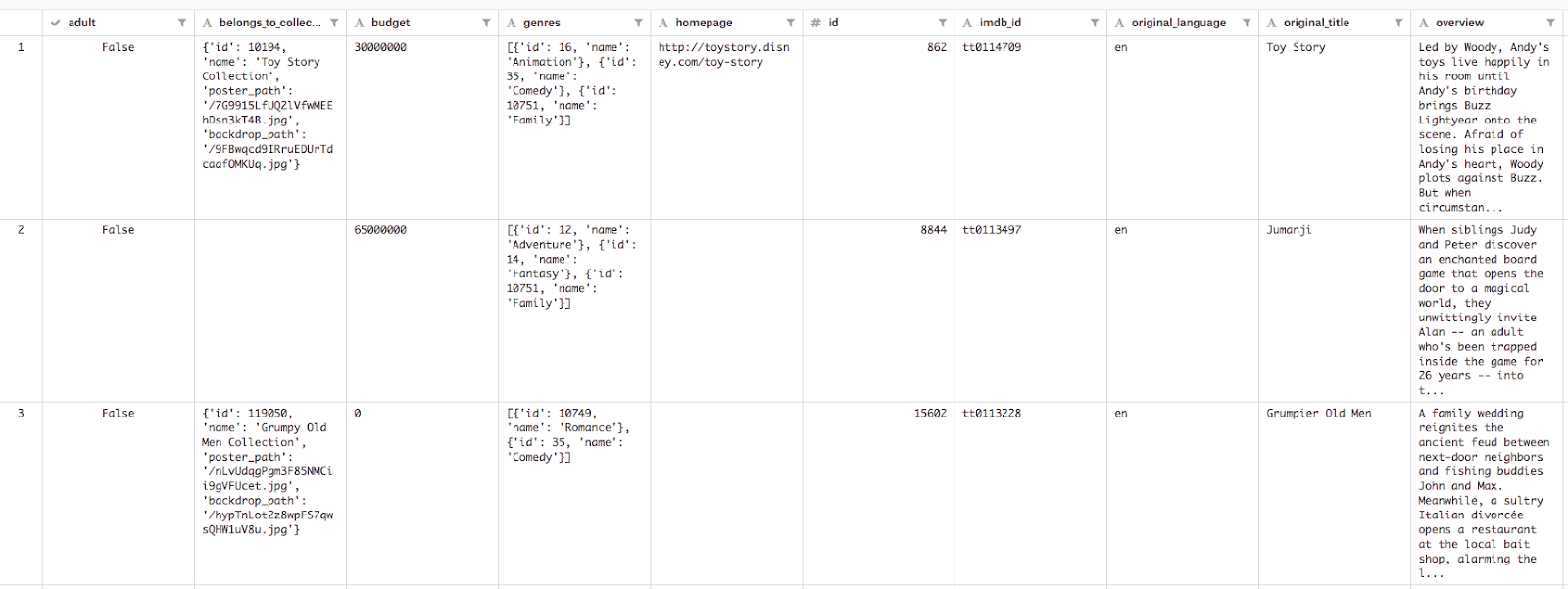

data = pd.read_csv('movies_metadata.csv')

descriptions = data['overview']

genres = data['genres']

top_genres = ['Comedy', 'Thriller', 'Romance', 'Action', 'Horror', 'Crime', 'Documentary', 'Adventure', 'Science Fiction']

We'll use 80% of our data for training and hold out the remaining 20% for testing:

train_size = int(len(descriptions) * .8)

train_descriptions = descriptions[:train_size]

train_genres = genres[:train_size]

test_descriptions = descriptions[train_size:]

test_genres = genres[train_size:]

The first layer of our model will be a pre-trained text embedding from TF Hub, the Universal Sentence Encoder. We can use hub.text_embedding_column to create a feature column for this layer in one line of code, passing it the feature key that our input functions will use ("descriptions") and the URL of the TF Hub module we'll be using:

description_embeddings = hub.text_embedding_column(

"descriptions",

module_spec="https://tfhub.dev/google/universal-sentence-encoder/2"

)

Next, we need to prepare our labels. Each movie's genres are a list of strings (for example, ['Action', 'Adventure']). Since each label needs to be the same length, we'll transform these lists into multi-hot vectors of 1s and 0s corresponding to the genres present in a particular description. The multi-hot vector for an Action and Adventure movie would look like the following:

# Genre lookup, each genre corresponds to an index

top_genres = ['Comedy', 'Thriller', 'Romance', 'Action', 'Horror', 'Crime', 'Documentary', 'Adventure', 'Science Fiction']

# Multi-hot label for an action and adventure movie

[0 0 0 1 0 0 0 1 0]

To transform our string labels into multi-hot vectors, we'll use scikit-learn's MultiLabelBinarizer:

encoder = MultiLabelBinarizer()

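# Learn the genre vocabulary from the training labels and encode them

train_encoded = encoder.fit_transform(train_genres)

# Encode the test labels with the same genre-to-index mapping

test_encoded = encoder.transform(test_genres)

num_classes = len(encoder.classes_)

You can print encoder.classes_ to see a list of all the string classes your model is predicting. By default, MultiLabelBinarizer keeps these classes in sorted order, so each genre's position in the encoded vectors follows encoder.classes_ rather than the top_genres order shown above.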
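To make the encoding step concrete, here's a minimal pure-Python sketch of multi-hot encoding. The multi_hot helper is hypothetical and only for illustration (the tutorial itself uses MultiLabelBinarizer). It takes the class order as an explicit argument, so it reproduces the Action and Adventure vector from the top_genres example, and it also shows how the same labels land at different positions under sorted class order.

```python
from typing import List, Sequence


def multi_hot(labels: Sequence[str], classes: Sequence[str]) -> List[int]:
    """Encode a list of string labels as a multi-hot vector.

    Position i of the result is 1 if classes[i] appears in labels, else 0.
    Labels that are not in classes are ignored.
    """
    present = set(labels)
    return [1 if c in present else 0 for c in classes]


# Genre lookup, in the same order as top_genres in the tutorial
top_genres = ['Comedy', 'Thriller', 'Romance', 'Action', 'Horror',
              'Crime', 'Documentary', 'Adventure', 'Science Fiction']

print(multi_hot(['Action', 'Adventure'], top_genres))
# [0, 0, 0, 1, 0, 0, 0, 1, 0]

# With the classes sorted, Action and Adventure move to the front
print(multi_hot(['Action', 'Adventure'], sorted(top_genres)))
# [1, 1, 0, 0, 0, 0, 0, 0, 0]
```

If you'd rather have MultiLabelBinarizer use the top_genres order, its constructor accepts a classes argument, for example MultiLabelBinarizer(classes=top_genres).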

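Now we can define our model, starting with its head. The head computes the model's loss and predictions from the output of the network, and defines the type of labels our model should expect. Since we want our model to output multiple labels, we'll use multi_label_head here: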

multi_label_head = tf.contrib.estimator.multi_label_head(

num_classes,

loss_reduction=tf.losses.Reduction.SUM_OVER_BATCH_SIZE

)

Next, we pass this head to a DNNEstimator. The hidden_units parameter lists the number of neurons in each hidden layer, so its length sets how many hidden layers our network has. This model has 2 hidden layers: the first has 64 neurons and the second has 10. The number and size of the layers are hyperparameters, so you should try out different values to see what works best for your dataset. Finally, we pass our feature columns to the Estimator. In this case we have only one (the description), and we've already defined it as a TF Hub embedding column above, so we can pass that here as a list:

estimator = tf.contrib.estimator.DNNEstimator(

head=multi_label_head,

hidden_units=[64,10],

feature_columns=[description_embeddings]

)

To train our model, we'll use numpy_input_fn to create an input function that feeds our data to the model as numpy arrays:

# Format our data for the numpy_input_fn

features = {

# Cast to str to match the string input the embedding column expects

"descriptions": np.array(train_descriptions).astype(str)

}

labels = np.array(train_encoded).astype(np.int32)

train_input_fn = tf.estimator.inputs.numpy_input_fn(

features,

labels,

shuffle=True,

batch_size=32,

num_epochs=20

)

The batch_size and num_epochs parameters in our input function are both hyperparameters. batch_size sets how many examples are passed to our model in one training iteration, and num_epochs is the number of times our model will go through the entire training set.
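As a rough guide to how these two hyperparameters interact, here's a small sketch that computes the approximate number of training steps (batches) in a run. The training_steps helper is hypothetical, and it assumes the final batch may be smaller than batch_size; the exact count depends on how the input function batches the data.

```python
def training_steps(num_examples: int, batch_size: int, num_epochs: int) -> int:
    """Approximate number of training steps (batches) for a full run.

    Each step consumes up to batch_size examples, and every example is
    seen num_epochs times, so a run processes num_examples * num_epochs
    examples. Integer ceiling division counts a final partial batch as
    one step.
    """
    if batch_size <= 0 or num_examples < 0 or num_epochs < 0:
        raise ValueError("batch_size must be positive; counts must be non-negative")
    total_examples = num_examples * num_epochs
    return (total_examples + batch_size - 1) // batch_size


# Hypothetical training-set size; in the tutorial you'd use len(train_descriptions)
print(training_steps(1000, batch_size=32, num_epochs=20))
```

With these hypothetical 1,000 examples, batch_size=32 and num_epochs=20 works out to 625 steps. Doubling batch_size roughly halves the number of steps, while doubling num_epochs doubles it.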

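Now we're ready to train our model:

estimator.train(input_fn=train_input_fn)

Once training finishes, we can measure how our model performs on the test set with estimator.evaluate():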

eval_input_fn = tf.estimator.inputs.numpy_input_fn({"descriptions": np.array(test_descriptions).astype(str)}, test_encoded.astype(np.int32), shuffle=False)

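estimator.evaluate(input_fn=eval_input_fn)

Next, let's generate predictions for a few new movie descriptions. The comment after each description lists the genres we'd expect:

raw_test = [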

"An examination of our dietary choices and the food we put in our bodies. Based on Jonathan Safran Foer's memoir.", # Documentary

"A teenager tries to survive the last week of her disastrous eighth-grade year before leaving to start high school.", # Comedy

"Ethan Hunt and his IMF team, along with some familiar allies, race against time after a mission gone wrong." # Action, Adventure

]

Then we create a prediction input function and pass it to predict():

predict_input_fn = tf.estimator.inputs.numpy_input_fn({"descriptions": np.array(raw_test).astype(str)}, shuffle=False)

results = estimator.predict(predict_input_fn)

Finally, we can iterate through the results and print the two most likely genres for each movie, along with their probabilities:

for movie_genres in results:

    # Indices of the two highest probabilities, in descending order
    top_2 = movie_genres['probabilities'].argsort()[-2:][::-1]

    for genre in top_2:

        text_genre = encoder.classes_[genre]

        print(text_genre + ': ' + str(round(movie_genres['probabilities'][genre] * 100, 2)) + '%')
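Because multi_label_head treats each genre as an independent yes/no decision, each entry in movie_genres['probabilities'] is the sigmoid of that class's logit, so the values don't need to sum to 1. Here's a minimal pure-Python sketch of that post-processing. The logits are made up (not real model output), and top_k_genres and genres_above are hypothetical helpers: the first mirrors the argsort-based top-2 selection above, and the second shows one alternative, keeping every genre above an assumed 0.5 threshold.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(x: float) -> float:
    """Logistic function: maps a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def top_k_genres(probabilities: Sequence[float], classes: Sequence[str],
                 k: int = 2) -> List[Tuple[str, float]]:
    """Return the k most probable classes with their probabilities, highest first."""
    order = sorted(range(len(probabilities)),
                   key=lambda i: probabilities[i], reverse=True)
    return [(classes[i], probabilities[i]) for i in order[:k]]


def genres_above(probabilities: Sequence[float], classes: Sequence[str],
                 threshold: float = 0.5) -> List[str]:
    """Return every class whose probability exceeds threshold."""
    return [c for c, p in zip(classes, probabilities) if p > threshold]


# Made-up logits for one movie, listed in sorted class order (illustration only)
classes = ['Action', 'Adventure', 'Comedy', 'Crime', 'Documentary',
           'Horror', 'Romance', 'Science Fiction', 'Thriller']
logits = [2.0, 1.0, -1.5, -0.5, -3.0, -2.0, -2.5, 0.2, -0.1]

# Each class gets its own sigmoid, so the probabilities are independent
probabilities = [sigmoid(z) for z in logits]

for genre, p in top_k_genres(probabilities, classes, k=2):
    print(genre + ': ' + str(round(p * 100, 2)) + '%')

print(genres_above(probabilities, classes))
```

A fixed top-2 always returns two genres, while a threshold lets the number of predicted genres vary per movie; which works better depends on your data.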
