![model](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEih3dh8TfcreBO1iYiVYsVl0KyhxL_Lv77mGLB6xmLDXd4eFd-l9Az9Jx-U2mZRXopNOtJoRGYX8fFk_XdTSGjfVsKKat9erGMcIjOQ-G4ERedqcv7-z4urSJS-0XZrp1SUszOmi7U-GHc/s1600/model.png)

Posted by the TensorFlow Team

Thanks to an incredible and diverse community, TensorFlow has grown to become one of the most loved and widely adopted ML platforms in the world. This community includes:

In November, TensorFlow celebrated its 3rd birthday with a look back at the features added throughout the years. We’re excited about another major milestone, TensorFlow 2.0. TensorFlow 2.0 will focus on simplicity and ease of use, featuring updates like:

- Easy model building with Keras and eager execution.

- Robust model deployment in production on any platform.

- Powerful experimentation for research.

- Simplifying the API by cleaning up deprecated APIs and reducing duplication.

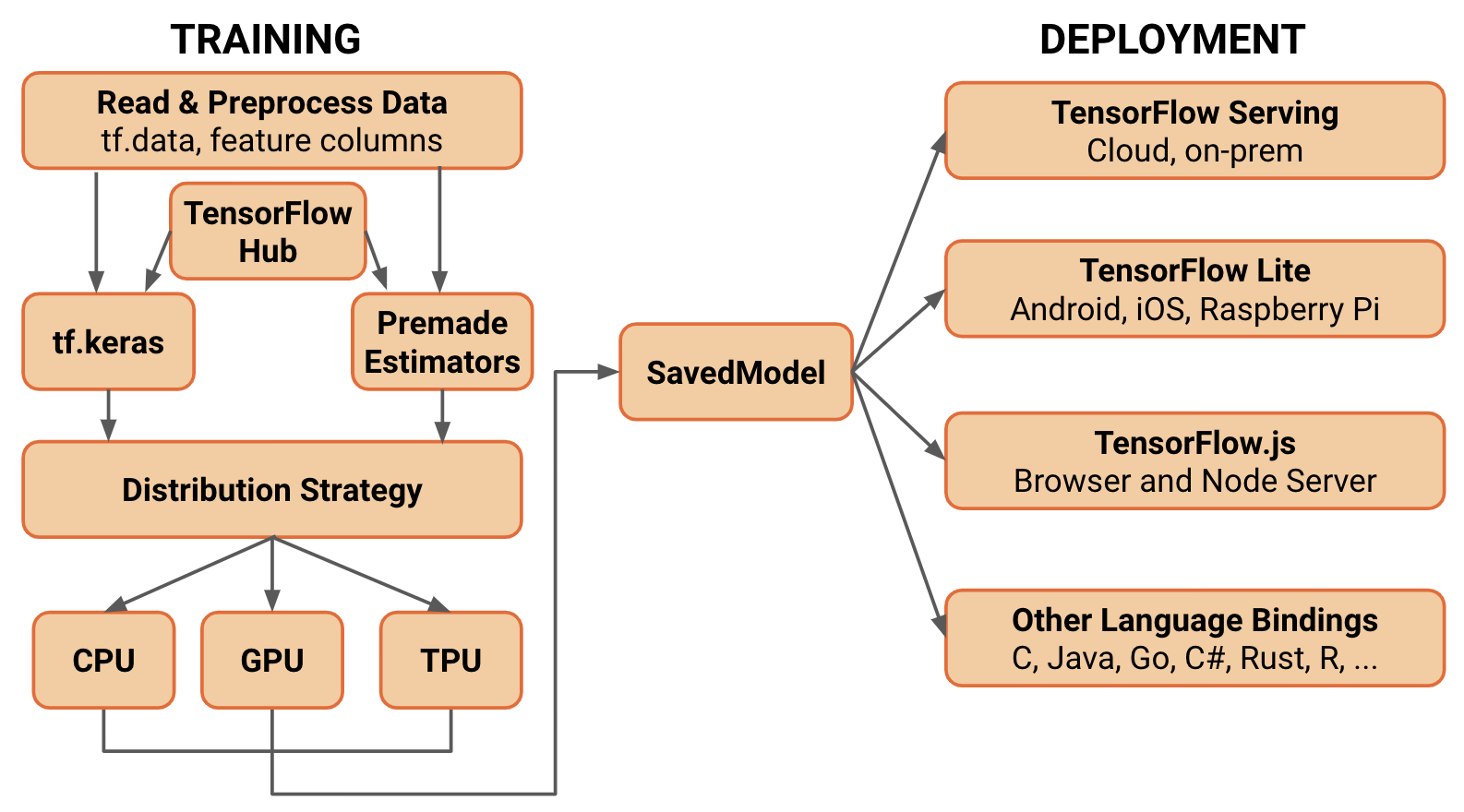

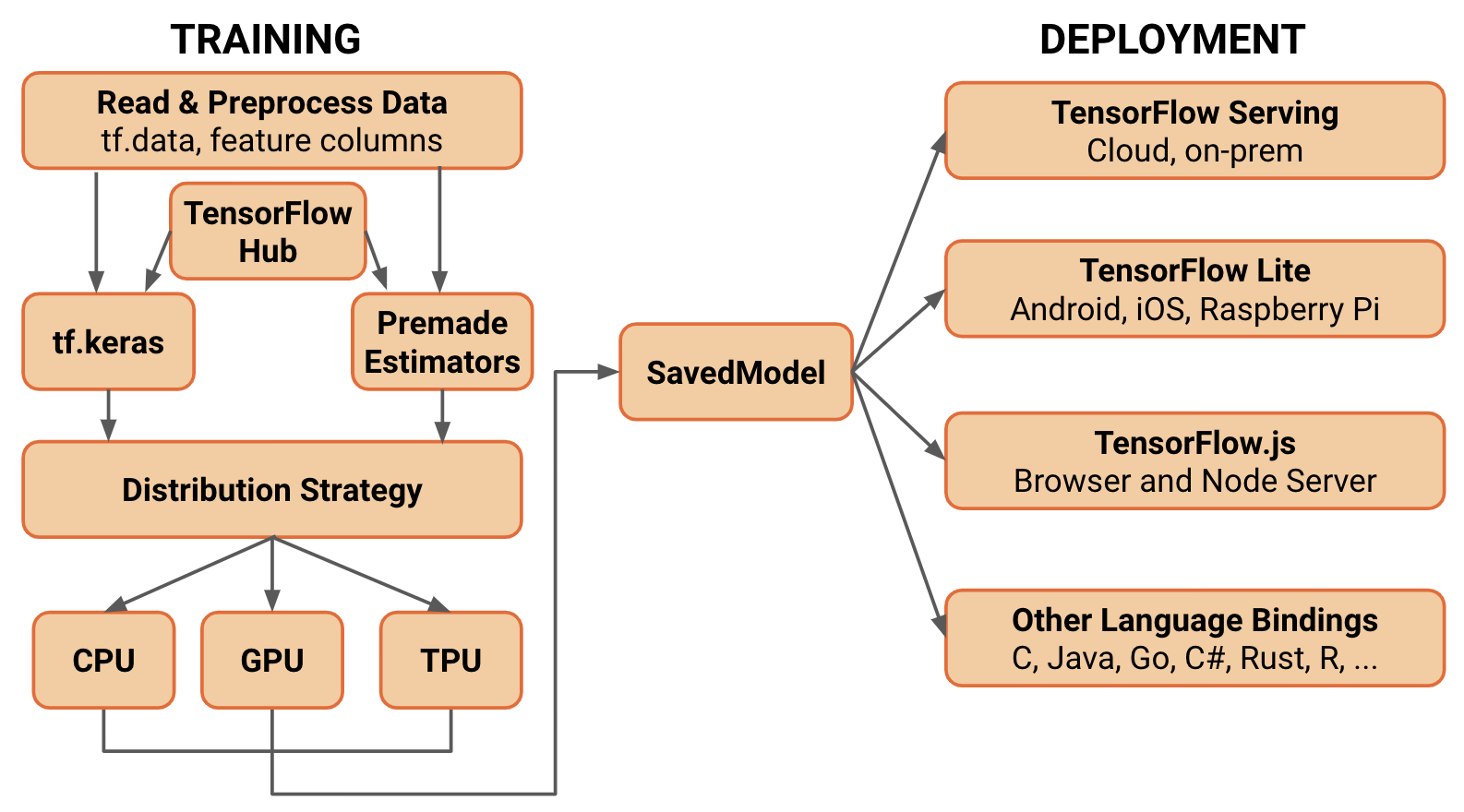

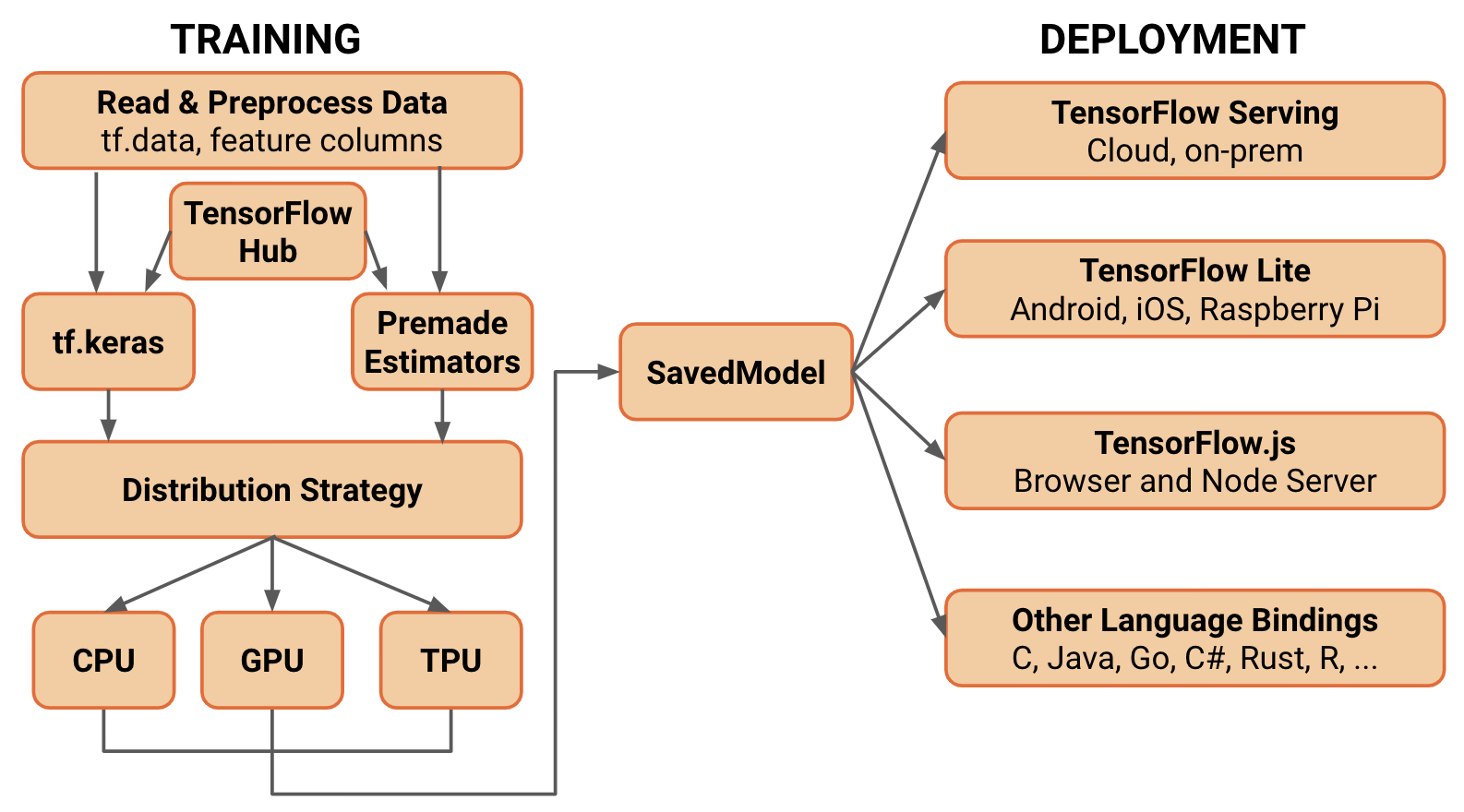

Over the last few years, we’ve added a number of components to TensorFlow. With TensorFlow 2.0, these will be packaged together into a comprehensive platform that supports machine learning workflows from training through deployment. Let’s take a look at the new architecture of TensorFlow 2.0 using a simplified, conceptual diagram as shown below:

Note: Although the training part of this diagram focuses on the Python API, TensorFlow.js also supports training models. Other language bindings also exist with various degrees of support, including Swift, R, and Julia.

Easy model building

In a recent blog post we announced that Keras, a user-friendly API standard for machine learning, will be the central high-level API used to build and train models. The Keras API makes it easy to get started with TensorFlow. Importantly, Keras provides several model-building APIs (Sequential, Functional, and Subclassing), so you can choose the right level of abstraction for your project. TensorFlow’s implementation contains enhancements including eager execution, for immediate iteration and intuitive debugging, and tf.data, for building scalable input pipelines.
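To compare the three Keras model-building styles side by side, here is a minimal sketch that builds the same tiny classifier (4 input features, 16 hidden units, 3 classes) each way. It assumes a TensorFlow 2.x installation; the layer sizes and the `SubclassedModel` name are hypothetical choices for illustration, not an official example.

```python
import numpy as np
import tensorflow as tf

# Sketch assuming TensorFlow 2.x; sizes and SubclassedModel are hypothetical.

# 1. Sequential: a linear stack of layers.
sequential_model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])

# 2. Functional: layers are called on symbolic inputs to form a graph.
inputs = tf.keras.Input(shape=(4,))
hidden = tf.keras.layers.Dense(16, activation="relu")(inputs)
outputs = tf.keras.layers.Dense(3, activation="softmax")(hidden)
functional_model = tf.keras.Model(inputs=inputs, outputs=outputs)

# 3. Subclassing: layers are created in __init__, the forward pass in call().
class SubclassedModel(tf.keras.Model):
    def __init__(self):
        super().__init__()
        self.hidden = tf.keras.layers.Dense(16, activation="relu")
        self.classifier = tf.keras.layers.Dense(3, activation="softmax")

    def call(self, x):
        return self.classifier(self.hidden(x))

subclassed_model = SubclassedModel()

# All three accept the same batch and return per-class probabilities.
batch = np.random.rand(2, 4).astype("float32")
for model in (sequential_model, functional_model, subclassed_model):
    print(type(model).__name__, tuple(model(batch).shape))
```

Sequential is the shortest path for plain stacks, Functional handles branching topologies while keeping the model inspectable, and Subclassing trades some of that introspection for arbitrary Python in `call()`.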

Here’s an example workflow (in the coming months, we will be working to update the guides linked below):

- Load your data using tf.data. Training data is read using input pipelines created with tf.data. Feature characteristics, for example bucketing and feature crosses, are described using tf.feature_column. Convenient input from in-memory data (for example, NumPy) is also supported.

- Build, train, and validate your model with tf.keras, or use Premade Estimators. Keras integrates tightly with the rest of TensorFlow, so you can access TensorFlow’s features whenever you want. A set of standard packaged models (for example, linear or logistic regression, gradient boosted trees, random forests) is also available to use directly (implemented using the tf.estimator API). If you’re not looking to train a model from scratch, you’ll soon be able to use transfer learning to train a Keras or Estimator model using modules from TensorFlow Hub.

- Run and debug with eager execution, then use tf.function for the benefits of graphs. TensorFlow 2.0 runs with eager execution by default for ease of use and smooth debugging. Additionally, the tf.function annotation transparently translates your Python programs into TensorFlow graphs. This process retains all the advantages of 1.x TensorFlow graph-based execution (performance optimizations, remote execution, and the ability to serialize, export, and deploy easily) while adding the flexibility and ease of use of expressing programs in simple Python.

- Use Distribution Strategies for distributed training. For large ML training tasks, the Distribution Strategy API makes it easy to distribute and train models on different hardware configurations without changing the model definition. Since TensorFlow supports a range of hardware, including CPUs, GPUs, and TPUs, you can distribute training workloads across single-node/multi-accelerator as well as multi-node/multi-accelerator configurations, including TPU Pods. The API supports a variety of cluster configurations, and templates are provided for deploying training on Kubernetes clusters in on-prem or cloud environments.

- Export to SavedModel. TensorFlow will standardize on SavedModel as an interchange format for TensorFlow Serving, TensorFlow Lite, TensorFlow.js, TensorFlow Hub, and more.
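The workflow steps above can be strung together in one short script. This is a sketch under stated assumptions: TensorFlow 2.x is installed, the data is random and purely illustrative, and names such as `predict`, `bundle`, and `export_dir` are hypothetical. To stay short it skips `tf.feature_column` and Premade Estimators and uses `MirroredStrategy`, which falls back to the CPU when no GPU is present.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Sketch assuming TensorFlow 2.x; data is random and names are hypothetical.

# 1. Load data with tf.data, here from in-memory NumPy arrays.
features = np.random.rand(256, 4).astype("float32")
labels = (features.sum(axis=1) > 2.0).astype("int32")
dataset = (
    tf.data.Dataset.from_tensor_slices((features, labels))
    .shuffle(buffer_size=256)
    .batch(32)
    .prefetch(tf.data.AUTOTUNE)
)

# 2. Build and compile the model inside a Distribution Strategy scope.
# MirroredStrategy replicates across local GPUs, or uses the CPU if none exist.
strategy = tf.distribute.MirroredStrategy()
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(16, activation="relu"),
        tf.keras.layers.Dense(2),  # logits for two classes
    ])
    model.compile(
        optimizer="adam",
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=["accuracy"],
    )

# 3. Train. fit() traces its training step into a graph by default;
# compile(..., run_eagerly=True) runs it eagerly for step-by-step debugging.
history = model.fit(dataset, epochs=3, verbose=0)

# 4. tf.function traces this Python function into a TensorFlow graph.
@tf.function(input_signature=[tf.TensorSpec([None, 4], tf.float32)])
def predict(x):
    return tf.nn.softmax(model(x, training=False))

# 5. Export to SavedModel: attach the model (its variables) and the traced
# function to a tf.Module so both are saved, then load it back.
bundle = tf.Module()
bundle.model = model
bundle.predict = predict
export_dir = os.path.join(tempfile.mkdtemp(), "my_model")
tf.saved_model.save(bundle, export_dir)
reloaded = tf.saved_model.load(export_dir)
probabilities = reloaded.predict(tf.constant(features[:5]))
```

The reloaded object no longer needs the Python class definitions; it carries the traced graph and the trained variables, which is what makes SavedModel usable as an interchange format.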

Robust model deployment in production on any platform

TensorFlow has always provided a direct path to production. Whether it’s on servers, edge devices, or the web, TensorFlow lets you train and deploy your model easily, no matter what language or platform you use. In TensorFlow 2.0, we’re improving compatibility and parity across platforms and components by standardizing exchange formats and aligning APIs.

Once you’ve trained and saved your model, you can execute it directly in your application or serve it using one of the deployment libraries:

- TensorFlow Serving: A TensorFlow library allowing models to be served over HTTP/REST or gRPC/Protocol Buffers.

- TensorFlow Lite: TensorFlow’s lightweight solution for mobile and embedded devices provides the capability to deploy models on Android, iOS and embedded systems like a Raspberry Pi and Edge TPUs.

- TensorFlow.js: Enables deploying models in JavaScript environments, such as in a web browser or server side through Node.js. TensorFlow.js also supports defining models in JavaScript and training directly in the web browser using a Keras-like API.
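As a sketch of one of these deployment paths, the following converts a tiny model to TensorFlow Lite and runs it with the Python `tf.lite.Interpreter`, following the concrete-function pattern from the TensorFlow Lite converter guide. It assumes TensorFlow 2.7 or later (for passing the trackable object to the converter); the `TinyModel` module is hypothetical. For a model already exported as a SavedModel, `tf.lite.TFLiteConverter.from_saved_model` is the corresponding entry point.

```python
import numpy as np
import tensorflow as tf

# Sketch assuming TensorFlow 2.7+; TinyModel is a hypothetical example model.
class TinyModel(tf.Module):
    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([4, 3]))
        self.b = tf.Variable(tf.zeros([3]))

    # A fixed input signature gives the converter concrete shapes and dtypes.
    @tf.function(input_signature=[tf.TensorSpec([1, 4], tf.float32)])
    def __call__(self, x):
        return tf.nn.softmax(tf.matmul(x, self.w) + self.b)

model = TinyModel()
concrete_fn = model.__call__.get_concrete_function()

# Convert to a TensorFlow Lite FlatBuffer, returned as bytes.
converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn], model)
tflite_bytes = converter.convert()

# Run the converted model with the Python TFLite interpreter.
interpreter = tf.lite.Interpreter(model_content=tflite_bytes)
interpreter.allocate_tensors()
input_index = interpreter.get_input_details()[0]["index"]
output_index = interpreter.get_output_details()[0]["index"]

sample = np.random.rand(1, 4).astype("float32")
interpreter.set_tensor(input_index, sample)
interpreter.invoke()
tflite_out = interpreter.get_tensor(output_index)

# The original TensorFlow model, for comparison.
tf_out = model(tf.constant(sample)).numpy()
```

On a device, the same `tflite_bytes` would typically be written to a `.tflite` file and loaded by the platform's TensorFlow Lite runtime instead of the Python interpreter.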

TensorFlow also has support for additional languages (some maintained by the broader community), including C, Java, Go, C#, Rust, Julia, R, and others.

Powerful experimentation for research

TensorFlow makes it easy to take new ideas from concept to code, and from model to publication. TensorFlow 2.0 incorporates a number of features that enable the definition and training of state-of-the-art models without sacrificing speed or performance:

- Keras Functional API and Model Subclassing API: Allow the creation of complex topologies, including residual layers, custom multi-input/multi-output models, and imperatively written forward passes.

- Custom Training Logic: Fine-grained control over gradient computations with tf.GradientTape and tf.custom_gradient.

- And for even more flexibility and control, the low-level TensorFlow API is always available and working in conjunction with the higher level abstractions for fully customizable logic.
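Custom training logic with `tf.GradientTape` and `tf.custom_gradient` can look like the following minimal sketch, assuming TensorFlow 2.x. The gradient-clipping rule inside `clip_gradient` and the toy regression target (y = 3x) are hypothetical choices made to keep the example small; the weight is updated by hand rather than through an optimizer so the gradient flow stays visible.

```python
import tensorflow as tf

# Sketch assuming TensorFlow 2.x. The clipping rule and the toy target
# y = 3x are hypothetical choices for illustration.

@tf.custom_gradient
def clip_gradient(x):
    """Identity on the forward pass; clips the incoming gradient to [-1, 1]."""
    def grad(upstream):
        return tf.clip_by_value(upstream, -1.0, 1.0)
    return tf.identity(x), grad

w = tf.Variable(0.0)
xs = tf.constant([1.0, 2.0, 3.0])
ys = 3.0 * xs
learning_rate = 0.1

for step in range(100):
    # GradientTape records the operations on w so the loss can be differentiated.
    with tf.GradientTape() as tape:
        predictions = clip_gradient(w * xs)
        loss = tf.reduce_mean(tf.square(predictions - ys))
    grad_w = tape.gradient(loss, w)
    w.assign_sub(learning_rate * grad_w)  # plain gradient descent step

print(float(w))  # converges toward 3.0
```

Because the custom gradient only bounds each per-element gradient, early steps are small and steady; once the predictions are close to the targets the clip no longer applies and ordinary gradient descent takes over.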

TensorFlow 2.0 brings several new additions that allow researchers and advanced users to experiment, using rich extensions like Ragged Tensors, TensorFlow Probability, Tensor2Tensor, and more to be announced.
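Ragged Tensors hold rows of different lengths without padding them to a common size. A minimal sketch, assuming TensorFlow 2.x, using a made-up batch of tokenized sentences:

```python
import tensorflow as tf

# Sketch assuming TensorFlow 2.x; the sentences are made-up sample data.
sentences = tf.ragged.constant([
    ["the", "cat", "sat"],
    ["hello"],
    ["ragged", "tensors", "are", "handy"],
])

# Number of tokens in each row: [3, 1, 4].
row_lengths = sentences.row_lengths()

# Apply an elementwise op to every token, keeping the ragged structure.
token_lengths = tf.ragged.map_flat_values(tf.strings.length, sentences)

# Reductions work per row without any padding: [9, 5, 21].
chars_per_sentence = tf.reduce_sum(token_lengths, axis=1)

# Convert to a dense, zero-padded tensor only when a fixed shape is needed.
padded = token_lengths.to_tensor(default_value=0)
```

Keeping the data ragged until the last step avoids computing on padding values and the masking logic that padding usually requires.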

Along with these capabilities, TensorFlow provides eager execution for easy prototyping and debugging, the Distribution Strategy API and AutoGraph to train at scale, and support for TPUs, making TensorFlow 2.0 an easy-to-use, customizable, and highly scalable platform for conducting state-of-the-art ML research and translating that research into production pipelines.
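AutoGraph is what lets `tf.function` accept ordinary Python control flow. In the minimal sketch below, assuming TensorFlow 2.x, the `while` loop and the `if` statement depend on tensor values, so AutoGraph converts them into graph control-flow operations; the Collatz step counter is only a hypothetical example workload.

```python
import tensorflow as tf

# Sketch assuming TensorFlow 2.x; collatz_steps is a hypothetical example.
@tf.function
def collatz_steps(n):
    """Counts the Collatz steps needed to reach 1, entirely in graph mode."""
    steps = tf.constant(0)
    # n is a tensor, so AutoGraph turns this loop into a graph while loop
    # and the branch below into a graph conditional.
    while n > 1:
        if n % 2 == 0:
            n = n // 2
        else:
            n = 3 * n + 1
        steps += 1
    return steps

print(int(collatz_steps(tf.constant(27))))  # 111

# AutoGraph can show the Python code it generates for the graph version.
generated = tf.autograph.to_code(collatz_steps.python_function)
```

If the argument were a plain Python `int` instead of a tensor, the loop would simply run in Python while the function is traced, so passing tensors is what makes the control flow part of the graph.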

Differences between TensorFlow 1.x and 2.0

There have been a number of versions and API iterations since we first open-sourced TensorFlow. With the rapid evolution of ML, the platform has grown enormously and now supports a diverse mix of users with a diverse mix of needs. With TensorFlow 2.0, we have an opportunity to clean up and modularize the platform based on semantic versioning.

Here are some of the larger changes coming:

Additionally, tf.contrib will be removed from the core TensorFlow repository and build process. TensorFlow’s contrib module has grown beyond what can be maintained and supported in a single repository. Larger projects are better maintained separately, while smaller extensions will graduate to the core TensorFlow code. A special interest group (SIG) has been formed to maintain and further develop some of the more important contrib projects going forward. Please engage with this RFC if you are interested in contributing.

Compatibility and Continuity

To simplify the migration to TensorFlow 2.0, there will be a conversion tool which updates TensorFlow 1.x Python code to use TensorFlow 2.0 compatible APIs, or flags cases where code cannot be converted automatically.

Not all changes can be made completely automatically. For example, some deprecated APIs do not have a direct equivalent. That’s why we introduced the tensorflow.compat.v1 compatibility module, which retains support for the full TensorFlow 1.x API (excluding tf.contrib). This module will be maintained through the lifetime of TensorFlow 2.x and will allow code written with TensorFlow 1.x to remain functional.
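To illustrate what the compatibility module retains, here is a minimal sketch, assuming TensorFlow 2.x, of 1.x-style graph code (a placeholder, an explicitly initialized variable, and `Session.run`) running through `tf.compat.v1`. Building the graph inside an explicit `tf.Graph` keeps the rest of the program in the default eager mode; the variable name `w` and the input values are hypothetical.

```python
import numpy as np
import tensorflow as tf

# Sketch assuming TensorFlow 2.x; names and values are hypothetical.
graph = tf.Graph()
with graph.as_default():
    # 1.x-style graph construction: nothing is computed yet.
    x = tf.compat.v1.placeholder(tf.float32, shape=[None, 2], name="x")
    w = tf.compat.v1.get_variable("w", initializer=tf.constant([[1.0], [2.0]]))
    y = tf.matmul(x, w)
    init = tf.compat.v1.global_variables_initializer()

# 1.x-style execution: initialize variables, then feed the placeholder.
with tf.compat.v1.Session(graph=graph) as sess:
    sess.run(init)
    result = sess.run(y, feed_dict={x: np.array([[1.0, 1.0], [2.0, 3.0]])})

print(result)  # [[3.], [8.]]
```

Outside the `graph.as_default()` block, eager execution is still active, so legacy and 2.x-style code can coexist in one program during a gradual migration.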

Additionally, SavedModels or stored GraphDefs will be backwards compatible. SavedModels saved with 1.x will continue to load and execute in 2.x. However, the changes in 2.0 will mean that variable names in raw checkpoints may change, so using a pre-2.0 checkpoint with code that has been converted to 2.0 is not guaranteed to work. See the Effective TensorFlow 2.0 guide for more details.
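One way to see how 2.x checkpoints can differ from 1.x ones: the object-based `tf.train.Checkpoint` keys each variable by the attribute path used to reach it, rather than by a variable-scope name. The minimal sketch below, assuming TensorFlow 2.x, lists the keys of such a checkpoint; the `TinyLayer` module and the variable name `old_style_kernel` are hypothetical.

```python
import os
import tempfile

import tensorflow as tf

# Sketch assuming TensorFlow 2.x; TinyLayer and "old_style_kernel" are
# hypothetical names chosen for illustration.
class TinyLayer(tf.Module):
    def __init__(self):
        super().__init__()
        self.kernel = tf.Variable(tf.ones([2, 2]), name="old_style_kernel")

checkpoint = tf.train.Checkpoint(layer=TinyLayer())
save_path = checkpoint.save(os.path.join(tempfile.mkdtemp(), "ckpt"))

# Keys follow the object path (checkpoint -> layer -> kernel), not the
# variable's own name.
keys = [key for key, _ in tf.train.list_variables(save_path)]
for key in keys:
    print(key)
```

Restoring such a checkpoint matches variables by that object path, which is why code restructured during a conversion may no longer line up with the keys in a checkpoint written by the pre-conversion code.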

We believe TensorFlow 2.0 will bring great benefits to the community, and we have invested significant effort to make the conversion as easy as possible. However, we also recognize that migrating current pipelines will take time, and we care deeply about the investment the community has made in learning and using TensorFlow. We will provide 12 months of security patches to the last 1.x release, in order to give our existing users ample time to transition and get all the benefits of TensorFlow 2.0.

Timeline for TensorFlow 2.0

TensorFlow 2.0 will be available as a public preview early this year. But why wait? You can already develop the TensorFlow 2.0 way by using tf.keras and eager execution, pre-packaged models and the deployment libraries. The Distribution Strategy API is also already partly available today.

We’re very excited about TensorFlow 2.0 and the changes to come. TensorFlow has grown from a software library for deep learning to an entire ecosystem for all types of ML. TensorFlow 2.0 will be simple and easy to use for all users on all platforms.

Please consider joining the TensorFlow community to stay up-to-date and help make machine learning accessible to everyone!