https://blog.tensorflow.org/2019/03/build-ai-that-works-offline-with-coral.html?hl=sl

Build AI that works offline with Coral Dev Board, Edge TPU, and TensorFlow Lite

Posted by Daniel Situnayake (@dansitu), Developer Advocate for TensorFlow Lite.

When you think about the hardware that powers machine learning, you might picture endless rows of power-hungry processors crunching terabytes of data in a distant server farm, or hefty desktop computers stuffed with banks of GPUs.

You’re unlikely to picture an integrated circuit, 40mm x 48mm, that sits neatly on a development board the size of a credit card. You definitely won’t picture a sleek aluminium box, the size of a toy car, hooked to your computer with a single USB-C cable.

These new devices are made by Coral, Google’s new platform for enabling embedded developers to build amazing experiences with local AI. Coral’s first products are powered by Google’s Edge TPU chip, and are purpose-built to run TensorFlow Lite, TensorFlow’s lightweight solution for mobile and embedded devices. As a developer, you can use Coral devices to explore and prototype new applications for on-device machine learning inference.

Coral’s Dev Board is a single-board Linux computer with a removable System-On-Module (SOM) hosting the Edge TPU. It allows you to prototype applications and then scale to production by including the SOM in your own devices. The Coral USB Accelerator is a USB accessory that brings an Edge TPU to any compatible Linux computer. It’s designed to fit right on top of a Raspberry Pi Zero.

In this blog post, we’ll be exploring the exciting applications of machine learning “at the edge”, and we’ll learn how TensorFlow Lite and Coral can help you build AI into hardware products. You’ll see how our pre-trained models can get you up and running with no ML experience, and meet the conversion tools that help you optimize your own models for local use.

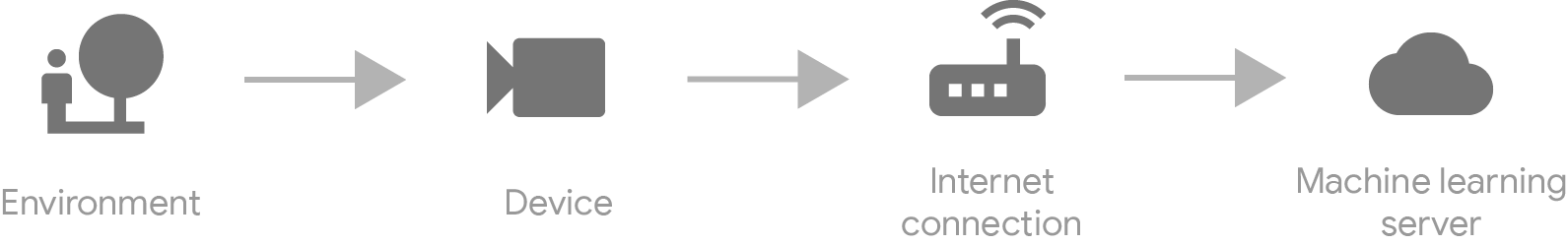

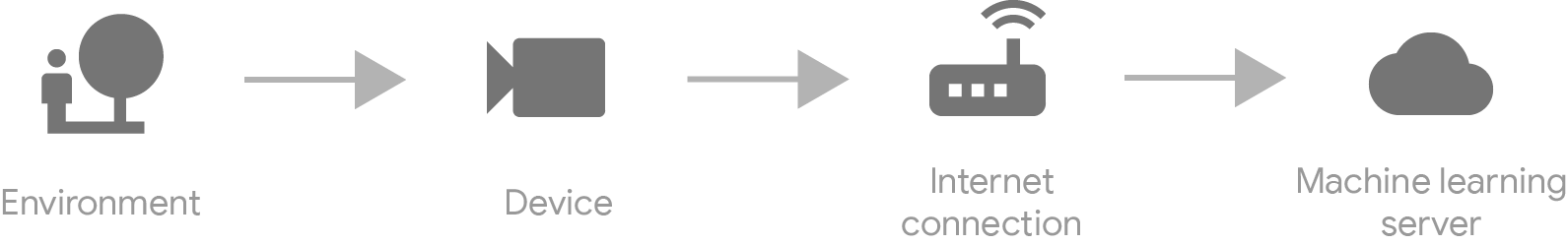

Inference at the edge

Until recently, deploying a machine learning model in production meant running it on some kind of server. If you wanted to detect known objects in a video, you’d have to stream it to your backend, where some powerful hardware would run inference (i.e. detect any objects present) and inform the device of the results.

This works just fine for many applications, but what if we could do without the server and stay on-device?

TensorFlow Lite makes it possible to run high performance inference at the edge, which enables some amazing new experiences.

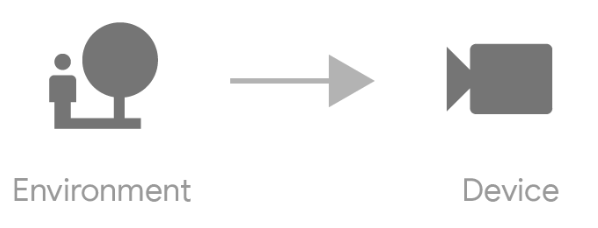

Offline inference

With edge inference, you no longer rely on an internet connection. Where connectivity is slow or expensive, you can build smart sensors that understand what they see and only transmit the interesting parts.
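As a rough illustration of the "only transmit the interesting parts" idea, the sketch below filters model outputs on the device before anything is sent. The `Detection` class, the `select_for_upload` function, the labels, and the 0.6 threshold are all hypothetical; on a real device the detections would come from a TensorFlow Lite model rather than a hard-coded list.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object reported by an on-device model (hypothetical structure)."""
    label: str
    score: float  # model confidence, assumed to lie in [0, 1]


def select_for_upload(detections, wanted_labels, min_score=0.6):
    """Keep only confident detections of the labels we care about."""
    return [
        d for d in detections
        if d.label in wanted_labels and d.score >= min_score
    ]


# Hard-coded stand-in for the output of one inference pass on a camera frame.
frame_detections = [
    Detection("deer", 0.91),
    Detection("leaf", 0.88),   # confident, but not an animal of interest
    Detection("fox", 0.42),    # of interest, but below the confidence threshold
]

to_send = select_for_upload(frame_detections, wanted_labels={"deer", "fox"})
for detection in to_send:
    print(f"would transmit: {detection.label} ({detection.score:.2f})")
```

Only the frames that pass this filter would need to use the network connection, which is what keeps bandwidth use low when connectivity is scarce.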

This means you can deploy ML in totally new situations and places. Imagine a smart camera that identifies wildlife in remote locations, or an imaging device that helps make medical diagnoses in areas far from wireless infrastructure.

Figure: Monitoring wildlife with smart cameras

Minimal latency

Sending data to a server involves a round-trip delay, which gets in the way when working with real-time data. This is no longer an issue when our model is at the edge!

When inference is super fast we can solve problems that depend on performance, like adding real-time object tracking to a robot that navigates human spaces, or synthesizing audio during a live musical performance.

Figure: Synthesizing audio during a live performance

Privacy and security

When data stays at the edge, users benefit from increased privacy and security, since personal information never leaves their devices.

This enables new, privacy-conscious applications, like security cameras that only record video when a likely threat is detected, or health devices that analyze personal metrics without sending data to the cloud.

Figure: Analyzing health metrics without sending data to the cloud

Performance

All of these new applications depend on high-performance inference that is only possible with hardware acceleration by the Edge TPU. But how much does this really speed things up?

In our internal benchmarks using different versions of MobileNet, a robust model architecture commonly used for image classification on edge devices, inference with Edge TPU is 70 to 100 times faster than on CPU.

Using MobileNets for face detection we’ve run inference at 70 to 100 frames per second, and for food recognition we’ve achieved 200 frames per second. This is enough for better-than-real-time inference of video, even when running several models simultaneously.
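To see why these frame rates leave room for several models, it can help to turn them into per-frame time budgets. The sketch below is back-of-the-envelope arithmetic only. It assumes "real time" means 30 frames per second video, that the two models run one after another on each frame, and that pre- and post-processing overhead is ignored. None of these assumptions come from the benchmarks themselves.

```python
def frame_time_ms(frames_per_second):
    """Time available (or needed) per frame, in milliseconds."""
    return 1000.0 / frames_per_second


# Throughput figures quoted above; the slower end of the face detection range.
face_ms = frame_time_ms(70)    # about 14.3 ms per frame
food_ms = frame_time_ms(200)   # 5 ms per frame

# Assumption: the video source delivers 30 frames per second.
video_budget_ms = frame_time_ms(30)

# Assumption: both models run back to back on every frame.
combined_ms = face_ms + food_ms
fits_in_real_time = combined_ms <= video_budget_ms

print(f"combined inference time: {combined_ms:.1f} ms")
print(f"per-frame budget at 30 fps: {video_budget_ms:.1f} ms")
print(f"fits in real time: {fits_in_real_time}")
```

Under these assumptions the two models together need roughly 19 ms of the 33 ms available per frame.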

The following table outlines performance of the Coral USB Accelerator on various models.

* Desktop CPU: 64-bit Intel(R) Xeon(R) E5-1650 v4 @ 3.60GHz

** Embedded CPU: Quad-core Cortex-A53 @ 1.5GHz

† Dev Board: Quad-core Cortex-A53 @ 1.5GHz + Edge TPU

All tested models were trained using the ImageNet dataset with 1,000 classes and an input size of 224x224, except for Inception v4 which has an input size of 299x299.

Get started with Coral and TensorFlow Lite

Coral is a new platform, but it’s designed to work seamlessly with TensorFlow. To bring TensorFlow models to Coral you can use TensorFlow Lite, a toolkit for running machine learning inference on edge devices including the Edge TPU, mobile phones, and microcontrollers.

Pre-trained models

The Coral website provides pre-trained TensorFlow Lite models that have been optimized for use with Coral hardware. If you’re just getting started, you can download a model, deploy it to your device, and immediately begin running image classification or object detection for various classes of objects using Coral’s API demo scripts.

Retrain a model

You can customize Coral’s pre-trained machine learning models to recognize your own images and objects, using a process called transfer learning. To do this, follow the instructions in Retrain an existing model.

Build your own TensorFlow model

If you have an existing TensorFlow model, or you’d like to train one from scratch, you can follow the steps in Build a new model for the Edge TPU. Note that your model must meet the Model requirements.

To prepare your model for the Edge TPU, you’ll first convert and optimize it for edge devices with the TensorFlow Lite Converter. You’ll then compile the model for the Edge TPU with the Edge TPU Model Compiler.

Quantization and optimization

The Edge TPU chips that power Coral hardware are designed to work with models that have been quantized, meaning their underlying data has been compressed in a way that results in a smaller, faster model with minimal impact on accuracy.
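To give a feel for what quantization does to the underlying numbers, here is a minimal pure-Python sketch of 8-bit affine quantization, where each float is stored as an integer plus a shared scale and zero point. It illustrates the general idea only and is not the TensorFlow Lite Converter's actual procedure. The function names and sample weights are made up for this example.

```python
def quantize(values, num_bits=8):
    """Map floats to unsigned integers using a scale and a zero point."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Extend the range to include 0.0 so that zero is represented exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero input
    zero_point = round(qmin - lo / scale)
    quantized = [
        min(qmax, max(qmin, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate floats from the integer representation."""
    return [scale * (q - zero_point) for q in quantized]


# Made-up weights standing in for one small tensor of a trained model.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]

q_weights, scale, zero_point = quantize(weights)
restored = dequantize(q_weights, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("quantized:", q_weights)
print("largest round-trip error:", max_error)
```

Each weight now fits in one byte instead of four, and the round-trip error stays within one quantization step, which is why accuracy usually changes only slightly.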

The TensorFlow Lite Converter can perform quantization on any trained TensorFlow model. You can read more about this technique in Post-training quantization.

The following code snippet shows how simple it is to convert and quantize a model using TensorFlow Lite nightly and TensorFlow 2.0 alpha:

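```python
# Load TensorFlow and pathlib (used to write the output file)
import pathlib

import tensorflow as tf

# Hypothetical paths: point these at your own SavedModel directory and output file
saved_model_dir = "path/to/saved_model"
output_file = pathlib.Path("model_quant.tflite")

# Set up the converter
# (later TensorFlow releases deprecate OPTIMIZE_FOR_SIZE in favor of tf.lite.Optimize.DEFAULT)
converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

# Perform conversion and write the quantized model to disk
tflite_quant_model = converter.convert()
output_file.write_bytes(tflite_quant_model)
```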
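Once a `.tflite` file exists, a quick sanity check is to load it with the TensorFlow Lite interpreter and run a single inference. The sketch below does this on the CPU with a zero-filled input. It does not use the Edge TPU, which additionally requires the compiled model and Coral's runtime. The function name `run_inference_once` and the file name in the usage note are hypothetical.

```python
def run_inference_once(model_path):
    """Run one inference on a zero-filled input and return the raw output array."""
    # Imported inside the function so this sketch can be loaded on machines
    # that do not have NumPy or TensorFlow installed.
    import numpy as np
    import tensorflow as tf

    # Load the converted model and allocate memory for its tensors.
    interpreter = tf.lite.Interpreter(model_path=str(model_path))
    interpreter.allocate_tensors()

    # Assumption: the model has a single input and a single output tensor.
    input_detail = interpreter.get_input_details()[0]
    output_detail = interpreter.get_output_details()[0]

    # Build a dummy input with the shape and dtype the model expects.
    dummy_input = np.zeros(input_detail["shape"], dtype=input_detail["dtype"])
    interpreter.set_tensor(input_detail["index"], dummy_input)

    interpreter.invoke()
    return interpreter.get_tensor(output_detail["index"])
```

For example, `run_inference_once("model_quant.tflite")` would return the model's output for an all-zero input, which is enough to confirm that the file loads and runs before moving on to real data.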

Next steps

We’ve heard how you can use Coral and TensorFlow Lite to build brand new experiences that bring AI to the edge. If you’re looking to get started, here’s how:

Feeling inspired? We’re excited to see what our community builds with Coral and TensorFlow Lite! Share your ideas on Twitter with the hashtags #withcoral and #poweredbyTF.