March 06, 2019

Posted by Alex Ingerman (Product Manager) and Krzys Ostrowski (Research Scientist)

There are an estimated 3 billion smartphones in the world, and 7 billion connected devices. These phones and devices are constantly generating new data. Traditional analytics and machine learning need that data to be centrally collected before it is processed to yield insights, ML models and ultimately better produ…

|

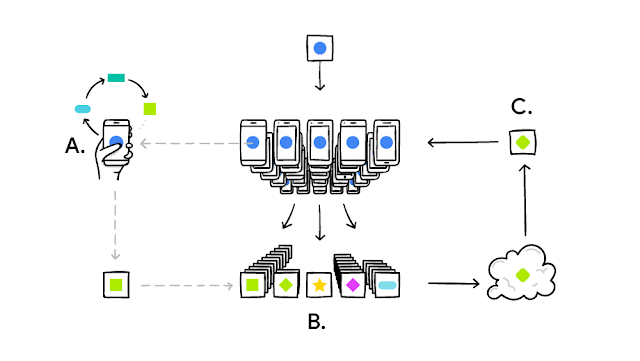

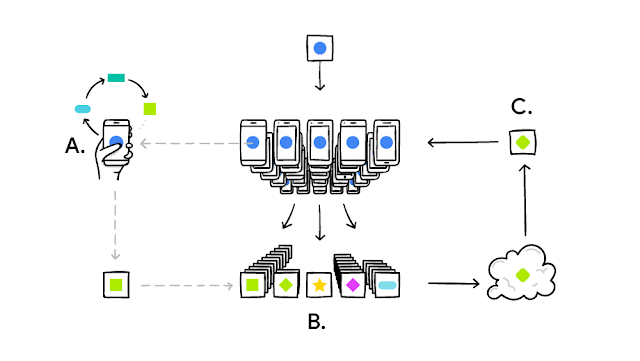

| TensorFlow Federated enables developers to express and simulate federated learning systems. Pictured here, each phone trains the model locally (A). Their updates are aggregated (B) to form an improved shared model (C). |
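The three steps in the caption can be sketched without any framework. The following is a minimal pure-Python illustration, not TFF code: it assumes a one-parameter model `y ≈ w * x`, and the helper names `local_train` and `federated_round`, the learning rate, and the client data are all hypothetical choices made for the example.

```python
from typing import List, Tuple

Example = Tuple[float, float]  # one (x, y) training pair


def local_train(w: float, data: List[Example], lr: float = 0.1, steps: int = 5) -> float:
    """Step A: a client refines the shared weight on its own data only."""
    for _ in range(steps):
        # Gradient of the mean squared error of y ≈ w * x with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w: float, clients: List[List[Example]]) -> float:
    """Steps B and C: average the client results, weighted by example count."""
    updates = [(local_train(w, data), len(data)) for data in clients]
    total = sum(n for _, n in updates)
    return sum(w_k * n for w_k, n in updates) / total


# Hypothetical data: three clients whose examples all follow y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(0.5, 1.0)],
    [(1.5, 3.0), (1.0, 2.0), (0.5, 1.0)],
]

w = 0.0
for _ in range(20):
    w = federated_round(w, clients)
print(round(w, 3))
```

Only the locally trained weights leave each client; the raw `(x, y)` pairs never reach the averaging step, which is the property the figure illustrates.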

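    import tensorflow as tf
    import tensorflow_federated as tff
    # Assumption: `mnist` is the example helper module from early TFF releases.
    from tensorflow_federated.python.examples import mnist

    # Load simulation data.
    source, _ = tff.simulation.datasets.emnist.load_data()

    def client_data(n):
        dataset = source.create_tf_dataset_for_client(source.client_ids[n])
        return mnist.keras_dataset_from_emnist(dataset).repeat(10).batch(20)

    # Assumption: `train_data` is not defined in the snippet as extracted;
    # here three simulated clients are selected for training.
    train_data = [client_data(n) for n in range(3)]

    # Assumption: `sample_batch` is likewise reconstructed (requires eager mode).
    sample_batch = tf.nest.map_structure(
        lambda x: x.numpy(), next(iter(train_data[0])))

    # Wrap a Keras model for use with TFF.
    def model_fn():
        return tff.learning.from_compiled_keras_model(
            mnist.create_simple_keras_model(), sample_batch)

    # Simulate a few rounds of training with the selected client devices.
    trainer = tff.learning.build_federated_averaging_process(model_fn)
    state = trainer.initialize()
    for _ in range(5):
        state, metrics = trainer.next(state, train_data)
        print(metrics.loss)

… (tf.float32) and where that data lives (on distributed clients).

    READINGS_TYPE = tff.FederatedType(tf.float32, tff.CLIENTS)

    # Later TFF releases name this operator tff.federated_mean.
    @tff.federated_computation(READINGS_TYPE)
    def get_average_temperature(sensor_readings):
        return tff.federated_average(sensor_readings)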

An illustration of the get_average_temperature federated computation expression.
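To make the idea of data "placed" on clients concrete, here is a small plain-Python sketch of what such a computation does conceptually. It does not use TFF: the `FederatedValue` class, the `CLIENTS` and `SERVER` string constants, and the sample readings are hypothetical stand-ins introduced only for this illustration.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical placement labels; these are plain strings, not TFF objects.
CLIENTS = "clients"
SERVER = "server"


@dataclass(frozen=True)
class FederatedValue:
    """A value together with where it lives: one entry per participant."""
    placement: str
    values: Tuple[float, ...]


def federated_average(value: FederatedValue) -> FederatedValue:
    """Reduce a client-placed value to a single server-placed mean."""
    if value.placement != CLIENTS:
        raise TypeError("federated_average expects a client-placed value")
    mean = sum(value.values) / len(value.values)
    return FederatedValue(SERVER, (mean,))


def get_average_temperature(sensor_readings: FederatedValue) -> FederatedValue:
    return federated_average(sensor_readings)


# Three sensors, each holding one local reading.
readings = FederatedValue(CLIENTS, (68.5, 70.3, 69.8))
average = get_average_temperature(readings)
print(average.placement, average.values[0])
```

The input is a collection with one reading per device, and the output is a single number that exists only on the server. Checking the placement before averaging mirrors, loosely, the role the declared type plays in the federated computation above.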

    # DATASET_TYPE, MODEL_TYPE, and local_train are assumed to be defined elsewhere.
    @tff.federated_computation(
        tff.FederatedType(DATASET_TYPE, tff.CLIENTS),
        tff.FederatedType(MODEL_TYPE, tff.SERVER, all_equal=True),
        tff.FederatedType(tf.float32, tff.SERVER, all_equal=True))
    def federated_train(client_data, server_model, learning_rate):
        return tff.federated_average(
            tff.federated_map(local_train, [
                client_data,
                tff.federated_broadcast(server_model),
                tff.federated_broadcast(learning_rate)]))
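The data flow in `federated_train` (broadcast, then map, then average) can be traced with ordinary Python lists. The sketch below is an illustration under stated assumptions rather than TFF itself: the three operator functions are simplified stand-ins that share names with the TFF operators, the broadcast takes an explicit client count, and `local_train` is a hypothetical single gradient step on a scalar model `y ≈ w * x`.

```python
from typing import Callable, List, Sequence, Tuple

Example = Tuple[float, float]  # one (x, y) training pair


def federated_broadcast(server_value: float, num_clients: int) -> List[float]:
    """Stand-in: copy one server-side value to every client."""
    return [server_value] * num_clients


def federated_map(fn: Callable, client_args: Sequence[Sequence]) -> List:
    """Stand-in: call fn once per client with that client's arguments."""
    return [fn(*args) for args in zip(*client_args)]


def federated_average(client_values: Sequence[float]) -> float:
    """Stand-in: unweighted mean of the per-client values."""
    return sum(client_values) / len(client_values)


def local_train(data: List[Example], model: float, learning_rate: float) -> float:
    """Hypothetical local step: one gradient-descent update of the weight."""
    grad = sum(2 * (model * x - y) * x for x, y in data) / len(data)
    return model - learning_rate * grad


def federated_train(client_data, server_model, learning_rate):
    n = len(client_data)
    return federated_average(
        federated_map(local_train, [
            client_data,
            federated_broadcast(server_model, n),
            federated_broadcast(learning_rate, n)]))


# Two clients, one example each, both consistent with y = 2x.
client_data = [[(1.0, 2.0)], [(2.0, 4.0)]]
new_model = federated_train(client_data, 0.0, 0.1)
print(new_model)
```

Each client receives the same model and learning rate but pairs them with its own dataset, so `local_train` runs once per client and only the resulting models are averaged.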
