https://blog.tensorflow.org/2019/08/introducing-tf-gan-lightweight-gan.html?hl=ca

https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEggpaQy9tuYlHQN4hPJnqbI_fBrFxuZNKFPtQi6ow7dxdA-cst6dF5WoJhjG-V2_S425k6V0SJEBwWVIibHlCR6xbMlr8dTpiUnZLHbd6skRhrFOXNzZN0aIz3MQVMChBVuNLwNKgQtIos/s1600/tfg1.png

Posted by Joel Shor and Yoel Drori (Google Research Tel Aviv), Aaron Sarna (Google Research Cambridge), David Westbrook (Google, New York), and Paige Bailey (Google, Mountain View)

The software libraries we use for machine learning are often essential to the success of our research, and it’s important for our libraries to be updated at a rate that reflects the fast pace of machine learning research.

In 2017 we announced TF-GAN, a lightweight library for training and evaluating Generative Adversarial Networks (GANs). Since that time, TF-GAN has been used in a number of influential papers and projects.

Today, we announce a new version of TF-GAN. It has a number of upgrades and new features:

- Cloud TPU support: You can now use TF-GAN to train GANs on Google’s Cloud TPUs. Tensor Processing Units (TPUs) are Google’s custom-developed application-specific integrated circuits (ASICs) used to accelerate machine learning workloads. Models that previously took weeks to train on other hardware platforms can converge in hours on TPUs. This open source example demonstrates training an image-generation GAN on ImageNet on TPU, which we discuss in more detail below. You can also run a TF-GAN on TPU tutorial in Colaboratory for free.

- Self-study GAN course: Machine learning works best when knowledge is freely available. To that end, we’ve released a self-study GAN course based on the GAN courses already taught internally at Google for years. Watching the videos, reading the descriptions, working through the exercises, and doing the code examples are good steps on your road to ML mastery.

- GAN metrics: Academic papers sometimes “invent a ruler”, then use it to measure their results. To facilitate comparing results across papers, TF-GAN has made using standard metrics even easier. In addition to correcting for numerical precision and statistical biases that sometimes plague even the standard open-source implementations, TF-GAN metrics are computationally efficient and syntactically easy to use.

- Examples: GAN research is incredibly fast-paced. While TF-GAN doesn’t intend to keep working examples of all GAN models, we have added some that we think are relevant, including a Self-Attention GAN that trains on TPU.

- PyPI package: TF-GAN can now be installed with `pip install tensorflow-gan` and used with `import tensorflow_gan as tfgan`. Simple, right?

- Colaboratory tutorials: We’ve improved our tutorials. They can now be used with Google’s free GPUs and TPUs.

- GitHub Repository: TF-GAN is now in its own repository. This makes it easier to track changes and properly give credit to open-source contributors.

- TensorFlow 2.0: TF-GAN is currently TF 2.0 compatible, but we’re continuing to make it compatible with Keras. You can find some GAN Keras examples that don’t use TF-GAN at tensorflow.org, including DCGAN, Pix2Pix, and CycleGAN.

Projects using TF-GAN

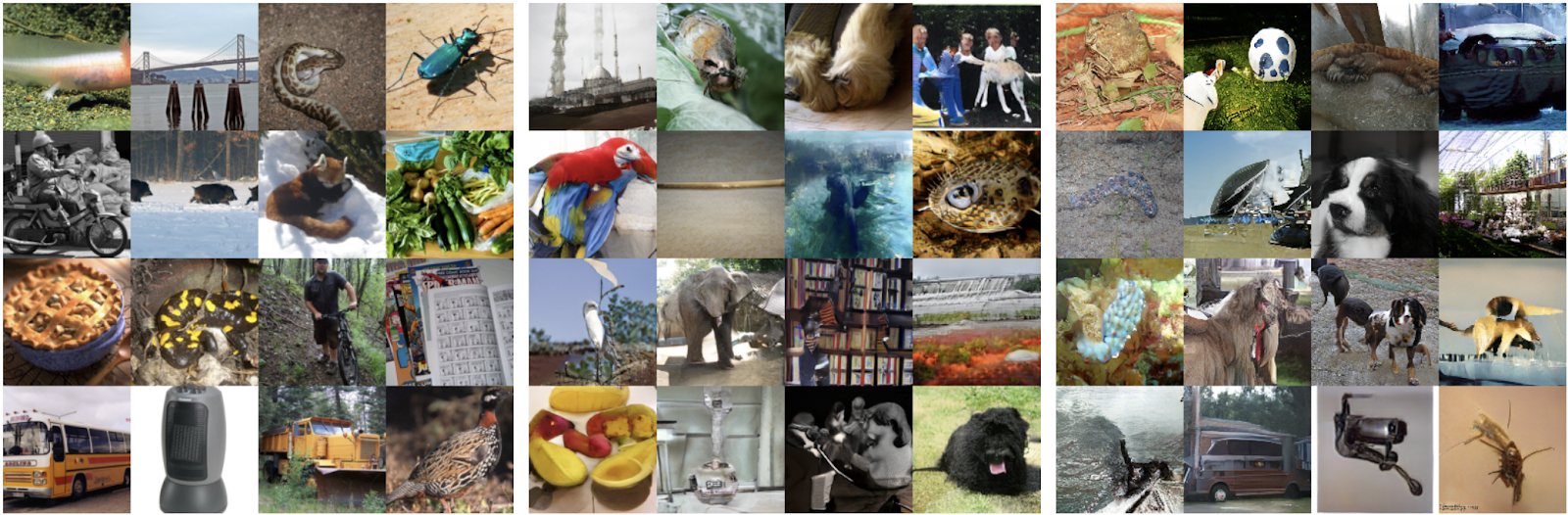

Self-Attention GAN on Cloud TPUs

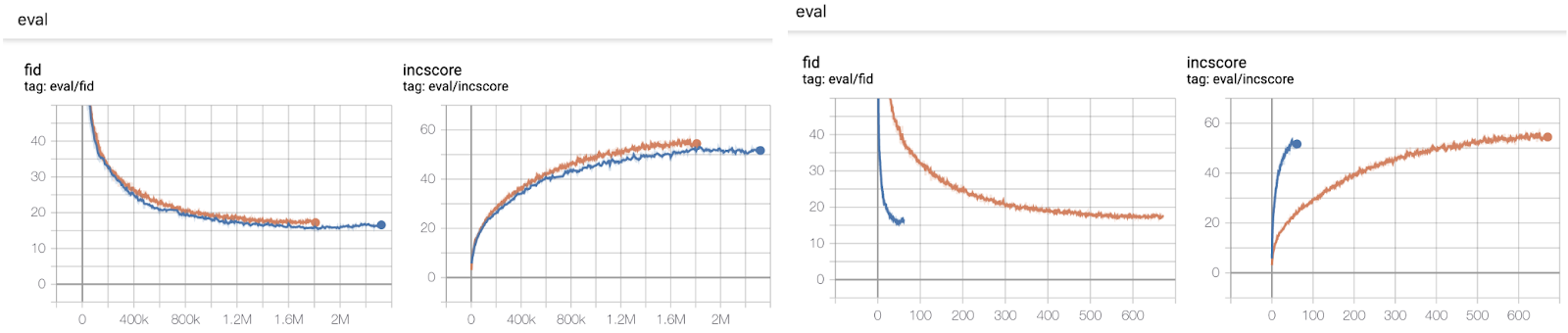

Self-attention GANs achieved state-of-the-art results on image generation using two metrics, the Inception Score and the Fréchet Inception Distance. We open sourced two versions of this model, one of which runs on Cloud TPUs. The TPU version performs the same as the GPU one, but trains 12 times faster.
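To make the first of these metrics concrete, here is a minimal sketch of the Inception Score, written directly from its standard definition: the exponential of the average KL divergence between each image's predicted class distribution and the marginal class distribution. It is not TF-GAN's implementation. The function name `inception_score` and the toy inputs are assumptions for illustration, and in practice the probabilities would come from a pretrained Inception classifier rather than hand-written lists.

```python
import math


def inception_score(probs):
    """Inception Score from per-image class probabilities.

    probs: list of rows, each a probability distribution p(y|x) over classes.
    Returns exp(mean over x of KL(p(y|x) || p(y))), where p(y) is the mean row.
    """
    n = len(probs)
    num_classes = len(probs[0])
    # Marginal class distribution p(y), averaged over all images.
    marginal = [sum(row[k] for row in probs) / n for k in range(num_classes)]
    total_kl = 0.0
    for row in probs:
        # KL(p(y|x) || p(y)); terms with p(y|x) == 0 contribute nothing.
        total_kl += sum(
            p * math.log(p / marginal[k]) for k, p in enumerate(row) if p > 0.0
        )
    return math.exp(total_kl / n)


# Toy inputs (hypothetical): confident, diverse predictions vs. uninformative ones.
sharp = [[1.0, 0.0], [0.0, 1.0]]
flat = [[0.5, 0.5], [0.5, 0.5]]
print(inception_score(sharp), inception_score(flat))
```

A higher score is better: it rewards generators whose samples are individually recognizable and collectively varied. The score is bounded below by 1 and above by the number of classes.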

Left: Real. Middle: Generated (GPU). Right: Generated (TPU).

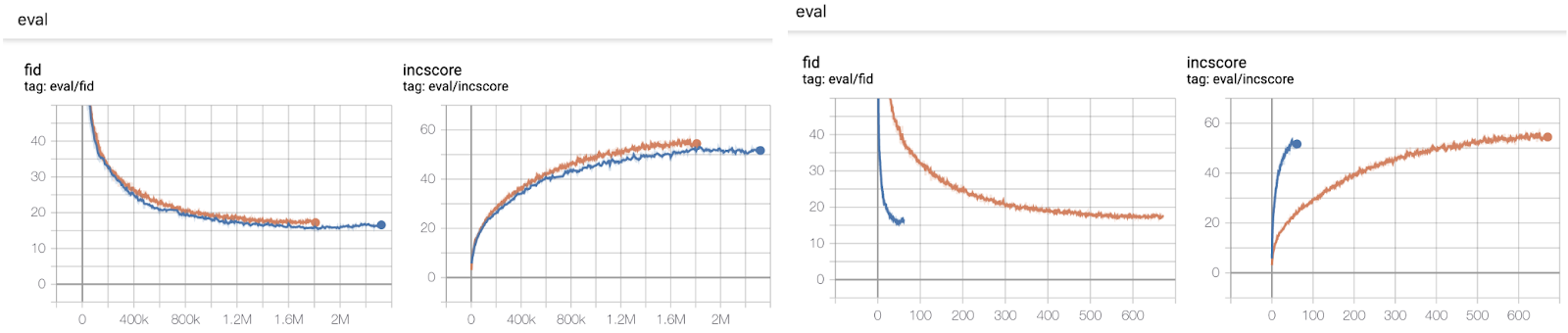

Left: Fréchet Inception Distance and Inception Score as a function of training step. Right: Fréchet Inception Distance and Inception Score as a function of training time.

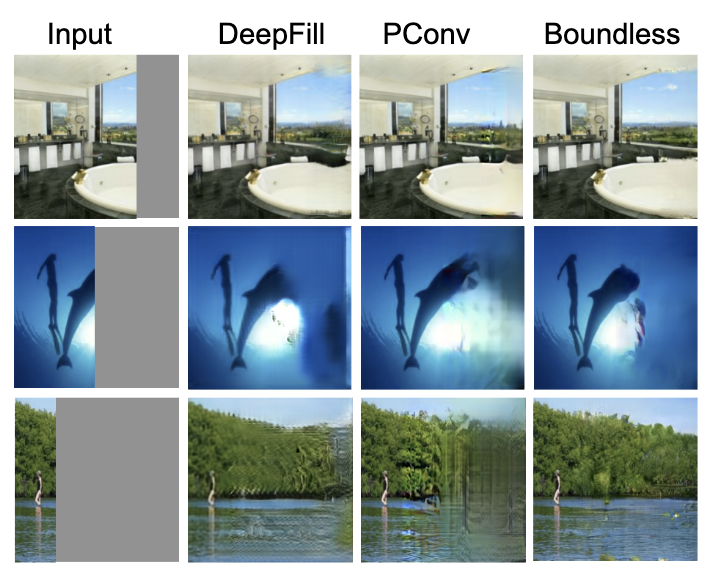
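The Fréchet Inception Distance tracked in these curves compares two sets of classifier activations by fitting a Gaussian to each and measuring the Fréchet distance between them. The sketch below uses NumPy and follows the textbook formula. It is not TF-GAN's implementation. The function name `frechet_distance` and the random toy activations are assumptions for illustration, and real use would feed in activations from a pretrained Inception network.

```python
import numpy as np


def frechet_distance(acts_real, acts_gen):
    """Frechet distance between Gaussians fitted to two activation sets.

    acts_real, acts_gen: arrays of shape [num_samples, feature_dim].
    Computes ||mu_r - mu_g||^2 + Tr(C_r + C_g - 2 (C_r C_g)^(1/2)).
    """
    mu_r, mu_g = acts_real.mean(axis=0), acts_gen.mean(axis=0)
    cov_r = np.atleast_2d(np.cov(acts_real, rowvar=False))
    cov_g = np.atleast_2d(np.cov(acts_gen, rowvar=False))
    # Tr((C_r C_g)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of C_r @ C_g, which are real and non-negative for
    # positive semi-definite inputs (up to numerical noise, hence the clip).
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_r - mu_g) ** 2)
    return float(mean_term + np.trace(cov_r) + np.trace(cov_g) - 2.0 * trace_sqrt)


# Toy activations (hypothetical): the second set is the first shifted by 3
# along one feature, so only the mean term contributes.
rng = np.random.default_rng(0)
real = rng.normal(size=(500, 4))
shifted = real + np.array([3.0, 0.0, 0.0, 0.0])
print(frechet_distance(real, real), frechet_distance(real, shifted))
```

Lower is better here: identical activation statistics give a distance of zero, and the distance grows as the means or covariances of the two sets drift apart.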

Image Extension

Image inpainting, where a missing part of an image is filled in based on surrounding context, is a well-studied problem. The related problem, image extension, is less explored. Image extension requires that an algorithm extend an image outside its boundaries in a plausible and consistent way. This is useful in virtual reality environments, where it is often necessary to simulate different camera characteristics, and in computational photography applications such as panorama stitching, where different images need to be smoothly stitched together. Google research engineers recently developed a new algorithm that extends images with fewer artifacts than previous methods, and trained it using TPUs.

We took drone footage of the beautiful Charles River and applied our uncrop technique (to appear at ICCV 2019) to expand the field of view:

Some examples of image extension: The new method, using TF-GAN, is in the right column. It generates better object shapes (top/middle rows) and produces good textures (middle/bottom rows), compared with two state-of-the-art inpainting methods: DeepFill and PConv. The input image is extended onto the masked area (shown in gray, left column).

BigGAN

The DeepMind research team improved state-of-the-art image generation in this paper, using a combination of architectural changes, a larger network, larger batch sizes, and Google TPUs. They used TF-GAN’s evaluation module to standardize their metrics, and were able to demonstrate quality improvements across a wide range of image sizes. Pretrained BigGAN generators are available on TF Hub.

GANSynth

Researchers used TF-GAN to create GANSynth, a GAN neural network capable of producing musical notes. The notes are more realistic than those produced by previous work. Due to the GAN latent space, GANSynth is able to generate the same note while smoothly interpolating between other properties such as instrument.
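The idea of holding the note fixed while moving through the latent space can be sketched in a few lines. This is only an illustration of latent interpolation in general, assuming plain linear interpolation and a pitch-conditioned generator. GANSynth's actual conditioning and interpolation scheme are not described here, and `interpolate_latents`, `toy_generator`, and the pitch value 60 are all hypothetical stand-ins.

```python
def interpolate_latents(z_start, z_end, num_steps):
    """Linearly interpolate between two latent vectors, endpoints included."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    path = []
    for i in range(num_steps):
        t = i / (num_steps - 1)
        path.append([(1.0 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path


def toy_generator(z, pitch):
    """Hypothetical stand-in for a pitch-conditioned generator.

    A real generator would return audio; this one returns the fixed pitch
    and a single number derived from z, standing in for timbre.
    """
    return {"pitch": pitch, "timbre": sum(z) / len(z)}


# Two latent points (assumed to correspond to two different instruments).
z_a = [0.0, 0.0, 0.0, 0.0]
z_b = [1.0, 2.0, 3.0, 4.0]

# The pitch conditioning stays constant while the latent vector moves.
notes = [toy_generator(z, pitch=60) for z in interpolate_latents(z_a, z_b, 5)]
for note in notes:
    print(note)
```

Every generated item keeps the same pitch, while the latent-derived property changes in small, even steps from one endpoint to the other.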

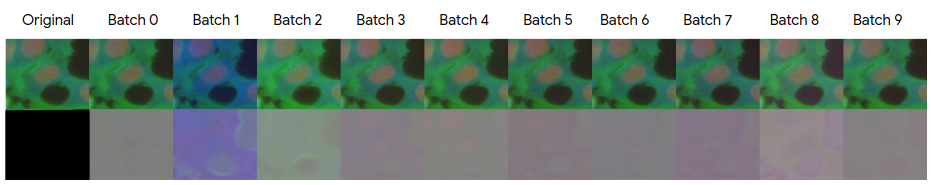

Microscope image normalization with GANs

Science experiments on lab-grown cells are usually conducted over many weeks. During that long timeframe, laboratory conditions can accidentally change. This can make microscope-based cell images vary dramatically from week to week, even if the underlying cells are the same, which is bad for later analysis. Scientists at Google used TF-GAN to create an “Equalizer” to mediate unwanted changes on cell images while preserving the relevant properties.

The image shows the output of the Equalizer applied to one cell slide image morphed into each of the 9 weeks of the experiment. Some slide properties are changed, such as average background color, but properties like ‘number of cells in the image’ remain the same.