https://blog.tensorflow.org/2019/09/body-movement-recognition-in-smart-baduanjin-app.html?hl=hi

https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhNJO-XX9UV5EhidnvBsyoaGZkRxOwgUe1_moxSIzJGZHNEE2GRjB6ncaankTEKSycYekPI9Lnr8_Rdljydx9rDFKsjNL3kBK5j92BWyQHPilqT49RrBuHlOxQ4tQ8ivty-c3dAf0YOrRQ/s1600/pic1.png

Guest post by Keith Chan and Vincent Zhang from OliveX Engineering team

Introduction

OliveX is a Hong Kong-based company focused on fitness-related software, and it has served more than 2 million users since we first launched in 2018. Many of our users are elderly, and our Baduanjin app helps them practice Baduanjin while minimizing the risk of injury. To achieve that, our app uses the latest artificial intelligence technology to automatically detect the Baduanjin moves users are practicing and give them corresponding feedback.

Goals and requirements

Baduanjin is a popular exercise that consists of eight kinds of limb movements combined with controlled breathing. Precise movement and control are critical to Baduanjin, which benefits both physical and mental health.

With the “Smart Baduanjin” app, users can find out whether they are performing the moves correctly, because the app uses AI to track their movements. By leveraging the latest machine learning technology, we hope to replace the traditional learning approach, in which users simply follow an exercise video, with a more enjoyable interactive experience in which users get real-time feedback on their body movements. We also hope these features can help the elderly practice Baduanjin more effectively and reduce their risk of injury.

After analyzing and prioritizing our goals, we defined the overall product requirements:

- Our users usually perform Baduanjin outdoors, so our product needs to be ‘mobile’

- When users practice Baduanjin, they usually follow a demo video and imitate a coach’s movements, so our product should be able to play videos with sound

- To provide valuable real time feedback to our users, we need to capture user body movements with the front-facing camera

Specifically regarding the body movement recognition, we want our algorithms to be able to do the following:

- Recognize each Baduanjin movement in the overall sequence

- Give scores based on the correctness of a user’s body movements

- Provide correctional guidance on unsatisfactory movements
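The post does not describe how scores or corrections are computed. As a purely hypothetical sketch of the last two requirements, one could compare joint angles between a user’s pose and a reference pose from the coach video: each angle earns partial credit that falls to zero at an assumed tolerance (`tolerance_deg`), and the angle with the largest error is a natural candidate for a correction hint. The angle set, tolerance, and function names are our own assumptions; only the keypoint names follow PoseNet’s conventions.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Pose = Dict[str, Point]  # PoseNet keypoint name -> (x, y)

# Hypothetical angles to check: name -> (end joint, vertex joint, end joint).
ANGLES = {
    "left_elbow": ("leftShoulder", "leftElbow", "leftWrist"),
    "right_elbow": ("rightShoulder", "rightElbow", "rightWrist"),
    "left_shoulder": ("leftHip", "leftShoulder", "leftElbow"),
    "right_shoulder": ("rightHip", "rightShoulder", "rightElbow"),
}


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at vertex b between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return 0.0  # degenerate case: two keypoints coincide
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos = max(-1.0, min(1.0, cos))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(cos))


def score_pose(user: Pose, reference: Pose,
               tolerance_deg: float = 45.0) -> Tuple[float, Dict[str, float], str]:
    """Return (score 0..100, signed angle error per joint, joint with largest error)."""
    errors: Dict[str, float] = {}
    credit = 0.0
    for name, (a, b, c) in ANGLES.items():
        err = joint_angle(user[a], user[b], user[c]) - joint_angle(reference[a], reference[b], reference[c])
        errors[name] = err
        # Full credit for a perfect match, falling linearly to zero at the tolerance.
        credit += max(0.0, 1.0 - abs(err) / tolerance_deg)
    worst = max(errors, key=lambda k: abs(errors[k]))
    return 100.0 * credit / len(ANGLES), errors, worst
```

A real product would likely compare whole sequences rather than single frames and tune the tolerance per movement; this only illustrates the idea of turning keypoints into a score and a hint.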

Technical analysis

ML framework selection

Based on the requirements above, we needed to select the right deep learning framework to implement our project. In particular, we were looking for a framework that has:

- Strong support for mobile devices and can run smoothly, even on mid- to low-end smartphones

- A friendly API design and rich debugging tools

- Mature community support and resources available

After comprehensive investigation and evaluation, we found that TensorFlow Lite satisfied our needs and requirements. In addition, Google open sourced PoseNet, a model specifically designed for detecting human body poses, and provided demo code based on TensorFlow.js (EDITOR: we have recently released a PoseNet sample based on TensorFlow Lite). This open source code not only helped us finish the initial work on human body pose recognition, but also convinced us that our action recognition algorithm could work on mobile devices, since PoseNet performed so well in JavaScript.

Algorithms

Body movement recognition

In the initial stage of our development, we investigated existing body movement recognition algorithms. Most mainstream algorithms today analyze sequences of video frames. Although these algorithms could meet our requirements, their networks are fairly complicated and running inference on them consumes a lot of computational resources. Because one of our hard requirements was running the model on mobile devices, we had to make a tradeoff between accuracy and performance.

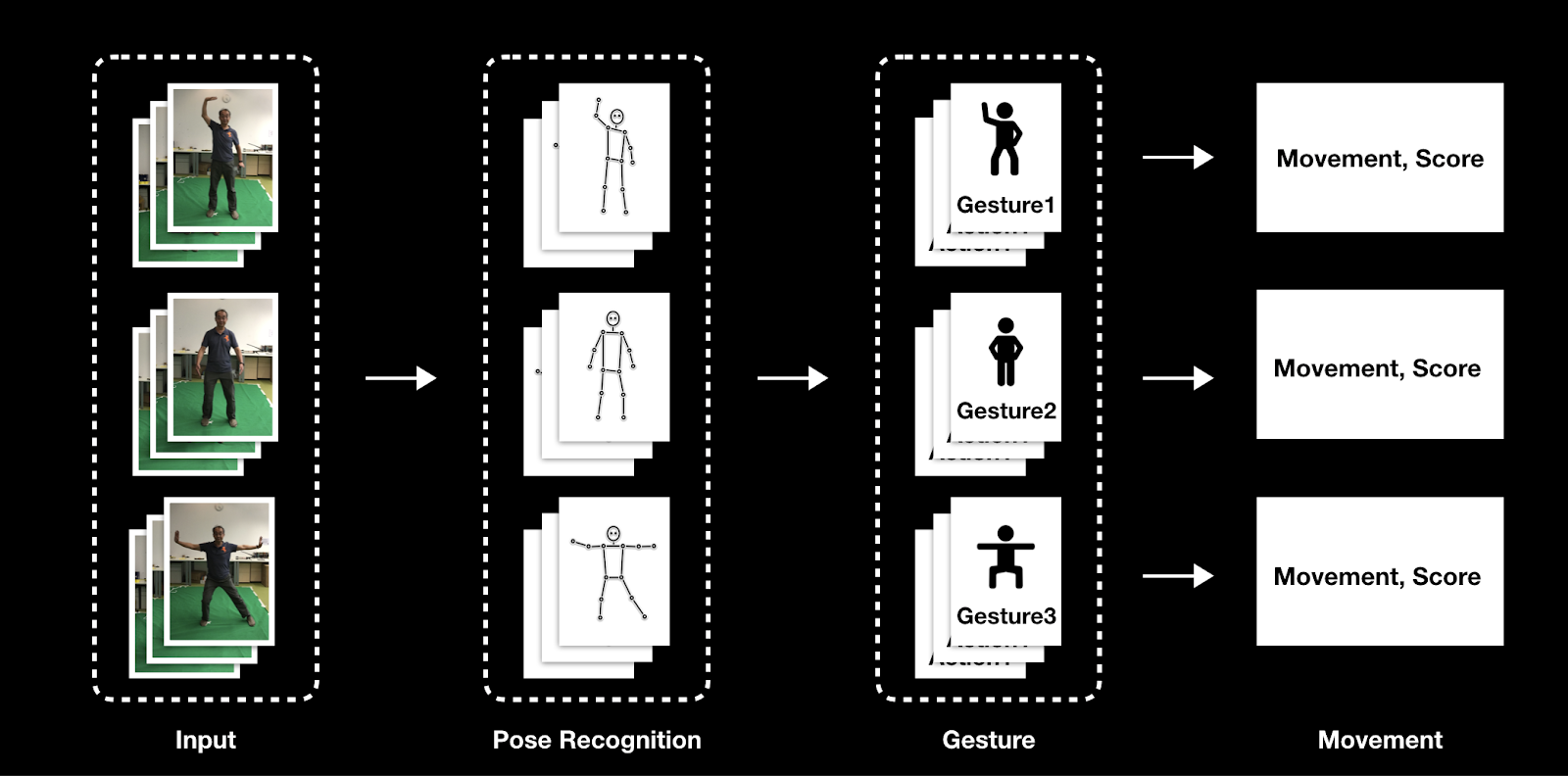

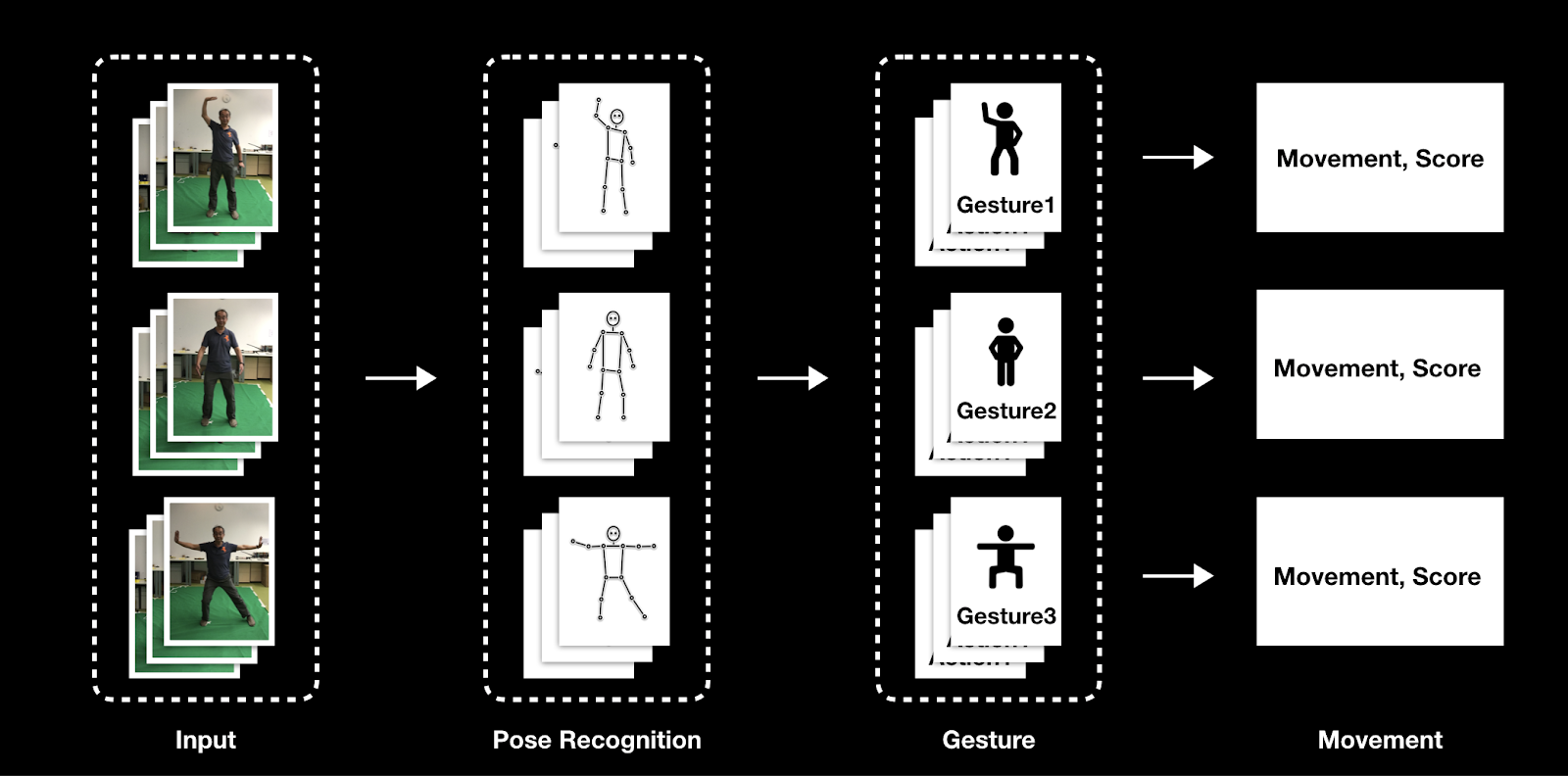

Our approach was to first extract key human body joints with PoseNet, and then recognize specific actions from the sequence of body joint movements. Since PoseNet outputs only 17 body joints per frame, the action classifier works with far less data than a full-size image, which significantly reduces the amount of computation. The workflow of our algorithm is as follows: first we used PoseNet to extract body joint data from the input video, and then we classified actions based on that body joint data.
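To make the second stage concrete, here is a minimal sketch of how PoseNet’s 17 keypoints per frame could be turned into fixed-size classifier inputs. The post does not say how OliveX built its features; normalizing by hip midpoint and torso length, and flattening sliding windows of frames, are our own assumptions. The keypoint names and order follow the TensorFlow.js PoseNet model.

```python
import math
from typing import List, Sequence, Tuple

# PoseNet's 17 keypoints, in the order the TensorFlow.js PoseNet model reports them.
KEYPOINTS = [
    "nose", "leftEye", "rightEye", "leftEar", "rightEar",
    "leftShoulder", "rightShoulder", "leftElbow", "rightElbow",
    "leftWrist", "rightWrist", "leftHip", "rightHip",
    "leftKnee", "rightKnee", "leftAnkle", "rightAnkle",
]
IDX = {name: i for i, name in enumerate(KEYPOINTS)}

Frame = Sequence[Tuple[float, float]]  # 17 (x, y) pixel coordinates in KEYPOINTS order


def _midpoint(a: Tuple[float, float], b: Tuple[float, float]) -> Tuple[float, float]:
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0)


def normalize_frame(frame: Frame) -> List[Tuple[float, float]]:
    """Center on the hip midpoint and divide by torso length, so features do not
    depend on where the user stands or how far they are from the camera."""
    hip = _midpoint(frame[IDX["leftHip"]], frame[IDX["rightHip"]])
    shoulder = _midpoint(frame[IDX["leftShoulder"]], frame[IDX["rightShoulder"]])
    torso = math.hypot(shoulder[0] - hip[0], shoulder[1] - hip[1]) or 1.0  # avoid division by zero
    return [((x - hip[0]) / torso, (y - hip[1]) / torso) for x, y in frame]


def window_features(frames: Sequence[Frame], window: int, stride: int) -> List[List[float]]:
    """Flatten each run of `window` consecutive normalized frames into one feature
    vector of length window * 17 * 2, advancing `stride` frames each time."""
    norm = [normalize_frame(f) for f in frames]
    features: List[List[float]] = []
    for start in range(0, len(norm) - window + 1, stride):
        vec: List[float] = []
        for frame in norm[start:start + window]:
            for x, y in frame:
                vec.extend((x, y))
        features.append(vec)
    return features
```

Each vector has only 17 × 2 numbers per frame, which illustrates why a classifier on keypoints is so much cheaper than one on raw pixels.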

Key actions

After deciding on the technical approach, we needed to define the key body movements the app must recognize. To do this, we turned body movement recognition into a typical machine learning classification problem. Below is a sample of how we defined the key Baduanjin movements:

We trained a traditional deep neural network to classify user body movements. After several iterations of hyperparameter tuning and training data optimization/augmentation, our final model had good accuracy and was able to satisfy our product requirements, as shown below.
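A classifier like this emits one label per window of frames, while the first requirement is to recognize each movement in the overall sequence. One hypothetical way to bridge the two, not described in the post, is to collapse runs of identical labels into segments, drop runs shorter than an assumed `min_len` as noise, and re-join runs of the same movement split by a short glitch. The `"none"` background label and the movement names are placeholders.

```python
from itertools import groupby
from typing import List, Sequence, Tuple

Segment = Tuple[str, int, int]  # (label, start window index, end window index exclusive)


def segment_moves(labels: Sequence[str], min_len: int, background: str = "none") -> List[Segment]:
    """Turn per-window classifier labels into discrete movement segments."""
    runs: List[Segment] = []
    pos = 0
    for label, group in groupby(labels):
        n = sum(1 for _ in group)
        # Skip background windows and runs too short to be a real movement.
        if label != background and n >= min_len:
            runs.append((label, pos, pos + n))
        pos += n

    merged: List[Segment] = []
    for label, start, end in runs:
        # Re-join two runs of the same movement separated by a short noisy gap.
        if merged and merged[-1][0] == label and start - merged[-1][2] < min_len:
            merged[-1] = (label, merged[-1][1], end)
        else:
            merged.append((label, start, end))
    return merged
```

For example, a single stray `"move2"` window inside a longer `"move1"` run is discarded, and the two `"move1"` runs around it become one segment.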

Challenges on mobile devices

After finishing the deep learning model, our next step was to deploy it on iOS and Android devices. We first tried TensorFlow Mobile, but since we needed recognition results in real time, it was not a viable option: its performance did not meet this requirement.

As we were trying to solve the performance challenge, Google released TensorFlow Lite, which was a big leap from TensorFlow Mobile in terms of performance. We compare the two offerings below:

We also show the initial benchmark results on our model below:

Based on the benchmark numbers, we concluded that real-time body movement recognition wasn’t feasible on most Android devices with a 512x512 input size. To solve this problem, we profiled our machine learning models and found that PoseNet was the bottleneck, consuming 95% of the computation time. We therefore reduced PoseNet’s input size, adjusted its hyperparameters, and retrained our action recognition algorithm to compensate for the accuracy lost to the smaller input. In the end, we chose a 337x337 RGB input and a width multiplier of 0.5 for PoseNet’s MobileNet backbone on Android.
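A back-of-the-envelope check shows why optimizing PoseNet was the right lever. By Amdahl’s law, if a component takes a fraction p of the total time and its cost shrinks to a ratio r of the original, the overall speedup is 1 / ((1 − p) + p·r). The sketch below also uses a rough MobileNet cost model in which multiply-adds scale with the number of input pixels and roughly quadratically with the width multiplier. These are theoretical estimates, not measurements, and the post does not state the width multiplier used at 512x512, so the baseline `old_alpha=1.0` is an assumption.

```python
def overall_speedup(fraction: float, component_cost_ratio: float) -> float:
    """Amdahl's law: `fraction` of runtime is spent in a component whose cost
    becomes `component_cost_ratio` times its original cost."""
    return 1.0 / ((1.0 - fraction) + fraction * component_cost_ratio)


def posenet_cost_ratio(old_size: int, new_size: int,
                       old_alpha: float = 1.0, new_alpha: float = 1.0) -> float:
    """Rough MobileNet cost model: compute scales with input pixels (size^2)
    and approximately with the square of the width multiplier alpha."""
    return (new_size / old_size) ** 2 * (new_alpha / old_alpha) ** 2


# Shrinking the input alone: 512x512 -> 337x337.
size_only = overall_speedup(0.95, posenet_cost_ratio(512, 337))

# Also halving the width multiplier, ASSUMING the 512x512 baseline used alpha = 1.0.
size_and_width = overall_speedup(0.95, posenet_cost_ratio(512, 337, old_alpha=1.0, new_alpha=0.5))
```

Under these assumptions the smaller input alone gives roughly a 2.2x overall speedup, adding the 0.5 width multiplier gives roughly 6.5x, and no PoseNet optimization could exceed 20x, because the remaining 5% of the pipeline is untouched.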

Our target users are mostly elderly, so they tend to have lower-end devices. Even after tuning PoseNet’s parameters, performance was still not satisfactory. Therefore, we turned to an accelerator found in nearly every smartphone: the GPU. Around that time, Google happened to release the TensorFlow Lite GPU delegate (experimental), which saved us plenty of engineering resources.

The mobile GPU considerably accelerated our model execution. Below is our benchmark on several devices popular among our users, with and without the GPU delegate.

Since Baduanjin movements are relatively slow, especially for older users, our product runs smoothly on most devices after integrating the TensorFlow Lite GPU delegate (experimental).
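Whether a device runs “smoothly” comes down to per-frame latency versus the frame budget the app needs. The post gives no target frame rate, so `target_fps` below is an assumption; slow movements plausibly allow a lower target. This minimal timing helper works with any zero-argument inference callable and reports the median so occasional outliers do not dominate.

```python
import statistics
import time
from typing import Callable


def measure_latency_ms(run_inference: Callable[[], object], warmup: int = 5, runs: int = 50) -> float:
    """Median wall-clock latency of one inference call, in milliseconds."""
    for _ in range(warmup):
        run_inference()  # warm-up calls absorb one-time setup costs (e.g. GPU shader compilation)
    samples = []
    for _ in range(runs):
        t0 = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - t0) * 1000.0)
    return statistics.median(samples)


def is_real_time(latency_ms: float, target_fps: float) -> bool:
    """True if one inference fits within the per-frame budget at target_fps."""
    return latency_ms <= 1000.0 / target_fps
```

In practice, `run_inference` could be the `invoke` method of a `tf.lite.Interpreter` whose input tensor has already been set, timed once with and once without a GPU delegate, which mirrors the kind of comparison in the benchmark above.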

Conclusion

We successfully finished our Smart Baduanjin product on both iOS and Android, and have received positive feedback from our test users. By leveraging ML technology and TensorFlow, we provided a ‘tutorial model’ for Baduanjin beginners so that they can follow the demo video and learn the moves. For experienced Baduanjin practitioners, we provide valuable feedback, such as scores, to help them further improve their skills. ‘Smart Baduanjin’ is currently available for free in both the App Store and Google Play.

Meanwhile, OliveX is actively exploring applying human body pose estimation to other fitness exercises. We found that many other exercise practices are just like Baduanjin, in that the correctness of the practitioners’ body movements matters a lot. Correct body movements not only help people avoid bodily injury, but also increase their exercise efficiency. Thus, we want to transfer the knowledge and skills we obtained from the Baduanjin project to other domains as well.