https://blog.tensorflow.org/2019/11/handtrackjs-tracking-hand-interactions.html?hl=zh_TW

https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhzTNmDMJm5L2-wUUhbbwiGCTvh-MzhbiXghjOTyqMFo9CgsdbisYJZIzcaq3hFVyox4OWDPB0mBEQ_3SgShu6D0wJYREWguSziU3WL9nq5gcz9zmlrM_pY20uiP-E-VR2POLeKsWQL5tw/s1600/handtrackjs.gif

Handtrack.js: tracking hand interactions in the browser using TensorFlow.js and 3 lines of code

A guest post by Victor Dibia

As a researcher with interests in human computer interaction and applied machine learning, some of my work has focused on creating tools that leverage the human body as an input device for creating engaging user experiences.

As part of this process, I created the Handtrack.js library, which allows developers to track a user’s hands using bounding boxes, from an image in any orientation, in 3 lines of code. This project is a winning submission to the #PoweredByTF 2.0 Challenge. Read on to find out how it works and how you can integrate the library into your own work.

Here's an example interface built using Handtrack.js to track hands from a webcam feed. Try the demo here.

Until recently, tracking parts of the human body in real time required special 3D sensors and high-end software. This high barrier to entry made building and deploying these kinds of interactive experiences a big challenge for most developers, especially in resource-constrained environments like the web browser. Luckily, advances in machine learning and the development of fast object detection models now give us the ability to perform tracking using only images from a camera. Combining these advancements with libraries like TensorFlow.js provides an efficient framework for deploying engaging, interactive experiences, and opens doors for people to experiment with object detection and hand tracking directly in the browser. The Handtrack.js library is powered by TensorFlow.js, and gives developers the ability to quickly prototype hand and gesture interactions using a pre-trained hand detection model.

The goal of the Handtrack.js library is to abstract away the steps associated with loading the model files, provide helpful functions, and allow a user to detect hands in an image without prior ML experience. There is no need to train a model (you can if you want), and no need to export any frozen graphs or saved models. You can get started simply by including Handtrack.js in your web application and calling the library methods.

How was Handtrack.js built?

|

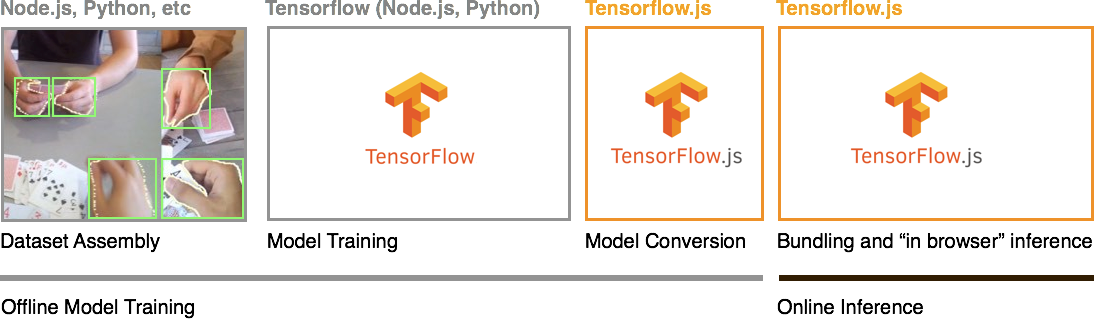

| Steps in creating a javascript library for machine learning in the browser. With Tensorflow.js, we import a model trained with Tensorflow python and bundle it into a library that can be called in web applications. |

Underneath, Handtrack.js is powered by a convolutional neural network (CNN) which performs the task of object detection: given an image, can we identify the presence of objects (hands) and their locations (bounding boxes)? It was built using tools from the TensorFlow ecosystem, mainly TensorFlow Python and TensorFlow.js. TensorFlow.js allows developers to train models (online) directly in the browser or import pretrained models (offline) for inference in the browser.

Overall, the process of building Handtrack.js can be split into 4 main steps: data assembly, model training, model conversion, and model bundling/inference. Note that the first 3 steps, which focus on training the model, can be performed offline using various backend languages (e.g. TensorFlow Python and TensorFlow Node.js). The last step, which focuses on loading the model in the browser for online inference, is implemented using TensorFlow.js.

1. Data Assembly: The Egohands dataset consists of 4800 images of the human hand with bounding box annotations in various settings (indoor, outdoor, egocentric viewpoint), captured using a Google Glass device. Each image is annotated with polygons marking the locations of hands. Each polygon is first cleaned and then converted to a bounding box.

2. Model Training: Next, an object detection model is trained using the TensorFlow Object Detection API. The SSD MobileNet architecture, which is optimized for speed and deployment in resource-constrained environments, was selected to support our low-latency objective (more details on this process here). Note that the training process is done using TensorFlow Python code and can be run on your local GPU or using cloud computing resources such as Google Compute Engine, Google ML Engine, and Cloudera Machine Learning.

3. Model Conversion: The resulting trained model is then converted into a format that can be loaded in the browser for inference. TensorFlow.js offers a model converter which enables the conversion of pretrained models to the TensorFlow.js web model format. It currently supports the following model formats: TensorFlow SavedModel, Keras model, and TensorFlow Hub modules. The Handtrack.js model is 18.5 MB after conversion and is split into 5 model weight files and a manifest file.

4. Model Bundling: This step focuses on creating a JavaScript library with main methods that load the converted model and perform inference (predictions) given an input image. Specifically, the TensorFlow.js loadGraphModel method is used to load the converted model, while the executeAsync method is used to perform inference. For ease of distribution, the library was also published on npm, so JavaScript developers who create their applications with build tools can import the library using package managers like npm or yarn. Furthermore, a minified version of the library is also available via jsdelivr (a CDN service which also automatically serves npm files).
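Steps 1 and 4 above can be sketched roughly as follows. This is an illustration under stated assumptions, not the code used to build Handtrack.js: the original data preparation was done offline, and it is shown here in JavaScript only for consistency with the rest of the post. The function names `polygonToBoundingBox` and `loadDetector`, the `[x, y]` point layout, and the `modelUrl` argument are all hypothetical; only `tf.loadGraphModel`, `executeAsync`, `tf.browser.fromPixels`, `tf.tidy`, and `expandDims` are TensorFlow.js calls. The input shape and dtype a real model expects depend on how it was exported.

```javascript
// Step 1 (illustration): reduce a polygon annotation to an axis-aligned
// bounding box. Points are assumed to be [x, y] pairs in pixel coordinates.
function polygonToBoundingBox(points) {
  if (!Array.isArray(points) || points.length === 0) {
    throw new Error('polygon must contain at least one point');
  }
  const xs = points.map(([x]) => x);
  const ys = points.map(([, y]) => y);
  const xMin = Math.min(...xs);
  const yMin = Math.min(...ys);
  return {
    x: xMin,
    y: yMin,
    width: Math.max(...xs) - xMin,
    height: Math.max(...ys) - yMin,
  };
}

// Step 4 (illustration): hide model loading and inference behind two methods.
// `tf` is the TensorFlow.js namespace, passed in so the sketch does not
// depend on how the library was loaded (script tag or npm import).
async function loadDetector(tf, modelUrl) {
  const model = await tf.loadGraphModel(modelUrl);
  return {
    // Returns the raw output tensors of the graph; decoding them into
    // boxes and scores is model-specific and left out of this sketch.
    async detectRaw(image) {
      // fromPixels yields a [height, width, 3] tensor; expandDims(0)
      // adds the batch dimension that detection graphs typically expect.
      const input = tf.tidy(() => tf.browser.fromPixels(image).expandDims(0));
      try {
        return await model.executeAsync(input);
      } finally {
        input.dispose(); // free the input tensor's memory
      }
    },
    dispose() {
      model.dispose();
    },
  };
}
```

Passing `tf` in as an argument is a design choice made for this sketch; a bundled library would more likely import TensorFlow.js directly and ship the model URL as a default.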

Using Handtrack.js in a Web App

You can use Handtrack.js simply by including the library URL in a script tag or by importing it from npm, using javascript build tools. Below are some easy instructions to get started!

Using Script Tag

The Handtrack.js minified js file is currently hosted using jsdelivr, a free open source cdn that lets you include any npm package in your web application.

    <!-- Load the Handtrack.js library. -->
    <script src="https://cdn.jsdelivr.net/npm/handtrackjs/dist/handtrack.min.js"> </script>

    <!-- Replace this with your image. Make sure CORS settings allow reading the image! -->
    <img id="img" src="hand.jpg"/>
    <canvas id="canvas" class="border"></canvas>

    <!-- Place your code in the script tag below. You can also use an external .js file -->
    <script>
      // Notice there is no 'import' statement. 'handTrack' and 'tf' are
      // available on the index page because of the script tag above.
      const img = document.getElementById('img');
      const canvas = document.getElementById('canvas');
      const context = canvas.getContext('2d');

      // Load the model.
      handTrack.load().then(model => {
        // Detect objects in the image.
        model.detect(img).then(predictions => {
          console.log('Predictions: ', predictions);
        });
      });
    </script>

The snippet above prints out bounding box predictions for an image passed in via the img tag. By submitting successive frames from an img, video, or canvas element, you can then “track” hands across frames (you will need to keep the state of each hand yourself as frames progress).
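One simple way to keep that per-hand state is to match each new detection to the nearest detection from the previous frame. The sketch below is one possible approach, not something Handtrack.js provides: `createHandTracker` and `maxDistance` are hypothetical names, and it assumes each prediction carries a `bbox` of the form `[x, y, width, height]` plus a `score`, so check the shape of the predictions you actually receive.

```javascript
// Centre point of a bounding box given as [x, y, width, height].
function centerOf([x, y, width, height]) {
  return { x: x + width / 2, y: y + height / 2 };
}

// Creates a tracker that carries hand ids from one frame to the next by
// greedily matching each detection to the nearest unclaimed previous centre.
// Detections farther than maxDistance pixels from every previous hand
// are treated as new hands and get a fresh id.
function createHandTracker(maxDistance = 80) {
  let nextId = 1;
  let previous = []; // [{ id, center }] from the last frame

  return function update(predictions) {
    const unclaimed = previous.slice();
    const current = predictions.map((prediction) => {
      const center = centerOf(prediction.bbox);
      let bestIndex = -1;
      let bestDistance = maxDistance;
      unclaimed.forEach((hand, index) => {
        const distance = Math.hypot(hand.center.x - center.x, hand.center.y - center.y);
        if (distance <= bestDistance) {
          bestDistance = distance;
          bestIndex = index;
        }
      });
      // Reuse the matched id (removing it from the pool) or allocate a new one.
      const id = bestIndex >= 0 ? unclaimed.splice(bestIndex, 1)[0].id : nextId++;
      return { id, center, bbox: prediction.bbox, score: prediction.score };
    });
    previous = current.map(({ id, center }) => ({ id, center }));
    return current;
  };
}
```

Calling the returned `update` function once per frame with the latest predictions yields the same hands annotated with stable ids. Greedy matching is cheap but can swap ids when hands cross; a more robust tracker would need a better assignment strategy.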

Using NPM

You can install Handtrack.js as an npm package using the following:

npm install --save handtrackjs

An example of how you can import and use it in a React app is given below.

    import * as handTrack from 'handtrackjs';

    const img = document.getElementById('img');

    // Load the model.
    handTrack.load().then(model => {
      console.log('model loaded');
      // Detect objects in the image.
      model.detect(img).then(predictions => {
        console.log('Predictions: ', predictions);
      });
    });

In addition to the main methods to load the model and detect hands, Handtrack.js also provides other helper methods:

- model.getFPS(): get FPS, calculated as the number of detections per second.

- model.renderPredictions(predictions, canvas, context, mediasource): draw bounding boxes (and the input mediasource image) on the specified canvas.

- model.getModelParameters(): returns model parameters.

- model.setModelParameters(modelParams): updates model parameters.

- model.dispose(): delete the model instance.

- handTrack.startVideo(video): start the camera video stream on the given video element. Returns a promise that can be used to check whether the user granted video permission.

- handTrack.stopVideo(video): stop the video stream.

For more information on the methods supported by the Handtrack.js library, please see the project’s API description on Github.
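As a rough illustration of how the helper methods listed above might fit together for a webcam feed, consider the sketch below. It is written under several assumptions: `startHandDetection`, `onPredictions`, and `modelParams` are hypothetical names; `startVideo` and `stopVideo` are assumed to live on the `handTrack` namespace; and the promise returned by `startVideo` is assumed to resolve to a truthy value when camera access is granted. The `handTrack` object is passed in as an argument so the same function works with either the script tag or the npm import.

```javascript
// Starts the camera, then detects and draws hands on every animation frame.
// Resolves to a stop() function that ends the loop and releases resources.
async function startHandDetection(handTrack, video, canvas, onPredictions, modelParams) {
  const model = await handTrack.load();
  if (modelParams) model.setModelParameters(modelParams);

  // Assumption: the promise resolves to a truthy status when access is granted.
  const cameraGranted = await handTrack.startVideo(video);
  if (!cameraGranted) {
    model.dispose();
    throw new Error('Camera access was not granted');
  }

  const context = canvas.getContext('2d');
  let running = true;

  async function detectFrame() {
    if (!running) return;
    try {
      const predictions = await model.detect(video);
      if (!running) return; // stop() was called while detection was in flight
      model.renderPredictions(predictions, canvas, context, video);
      onPredictions(predictions, model.getFPS());
      requestAnimationFrame(detectFrame);
    } catch (error) {
      running = false;
      console.error('Hand detection stopped:', error);
    }
  }
  detectFrame();

  return function stop() {
    running = false;
    handTrack.stopVideo(video);
    model.dispose();
  };
}
```

Scheduling the next detection with `requestAnimationFrame` only after the previous one finishes keeps detections from piling up when inference is slower than the display refresh rate.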

What has been built with Handtrack.js?

Handtrack.js was created to help developers quickly prototype engaging interfaces where users can interact using their hands, without worrying about the underlying machine learning models for hand detection or going through an involved installation process. Handtrack.js is useful for scenarios such as

- Replacing mouse control with hand movement, e.g. for interactive art installations.

- Combining hand tracking into higher-order gestures, e.g. using the distance between two detected hands to simulate pinch and zoom.

- Cases where an overlap of a hand and other objects can represent a meaningful interaction signal (e.g. a touch or selection event for an object).

- Cases where hand motions can be a proxy for activity recognition (e.g. automatically tracking movement activity from a video or images of individuals playing chess, or tracking a user’s golf swing), or simply counting how many humans are present in an image or video frame.

- Machine learning education, as the Handtrack.js library provides a valuable interface which can be used to demonstrate how changes in the model parameters (confidence threshold, IoU threshold, image size, etc.) can affect detection results.
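Two of the scenarios above, pinch and zoom from the distance between two hands and touch events from hand/object overlap, reduce to a little geometry on bounding boxes. The sketch below is a minimal illustration, assuming boxes are `[x, y, width, height]` arrays and each prediction exposes its box as `bbox`; the helper names are hypothetical and not part of Handtrack.js.

```javascript
// Centre of a bounding box given as [x, y, width, height] (assumed layout).
function boxCenter([x, y, width, height]) {
  return { x: x + width / 2, y: y + height / 2 };
}

// Distance in pixels between the centres of the first two detected hands,
// or null when fewer than two hands are present.
function handDistance(predictions) {
  if (predictions.length < 2) return null;
  const a = boxCenter(predictions[0].bbox);
  const b = boxCenter(predictions[1].bbox);
  return Math.hypot(a.x - b.x, a.y - b.y);
}

// Zoom factor relative to the hand distance when the gesture started:
// values above 1 mean the hands moved apart (zoom in), below 1 zoom out.
function zoomFactor(startDistance, currentDistance) {
  if (!(startDistance > 0)) throw new Error('startDistance must be positive');
  return currentDistance / startDistance;
}

// True when two [x, y, width, height] boxes share a non-empty area.
// Boxes that only touch along an edge do not count as overlapping.
function boxesOverlap([ax, ay, aw, ah], [bx, by, bw, bh]) {
  return ax < bx + bw && bx < ax + aw && ay < by + bh && by < ay + ah;
}
```

For a touch or selection event, `boxesOverlap` could be called with a hand's box and the on-screen bounds of a target element; in practice some smoothing over several frames helps avoid flicker from noisy detections.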

Below are some interesting projects that integrate Handtrack.js in building experiences.

Since its release, Handtrack.js has been well received by the community (over 1,400 stars on GitHub), and the Handtrack.js npm library has been used in over 50 public projects hosted on GitHub. Some examples of community projects that use Handtrack.js include:

- Molecular Playground, a system for displaying large-scale interactive molecules in prominent public spaces. Handtrack.js is used to allow users to interact with and rotate 3D visualizations of molecules by waving their hands.

- Jammer.js, which uses Handtrack.js to detect hands in the browser and Hammer.js to recognize gestures, so you can add touch-style hand gestures directly to your app, e.g. swipe, rotate, pinch and zoom.

- Programming an Air Guitar, a live coding tutorial on using Handtrack.js to create an air guitar (play sound notes by “touching” them on screen).

Jammer.js - an example of interactive swiping designed using Handtrack.js

A wide range of developers have used Handtrack.js including developers new to javascript, experienced javascript developers seeking to integrate ML in their applications, and ML engineers who have modified the Handtrack.js repo and bundled their own models for community use in javascript.

Limitations and Some Best Practices

The overall approach of deploying models in the browser is not without limitations. First, JavaScript in the browser runs on a single main thread by default, which means care should be taken to ensure that the main UI thread is not blocked at any given time. Second, image processing models can be large, which may translate to slow page loads and a poor user experience. For comparison, in web terms, a file size of 500 KB is already considered large; the trained Handtrack.js model is 18.5 MB.

A non-exhaustive list of good practices to follow is offered below:

- Avoid expensive operations such as standard convolutions. Convolution operations are frequently used in the design of image processing models. While they work well in practice, they are computationally intensive and, where possible, should be replaced with lighter operations such as depthwise separable convolutions.

- Remove unnecessary post-processing operations. Some model graphs contain optional post-processing operations which can increase inference latency. For example, excluding post-processing operations from the model graph during conversion (as suggested in this TensorFlow.js example) made inference almost 2x faster for Handtrack.js.

- Explore a variety of approaches that help reduce the size of trained models. Examples include model quantization, model compression, and model distillation.

- Design to communicate long-running operations and limitations of ML models. It is important to explicitly inform the user of any background operations that may be long-running (e.g. by showing spinners during model loading and inference). Similarly, it is useful to communicate model confidence estimates where available (the Handtrack.js API allows the developer to specify a minimum confidence threshold for returned results).

- Other best practices on designing with machine learning such as the People + AI Guidebook are also recommended.
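To make the first recommendation concrete, the arithmetic below compares the multiply-add count of a standard convolution with that of a depthwise separable one, using the usual cost formulas for a k x k kernel with stride 1 and "same" padding. The layer sizes are illustrative assumptions, not measurements from the Handtrack.js model, and the function names are hypothetical.

```javascript
// Multiply-adds for a standard convolution: every output channel looks at
// every input channel through a k x k window at every output position.
function standardConvCost({ kernelSize, inChannels, outChannels, outHeight, outWidth }) {
  return kernelSize * kernelSize * inChannels * outChannels * outHeight * outWidth;
}

// Multiply-adds for a depthwise separable convolution: one k x k filter per
// input channel (depthwise), followed by a 1 x 1 convolution that mixes
// channels (pointwise).
function separableConvCost({ kernelSize, inChannels, outChannels, outHeight, outWidth }) {
  const depthwise = kernelSize * kernelSize * inChannels * outHeight * outWidth;
  const pointwise = inChannels * outChannels * outHeight * outWidth;
  return depthwise + pointwise;
}

// Illustrative layer: 3 x 3 kernel, 64 input channels, 128 output channels,
// evaluated at a single output position to keep the numbers small.
const layer = { kernelSize: 3, inChannels: 64, outChannels: 128, outHeight: 1, outWidth: 1 };
const standard = standardConvCost(layer);   // 9 * 64 * 128 = 73728
const separable = separableConvCost(layer); // 9 * 64 + 64 * 128 = 8768
console.log(`standard: ${standard}, separable: ${separable}`);
console.log(`reduction: ${(standard / separable).toFixed(2)}x`);
```

For this layer the separable form needs roughly 8.4 times fewer multiply-adds, and the ratio is the same at every output position, which is why architectures built on this operation suit latency-sensitive settings like the browser.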

Conclusion

Machine learning in the browser is an exciting and growing area. Using tools like TensorFlow.js, JavaScript developers can easily use trained TensorFlow models directly in the browser and craft engaging, interactive experiences. ML in the browser also brings attractive benefits such as privacy, ease of distribution, and reduced latency (in some cases). With advances in active research areas such as model compression, model quantization, and model distillation, it will become increasingly possible to efficiently run complex, highly accurate models in resource-constrained environments such as browsers. Furthermore, advances in web standards for accelerated computing (e.g. WebGPU, WebAssembly) mean browser-based applications are set to become faster. All of these point to a future where machine learning models (much like 3D web graphics) can be run efficiently in the browser.