How ModiFace utilized TensorFlow.js in production for AR makeup try on in the browser

Guest post by Jeff Houghton, Chief Operating Officer - ModiFace Inc.

ModiFace has been creating artificial intelligence tech for the beauty industry for over a decade and began working on AR experiences before "Augmented Reality" was a household term. As smartphones hit the market, ModiFace quickly took advantage of the platform to switch from a virtual try on for a 2D image to a virtual try on for a live 3D video. In 2018, ModiFace was acquired by the L'Oreal Group, and since then we’ve made our live virtual try on even more accessible by extending our reach to the web using TensorFlow.js.

|

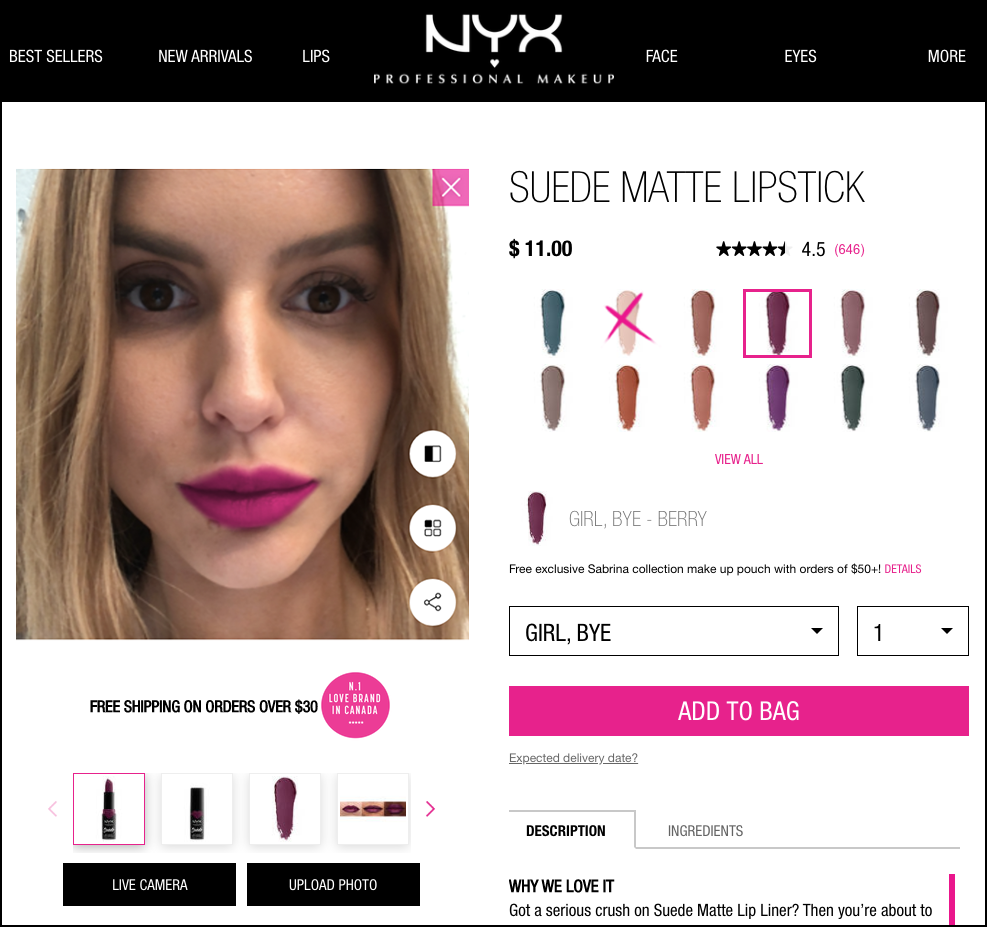

| A virtual try on available from L’Oreal Paris, easy to access from their website product pages. |

Users can simply visit a L’Oreal brand product page and, instead of just browsing through product photos, see how the product will look on them before they purchase it. This makes finding the right lipstick shade more fun, and it is something you can do from the comfort of your own home. The solution is available with 10 brands in over 50 countries and counting, and the benefits for consumers are clear. Not only does it help consumers make a purchase confidently, with a clear increase in conversions, but it increases user engagement as well.

Our previous implementation

Trying to create a fast, accurate, and lightweight AR experience can be very challenging. A few seconds of load time can determine whether the experience captures a user’s interest or whether they click away to view a photo instead.

Face trackers have historically been very large, and our best implementations were still around 5 MB after compression. These solutions used a random forest to find a match for the face, so the smaller the implementation, the more accuracy was sacrificed. We had pushed the structure to its size limit, and it still took a while to load on slower networks, especially on mobile devices without WiFi. With less accuracy, edge cases for different face shapes became harder to track. Our original goal in launching virtual try on via the web was to make the experience accessible; instead, it became an experience that was not inclusive of everyone.

To improve our accuracy and create a product that we could continuously improve, we turned to more state-of-the-art solutions such as using convolutional neural networks (CNNs) to find a user’s face. However, current popular architectures (including those we’ve used on other platforms) suffered from the same problems as our previous experience: they were large and computationally expensive.

Our new solution

We focused our development on network structures that aim to maximize accuracy with less available computing power. Using MobileNetV2, we greatly reduced the number of operations in the network at only a slight cost to performance. Using a residual design similar to ResNet also reduced the issues that arise when training larger network structures.
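As a rough illustration of where this kind of saving comes from: MobileNetV2 is built around depthwise separable convolutions, which split one standard convolution into a per-channel spatial filter followed by a 1x1 channel-mixing step. The sketch below compares multiply-accumulate (MAC) counts for the two forms. The layer shape is hypothetical and is not taken from the ModiFace network.

```typescript
// MAC counts for a single convolution layer (stride 1, "same" padding assumed).
interface ConvShape {
  height: number;      // output feature-map height
  width: number;       // output feature-map width
  inChannels: number;
  outChannels: number;
  kernel: number;      // square kernel size, e.g. 3 for a 3x3 filter
}

// Standard convolution: every output channel looks at every input channel
// through a full kernel x kernel window.
function standardConvMacs(s: ConvShape): number {
  return s.height * s.width * s.inChannels * s.outChannels * s.kernel * s.kernel;
}

// Depthwise separable convolution: one kernel x kernel filter per input
// channel (depthwise), then a 1x1 convolution to mix channels (pointwise).
function depthwiseSeparableMacs(s: ConvShape): number {
  const depthwise = s.height * s.width * s.inChannels * s.kernel * s.kernel;
  const pointwise = s.height * s.width * s.inChannels * s.outChannels;
  return depthwise + pointwise;
}

// Hypothetical layer shape, chosen only for illustration.
const exampleLayer: ConvShape = {
  height: 56, width: 56, inChannels: 64, outChannels: 128, kernel: 3,
};
const standardMacs = standardConvMacs(exampleLayer);
const separableMacs = depthwiseSeparableMacs(exampleLayer);
console.log(`standard: ${standardMacs} MACs, separable: ${separableMacs} MACs`);
console.log(`reduction: ${(standardMacs / separableMacs).toFixed(1)}x`);
```

The ratio depends only on the kernel size and the number of output channels, so the saving grows as layers get wider.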

[Figure: Our two-stage network architecture]

Our structure allowed us to use extremely small and computationally cheap heatmaps to focus processing in relevant areas of the image that will help improve accuracy at a lower cost. Furthermore, ROI align "zooms in" on our facial landmarks of interest, giving us the benefit of processing high resolution images with less impact on speed.
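The two-stage idea can be sketched in plain TypeScript: find the strongest response in a coarse heatmap, map that cell back to full-resolution coordinates, and resample a small patch around it with bilinear interpolation. This is a simplified stand-in for ROI align (one sample per output cell instead of several averaged samples), and every name, size, and value here is an assumption made for illustration, not the production code.

```typescript
// A single-channel image or heatmap stored row-major.
interface Grid {
  width: number;
  height: number;
  data: Float32Array;
}

// Return the (x, y) cell with the highest response in a coarse heatmap.
function heatmapPeak(heatmap: Grid): { x: number; y: number } {
  let best = 0;
  for (let i = 1; i < heatmap.data.length; i++) {
    if (heatmap.data[i] > heatmap.data[best]) best = i;
  }
  return { x: best % heatmap.width, y: Math.floor(best / heatmap.width) };
}

// Bilinear sample at a continuous coordinate, where pixel i covers [i, i + 1)
// and its centre sits at i + 0.5. Coordinates are clamped to the image.
function bilinear(img: Grid, x: number, y: number): number {
  const px = Math.min(Math.max(x - 0.5, 0), img.width - 1);
  const py = Math.min(Math.max(y - 0.5, 0), img.height - 1);
  const x0 = Math.floor(px);
  const y0 = Math.floor(py);
  const x1 = Math.min(x0 + 1, img.width - 1);
  const y1 = Math.min(y0 + 1, img.height - 1);
  const fx = px - x0;
  const fy = py - y0;
  const at = (cx: number, cy: number): number => img.data[cy * img.width + cx];
  const topRow = at(x0, y0) * (1 - fx) + at(x1, y0) * fx;
  const bottomRow = at(x0, y1) * (1 - fx) + at(x1, y1) * fx;
  return topRow * (1 - fy) + bottomRow * fy;
}

// Resample a square region centred on (cx, cy) into an outSize x outSize patch.
// Sample points are fractional, so the region is never snapped to the pixel grid.
function roiAlign(img: Grid, cx: number, cy: number, roiSize: number, outSize: number): Grid {
  const out = new Float32Array(outSize * outSize);
  const step = roiSize / outSize;
  const roiLeft = cx - roiSize / 2;
  const roiTop = cy - roiSize / 2;
  for (let oy = 0; oy < outSize; oy++) {
    for (let ox = 0; ox < outSize; ox++) {
      out[oy * outSize + ox] = bilinear(img, roiLeft + (ox + 0.5) * step, roiTop + (oy + 0.5) * step);
    }
  }
  return { width: outSize, height: outSize, data: out };
}

// Hypothetical inputs: an 8x8 image whose pixel value equals its x index,
// and a 2x2 heatmap whose strongest cell is the top-right one.
const frameImage: Grid = {
  width: 8,
  height: 8,
  data: Float32Array.from({ length: 64 }, (_, i) => i % 8),
};
const coarseHeatmap: Grid = { width: 2, height: 2, data: Float32Array.from([0.1, 0.9, 0.2, 0.3]) };

const peakCell = heatmapPeak(coarseHeatmap);
const cellSize = frameImage.width / coarseHeatmap.width; // image pixels per heatmap cell
const facePatch = roiAlign(frameImage, (peakCell.x + 0.5) * cellSize, (peakCell.y + 0.5) * cellSize, 4, 2);
console.log(peakCell, Array.from(facePatch.data));
```

The expensive second stage only ever sees the small resampled patch, which is how a design like this can work from high-resolution input without processing every pixel at full cost.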

With a strong neural network in place, we needed to deploy it to production. Generally, when you think of deploying neural networks on the web, you think of server-side computation. This is not practical for live experiences due to the network latency of transferring each frame to the backend. As a result, a framework that could run neural networks in a web browser was needed.
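A back-of-the-envelope calculation shows why a server round trip is hard to fit into a live experience. The sketch below compares the time between frames at a target frame rate with the delay of sending one frame to a backend and waiting for the result. All of the numbers are hypothetical placeholders, not measurements.

```typescript
// Inputs for a server-side inference path. Values used below are assumptions.
interface ServerPath {
  roundTripMs: number;  // network round trip to the backend
  frameBytes: number;   // size of one compressed camera frame
  uplinkMbps: number;   // upload bandwidth in megabits per second
  inferenceMs: number;  // model execution time on the server
}

// Time between consecutive frames at a target frame rate.
function frameBudgetMs(fps: number): number {
  return 1000 / fps;
}

// Delay before a server-side result for a single frame could be drawn.
function serverDelayMs(p: ServerPath): number {
  // bits / (megabits per second * 1000) gives milliseconds
  const uploadMs = (p.frameBytes * 8) / (p.uplinkMbps * 1000);
  return p.roundTripMs + uploadMs + p.inferenceMs;
}

// Hypothetical mobile connection and frame size.
const mobilePath: ServerPath = {
  roundTripMs: 50,
  frameBytes: 50_000,
  uplinkMbps: 5,
  inferenceMs: 10,
};
const budgetAt30Fps = frameBudgetMs(30);
const serverDelay = serverDelayMs(mobilePath);
console.log(`frame interval: ${budgetAt30Fps.toFixed(1)} ms, server delay: ${serverDelay.toFixed(1)} ms`);
console.log(serverDelay > budgetAt30Fps ? "overlay lags the camera" : "overlay keeps up");
```

Under these assumptions the result arrives several frames late, so the rendered makeup would trail the user's face even if the server itself were fast.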

One method of doing this is taking a traditional C++ execution framework and compiling it to either asm.js or WASM. However, compiled frameworks tend to be large and to execute neural networks relatively slowly.

The other method is using a framework built for execution on the web like TensorFlow.js. TensorFlow.js has extensive operator support to ensure compatibility with our models. TensorFlow.js also takes advantage of WebGL to accelerate the execution of neural networks with a GPU to improve inference time. The overall application binary size is also significantly smaller.

The end product is an experience under 3 MB (compressed) that is ready before most users get their camera started. Additionally, our accuracy on various edge cases and facial expressions is greatly improved. We’ve also deployed this with more landmarks, allowing us to expand the capability of our current renderings. This new structure gives us the foundation to improve further with additional training without reworking the overall architecture.

TensorFlow.js not only allows client-side rendering for applications that previously could only exist on powerful devices, but also comes with a host of other benefits. Without a backend to support the tool, it lets us deploy the try on easily and at a low cost across many L’Oreal brands. It also means a user’s image stays fully in their control, which preserves their privacy. To try on makeup, you do not have to send your photos and videos anywhere. Not only does this make for an experience users can trust, but it is also perfectly in line with the L’Oreal brand goals of transparency for their consumers.

ModiFace is focusing heavily on research and innovation that will not only empower consumers, but also provide seamless services that are simple to use. As we look to our next generation of beauty tech products, we expect TensorFlow.js to provide the backbone of this technology. TensorFlow.js gives our team the necessary tools to build amazing experiences, and combined with L’Oreal’s knowledge, beauty will become more and more digital every day.

Try it out yourself

Try some of our web-based demos powered by TensorFlow.js for yourself right now in your web browser. Take your pick from any of the below:

- Maybelline enables you to build your own looks across their entire catalogue.

- Giorgio Armani Beauty allows you to see all their try on enabled products in one place. Click on the product page to try each shade!

- For Urban Decay’s Vice Lipstick, hover to try on over 100 different shades.

- L’Oreal Paris has a massive assortment of try on products. Explore their range of eyeshadows.

- NYX Cosmetics has instant looks to make finding a set of products super simple.