august 16, 2021 — Posted by Khanh LeViet, TensorFlow Developer Advocate and Yu-hui Chen, Software EngineerThe MoveNet iOS sample has been released. Check it out on GitHub. Since MoveNet’s announcement at Google I/O earlier this year, we have received a lot of positive feedback and feature requests. Today, we are excited to share several updates with you: The TensorFlow Lite version of MoveNet is now available on …

Posted by Khanh LeViet, TensorFlow Developer Advocate and Yu-hui Chen, Software Engineer

The MoveNet iOS sample has been released. Check it out on GitHub.

Since MoveNet’s announcement at Google I/O earlier this year, we have received a lot of positive feedback and feature requests. Today, we are excited to share several updates with you:

Pose estimation is a machine learning task that estimates the pose of a person from an image or a video by predicting the spatial locations of specific body parts (keypoints). MoveNet is a state-of-the-art pose estimation model that detects 17 keypoints: the nose and the left and right eyes, ears, shoulders, elbows, wrists, hips, knees, and ankles.
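Concretely, the single-pose MoveNet models output a float32 tensor of shape [1, 1, 17, 3]. Each of the 17 rows is a (y, x, score) triple, with y and x normalized to [0, 1] relative to the model's input image, and the rows follow the COCO keypoint order. The sketch below assumes you have already read that tensor out of the TensorFlow Lite interpreter as nested Python lists; `parse_keypoints` is a hypothetical helper, not part of any TensorFlow API.

```python
from typing import Dict, List, Tuple

# The 17 keypoints, in the COCO order used by MoveNet's output tensor.
KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def parse_keypoints(
    output: List[List[List[List[float]]]], image_width: int, image_height: int
) -> Dict[str, Tuple[float, float, float]]:
    """Map a [1, 1, 17, 3] MoveNet output to {name: (x_px, y_px, score)}.

    Hypothetical helper: assumes each row is (y, x, score) with y and x
    normalized to [0, 1] relative to the image that was fed to the model.
    """
    rows = output[0][0]
    if len(rows) != len(KEYPOINT_NAMES):
        raise ValueError(f"expected 17 keypoints, got {len(rows)}")
    result = {}
    for name, (y, x, score) in zip(KEYPOINT_NAMES, rows):
        # Note the (y, x) order in the tensor; we return (x, y) in pixels.
        result[name] = (x * image_width, y * image_height, score)
    return result


if __name__ == "__main__":
    # A fake output where every keypoint sits at y=0.5, x=0.25 with score 0.9.
    fake_output = [[[[0.5, 0.25, 0.9]] * 17]]
    print(parse_keypoints(fake_output, 640, 480)["nose"])
```

If you resized or padded the camera frame before inference, map the pixel coordinates back through that transform as well.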

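We have released two versions of MoveNet: MoveNet.Lightning, which is smaller and faster but less accurate, and MoveNet.Thunder, which is more accurate but larger and slower.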

The MoveNet models outperform PoseNet (paper, blog post, model), our previous TensorFlow Lite pose estimation model, on a variety of benchmark datasets (see the evaluation and benchmark results in the table below).

These MoveNet models are available in both the TensorFlow Lite FP16 and INT8 quantized formats, allowing maximum compatibility with hardware accelerators.
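For background on the two formats: the FP16 variants store weights as 16-bit floats, while TensorFlow Lite's INT8 quantization represents a real value r with an 8-bit integer q through a scale and a zero point, r = scale × (q − zero_point). The plain-Python sketch below only illustrates that affine mapping; the scale and zero point are made-up values, not parameters taken from the MoveNet models.

```python
def quantize(r: float, scale: float, zero_point: int) -> int:
    """Affine-quantize a real value to a signed 8-bit integer.

    Values outside the representable range saturate at -128 or 127.
    Python's round() rounds halves to even; this is only an illustration.
    """
    q = round(r / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate real value: r = scale * (q - zero_point)."""
    return scale * (q - zero_point)


if __name__ == "__main__":
    scale, zero_point = 0.02, -5  # illustrative parameters only
    for r in (0.0, 0.5, -1.3, 10.0):
        q = quantize(r, scale, zero_point)
        # 10.0 saturates, so its round trip is far from the original value.
        print(r, q, dequantize(q, scale, zero_point))
```

In-range values come back within half a quantization step (scale / 2) of the original, which is the precision traded for the smaller INT8 model size shown in the benchmark table.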

This version of MoveNet detects a single pose in the input image. To improve detection accuracy on videos, we have also implemented a smart cropping algorithm: the model zooms into the region where a pose was detected in the previous frame, so that it can see finer details and make better predictions in the current frame. If there is more than one person in the image, the model and the cropping algorithm try to focus on the person closest to the image center.
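The idea of cropping around the previous frame's pose can be illustrated with a deliberately simplified sketch. This is not the cropping algorithm used in the sample apps: the helper `next_crop_region`, the 0.2 score threshold, and the 20% margin are assumptions chosen for illustration. It takes the previous frame's (y, x, score) keypoints, keeps the confident ones, and returns a square region around them that stays inside the frame, falling back to the full frame when no pose was found.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CropRegion:
    """Crop rectangle in pixel coordinates: top-left corner plus size."""
    x: float
    y: float
    width: float
    height: float


def next_crop_region(
    keypoints: List[Tuple[float, float, float]],
    image_width: int,
    image_height: int,
    score_threshold: float = 0.2,  # assumed confidence cutoff
    margin: float = 0.2,           # assumed padding, as a fraction of the box side
) -> CropRegion:
    """Return a square crop around the previous frame's confident keypoints.

    keypoints: (y, x, score) triples with y and x normalized to [0, 1].
    Falls back to the full frame when no keypoint is confident enough.
    """
    confident = [(y * image_height, x * image_width)
                 for y, x, score in keypoints if score >= score_threshold]
    if not confident:
        return CropRegion(0.0, 0.0, float(image_width), float(image_height))

    ys = [p[0] for p in confident]
    xs = [p[1] for p in confident]
    center_y = (min(ys) + max(ys)) / 2
    center_x = (min(xs) + max(xs)) / 2

    # Take the longer side of the keypoint bounding box and enlarge it by the
    # margin on both sides, leaving room for movement between frames.
    side = max(max(ys) - min(ys), max(xs) - min(xs)) * (1 + 2 * margin)
    # Keep the square at least 1 px and no larger than the frame.
    side = min(max(side, 1.0), float(image_width), float(image_height))

    # Shift the square so it stays entirely inside the frame.
    x0 = min(max(center_x - side / 2, 0.0), image_width - side)
    y0 = min(max(center_y - side / 2, 0.0), image_height - side)
    return CropRegion(x0, y0, side, side)
```

Feeding the model this crop instead of the whole frame lets it see the person at a higher effective resolution, which is the intuition behind the zooming described above.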

If you are interested in a deep dive into MoveNet's implementation details, check out our earlier blog post, which covers its model architecture and the dataset it was trained on.

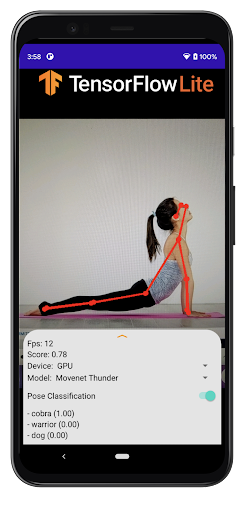

We have released new pose estimation sample apps for Android, iOS, and Raspberry Pi so that you can quickly try out different pose estimation models (MoveNet Lightning, MoveNet Thunder, PoseNet) on the platform of your choice.

In the Android and iOS samples, you can also choose an accelerator to run the pose estimation models: GPU on both platforms, NNAPI on Android, and Core ML on iOS.

Screenshot of the Android sample app. The image is from Pixabay.

We have optimized MoveNet to run well on hardware accelerators supported by TensorFlow Lite, including the GPU and accelerators available via the Android Neural Networks API (NNAPI). The performance benchmark below may help you choose the runtime configuration best suited to your use case.

| Model | Size (MB) | mAP* | Latency** (ms), Pixel 5 - CPU | Latency** (ms), Pixel 5 - GPU | Latency** (ms), Raspberry Pi 4 - CPU 4 threads |
|---|---|---|---|---|---|
| MoveNet.Thunder (FP16 quantized) | 12.6 | 72.0 | 155 | 45 | 594 |
| MoveNet.Thunder (INT8 quantized) | 7.1 | 68.9 | 100 | 52 | 251 |
| MoveNet.Lightning (FP16 quantized) | 4.8 | 63.0 | 60 | 25 | 186 |
| MoveNet.Lightning (INT8 quantized) | 2.9 | 57.4 | 52 | 28 | 95 |
| PoseNet | 13.3 | 45.6 | 80 | 40 | 338 |

* mAP was measured on a subset of the COCO keypoint dataset where we filter and crop each image to contain only one person.

** Latency was measured end-to-end using the Android and Raspberry Pi sample apps with TensorFlow 2.5 under sustained load.
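One way to use the benchmark is to pick the most accurate model that fits a per-frame latency budget on your target device (for example, about 33 ms per frame for 30 FPS). The sketch below hard-codes the numbers from the benchmark table; the `pick_model` helper and the device keys are illustrative names, and measurements on your own device and app should take precedence over these figures.

```python
from typing import Dict, Optional

# mAP and end-to-end latency (ms) per device, copied from the benchmark table.
BENCHMARK: Dict[str, Dict[str, float]] = {
    "MoveNet.Thunder (FP16)": {"mAP": 72.0, "pixel5_cpu": 155, "pixel5_gpu": 45, "rpi4_cpu": 594},
    "MoveNet.Thunder (INT8)": {"mAP": 68.9, "pixel5_cpu": 100, "pixel5_gpu": 52, "rpi4_cpu": 251},
    "MoveNet.Lightning (FP16)": {"mAP": 63.0, "pixel5_cpu": 60, "pixel5_gpu": 25, "rpi4_cpu": 186},
    "MoveNet.Lightning (INT8)": {"mAP": 57.4, "pixel5_cpu": 52, "pixel5_gpu": 28, "rpi4_cpu": 95},
    "PoseNet": {"mAP": 45.6, "pixel5_cpu": 80, "pixel5_gpu": 40, "rpi4_cpu": 338},
}


def pick_model(device: str, latency_budget_ms: float) -> Optional[str]:
    """Return the highest-mAP model whose latency on `device` fits the budget,
    or None if no model is fast enough."""
    candidates = [
        (stats["mAP"], name)
        for name, stats in BENCHMARK.items()
        if stats[device] <= latency_budget_ms
    ]
    return max(candidates)[1] if candidates else None


if __name__ == "__main__":
    print(pick_model("pixel5_gpu", 33))  # budget for roughly 30 FPS
    print(pick_model("rpi4_cpu", 100))
```

Note how the answer depends on the accelerator: on the Pixel 5 GPU, FP16 Lightning fits the 30 FPS budget, while on the Raspberry Pi CPU only the INT8 Lightning model fits a 100 ms budget.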

Here are some tips for deciding which model and accelerator to use:

While the pose estimation model tells you where the pose keypoints are, many fitness applications need to go further and classify the pose, for example whether it's a yoga goddess pose or a plank pose, to deliver relevant information to users.

To make pose classification easier to implement, we've also released a Colab notebook that teaches you how to use MoveNet and TensorFlow Lite to train a custom pose classification model on your own pose dataset. This means that if you want to recognize yoga poses, all you need to do is collect images of the poses you want to recognize, label them, and follow the tutorial to train a yoga pose classifier and deploy it in your applications.

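The pose classifier consists of two stages: first, MoveNet detects the keypoints in the input image; then, a small TensorFlow Lite model classifies the pose from those keypoints.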

An example of pose classification using MoveNet. The input image is from Pixabay.

To train a custom pose classifier, prepare the pose images and organize them in the folder structure shown below, where each subfolder name is the name of a class you want to recognize. Then run the notebook to train a custom pose classifier and convert it to the TensorFlow Lite format.

```
yoga_poses
|__ downdog
    |______ 00000128.jpg
    |______ 00000181.bmp
    |______ ...
|__ goddess
    |______ 00000243.jpg
    |______ 00000306.jpg
    |______ ...
...
```
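Reading that folder layout into labeled examples takes only a few lines. The sketch below uses a hypothetical `list_labeled_images` helper and an assumed set of accepted image extensions; it walks the class subfolders and returns the sorted class names plus (path, class index) pairs. The notebook itself may load the data differently.

```python
from pathlib import Path
from typing import List, Tuple

# Assumed set of image extensions to accept; adjust for your dataset.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def list_labeled_images(root: str) -> Tuple[List[str], List[Tuple[Path, int]]]:
    """Return (class_names, [(image_path, class_index), ...]).

    Each subfolder of `root` is one pose class; its name becomes the label.
    Files with other extensions (e.g. notes.txt) are skipped.
    """
    root_dir = Path(root)
    class_names = sorted(p.name for p in root_dir.iterdir() if p.is_dir())
    examples = []
    for index, name in enumerate(class_names):
        for image_path in sorted((root_dir / name).iterdir()):
            if image_path.is_file() and image_path.suffix.lower() in IMAGE_EXTENSIONS:
                examples.append((image_path, index))
    return class_names, examples


if __name__ == "__main__":
    names, examples = list_labeled_images("yoga_poses")
    print(names, len(examples))
```

Sorting the class names makes the label indices deterministic, so the index-to-name mapping used at training time can be reproduced in the app.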

The pose classification TensorFlow Lite model is very small, only about 30 KB. It takes the keypoints output by MoveNet, normalizes the pose coordinates, and feeds them through a few fully connected layers. The output is a list of probabilities, one for each known pose class.

Overview of the pose classification TensorFlow Lite model.
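The normalization step of the classifier can be sketched as follows, assuming a scheme where the pose is translated so that the midpoint of the hips becomes the origin and is then scaled by a body-size estimate derived from the torso length. The helper name `normalize_pose`, the torso multiplier of 2.5, and this particular choice of scale are assumptions for illustration; check the notebook for the exact preprocessing, since the classifier must see the same normalization at training and inference time.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

# Indices of the keypoints we need, in MoveNet's 17-keypoint (COCO) order.
LEFT_SHOULDER, RIGHT_SHOULDER, LEFT_HIP, RIGHT_HIP = 5, 6, 11, 12


def _midpoint(a: Point, b: Point) -> Point:
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)


def normalize_pose(keypoints: List[Point], torso_multiplier: float = 2.5) -> List[float]:
    """Translate and scale 17 (x, y) keypoints, then flatten them to 34 features.

    Hypothetical scheme: the origin is the hip midpoint, and coordinates are
    divided by max(torso_length * torso_multiplier, largest distance from the
    origin), so every feature lies in [-1, 1] regardless of position or size.
    """
    hips = _midpoint(keypoints[LEFT_HIP], keypoints[RIGHT_HIP])
    shoulders = _midpoint(keypoints[LEFT_SHOULDER], keypoints[RIGHT_SHOULDER])
    torso_length = math.dist(hips, shoulders)
    max_distance = max(math.dist(hips, p) for p in keypoints)
    scale = max(torso_length * torso_multiplier, max_distance)
    if scale == 0:
        raise ValueError("degenerate pose: all keypoints coincide")
    features: List[float] = []
    for x, y in keypoints:
        features.extend(((x - hips[0]) / scale, (y - hips[1]) / scale))
    return features
```

Because these features do not depend on where the person stands in the frame or how large they appear, the same pose photographed closer to or farther from the camera maps to the same input vector, which keeps the downstream fully connected layers small.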

You can try your pose classification model in any of the pose estimation sample apps for Android or Raspberry Pi that we have just released.

Our goal is to provide the core pose estimation and action recognition engine so that developers can build creative applications on top of it. Here are some of the directions that we are actively working on:

Please let us know via tflite@tensorflow.org or the TensorFlow Forum if you have any feedback or suggestions!

We would like to thank the other contributors to MoveNet: Ronny Votel, Ard Oerlemans, and Francois Belletti, along with those involved with TensorFlow Lite: Tian Lin and Lu Wang.
