November 15, 2021

Posted by Valentin Bazarevsky, Ivan Grishchenko, Eduard Gabriel Bazavan, Andrei Zanfir, Mihai Zanfir, Jiuqiang Tang, Jason Mayes, Ahmed Sabie, Google

Today, we're excited to share a new version of our model for hand pose detection, with improved accuracy for 2D, novel support for 3D, and the new ability to predict keypoints on both hands simultaneously. Support for multi-hand tracking was one of the most common requests from the developer community, and we're pleased to support it in this release.

You can try a live demo of the new model here. This work improves on our previous model which predicted 21 keypoints, but could only detect a single hand at a time. In this article, we'll describe the new model, and how you can get started.

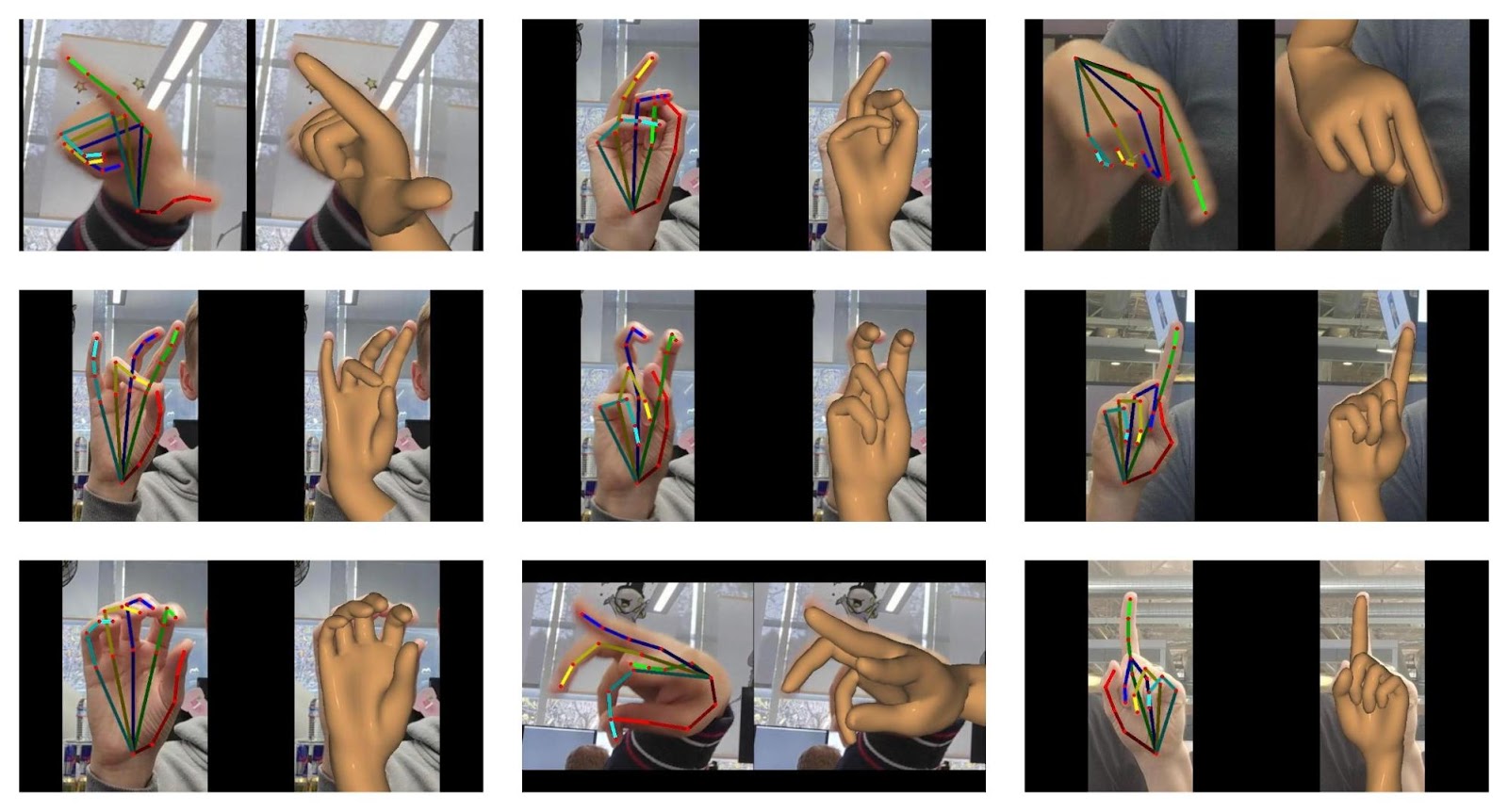

The new hand pose detection model in action.

1. The first step is to import the library. You can either use a <script> tag in your HTML file or install it through NPM:

Through script tag:

<script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/hand-pose-detection"></script>

<!-- Optional: Include below scripts if you want to use MediaPipe runtime. -->

<script src="https://cdn.jsdelivr.net/npm/@mediapipe/hands"></script>

Through NPM:

yarn add @tensorflow-models/hand-pose-detection

# Run below commands if you want to use TF.js runtime.

yarn add @tensorflow/tfjs-core @tensorflow/tfjs-converter

yarn add @tensorflow/tfjs-backend-webgl

# Run below commands if you want to use MediaPipe runtime.

yarn add @mediapipe/hands

If installed through NPM, you need to import the libraries first:

import * as handPoseDetection from '@tensorflow-models/hand-pose-detection';

Next, create an instance of the detector:

const model = handPoseDetection.SupportedModels.MediaPipeHands;

const detectorConfig = {

runtime: 'mediapipe', // or 'tfjs'

modelType: 'full'

};

const detector = await handPoseDetection.createDetector(model, detectorConfig);

Choose a modelType that fits your application's needs. There are two options: lite and full. From lite to full, accuracy increases while inference speed decreases.
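As a minimal sketch of how an application might guard these two settings, the helper below validates a runtime and model type before they are passed to createDetector. The name makeDetectorConfig and its default values are hypothetical and not part of the library; only the option values ('mediapipe', 'tfjs', 'lite', 'full') come from this post.

```javascript
// Hypothetical helper (not part of the library): validates the option
// values named in this post and returns a detector config object.
const RUNTIMES = ['mediapipe', 'tfjs'];
const MODEL_TYPES = ['lite', 'full'];

function makeDetectorConfig(runtime = 'mediapipe', modelType = 'full') {
  if (!RUNTIMES.includes(runtime)) {
    throw new Error(`Unknown runtime: ${runtime}`);
  }
  if (!MODEL_TYPES.includes(modelType)) {
    throw new Error(`Unknown modelType: ${modelType}`);
  }
  return {runtime, modelType};
}

// Example: prefer the faster, less accurate model on a low-powered device.
const liteConfig = makeDetectorConfig('tfjs', 'lite');
```

The returned object has the same shape as detectorConfig above, so it could be passed to createDetector unchanged.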

2. Once you have a detector, you can pass in a video stream or static image to detect hands:

const video = document.getElementById('video');

const hands = await detector.estimateHands(video);

The output format is as follows: hands is an array of detected hand predictions in the image frame. For each hand, the structure contains a handedness prediction (left or right) and a confidence score for that prediction. An array of 2D keypoints is also returned, where each keypoint contains x, y, and name. The x and y values denote the horizontal and vertical position of the hand keypoint in image pixel space, and name denotes the joint label. In addition to 2D keypoints, we also return 3D keypoints (x, y, z values) in metric scale, with the origin at an auxiliary keypoint formed as the average of the first knuckles of the index, middle, ring and pinky fingers.

[

{

score: 0.8,

handedness: 'Right',

keypoints: [

{x: 105, y: 107, name: "wrist"},

{x: 108, y: 160, name: "pinky_finger_tip"},

...

],

keypoints3D: [

{x: 0.00388, y: -0.0205, z: 0.0217, name: "wrist"},

{x: -0.025138, y: -0.0255, z: -0.0051, name: "pinky_finger_tip"},

...

]

}

]
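To illustrate working with this structure, here is a small sketch that filters predictions by score and looks up a keypoint by its name. The helper names confidentHands and findKeypoint and the 0.5 threshold are illustrative assumptions, and the sample data is copied from the example output above rather than produced by the model.

```javascript
// Sample data mirroring the output format shown above (not real model output).
const sampleHands = [
  {
    score: 0.8,
    keypoints: [
      {x: 105, y: 107, name: 'wrist'},
      {x: 108, y: 160, name: 'pinky_finger_tip'},
    ],
  },
];

// Hypothetical helper: keep only hands whose score meets a threshold.
function confidentHands(hands, minScore) {
  return hands.filter((hand) => hand.score >= minScore);
}

// Hypothetical helper: find a 2D keypoint by joint label, or undefined.
function findKeypoint(hand, name) {
  return hand.keypoints.find((kp) => kp.name === name);
}

// Log the wrist position of every sufficiently confident hand.
for (const hand of confidentHands(sampleHands, 0.5)) {
  const wrist = findKeypoint(hand, 'wrist');
  console.log(`wrist at (${wrist.x}, ${wrist.y})`);
}
```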

You can refer to our README for more details about the API.

The updated version of our hand pose detection API improves the quality of 2D keypoint prediction and handedness classification (whether a hand is left or right), and reduces the number of false positive detections. More details about the updated model can be found in our recent paper: On-device Real-time Hand Gesture Recognition.

Following our recently released BlazePose GHUM 3D in TensorFlow.js, we also added metric-scale 3D keypoint prediction to hand pose detection in this release. The origin is an auxiliary keypoint, formed as the mean of the first knuckles of the index, middle, ring and pinky fingers. Our 3D ground truth is based on a statistical 3D human body model called GHUM, which is built using a large corpus of human shapes and motions.
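The auxiliary origin described here can be sketched as a plain average of four keypoints. The joint labels below ('index_finger_mcp' and so on) are an assumption about how the first knuckles are named in keypoints3D, and knuckleCentroid is a hypothetical helper, not a library function.

```javascript
// Assumed joint labels for the first knuckles of the four fingers.
const KNUCKLE_NAMES = [
  'index_finger_mcp',
  'middle_finger_mcp',
  'ring_finger_mcp',
  'pinky_finger_mcp',
];

// Hypothetical helper: mean position of the four knuckles in a
// keypoints3D-style array of {x, y, z, name} objects.
function knuckleCentroid(keypoints3D) {
  const points = KNUCKLE_NAMES.map((name) => {
    const kp = keypoints3D.find((k) => k.name === name);
    if (!kp) throw new Error(`Missing keypoint: ${name}`);
    return kp;
  });
  const sum = points.reduce(
    (acc, p) => ({x: acc.x + p.x, y: acc.y + p.y, z: acc.z + p.z}),
    {x: 0, y: 0, z: 0});
  const n = points.length;
  return {x: sum.x / n, y: sum.y / n, z: sum.z / n};
}
```

If the origin is defined as described, applying this helper to a hand's keypoints3D should give a point close to (0, 0, 0), which may serve as a quick sanity check.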

To obtain hand pose ground truth, we fitted the GHUM hand model to our existing 2D hand dataset and recovered real-world 3D keypoint coordinates. The shape and hand pose variables of the GHUM hand model were optimized so that the reconstructed model aligns with the image evidence. The optimization includes 2D keypoint alignment, shape and pose regularization terms, anthropometric joint angle limits, and model self-contact penalties.

Sample GHUM hand fittings for hand images with 2D keypoint annotations overlaid. The data was used to train and test a variety of poses leading to better results for more extreme poses.

In this new release, we substantially improved the quality of the models and evaluated them on a dataset of American Sign Language (ASL) gestures. As the evaluation metric for 2D screen coordinates, we used Mean Average Precision (mAP) as suggested by the COCO keypoint challenge methodology.
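In the COCO keypoint methodology, a prediction is scored against ground truth with Object Keypoint Similarity (OKS), and average precision is then computed over OKS thresholds from 0.50 to 0.95. The sketch below shows only the OKS step. The per-keypoint constant k is applied uniformly here as a simplifying assumption, since the constants used for hands are not given in this post, and the optional visible flag on ground-truth points is hypothetical.

```javascript
// Sketch of Object Keypoint Similarity for one hand.
// pred, truth: arrays of {x, y} in pixels, in the same keypoint order.
// area: object scale squared (for example, the bounding box area in pixels).
// k: falloff constant, assumed identical for all keypoints in this sketch.
function objectKeypointSimilarity(pred, truth, area, k) {
  let total = 0;
  let count = 0;
  for (let i = 0; i < truth.length; i++) {
    // Skip ground-truth points explicitly marked as not visible.
    if (truth[i].visible === false) continue;
    const dx = pred[i].x - truth[i].x;
    const dy = pred[i].y - truth[i].y;
    const squaredDistance = dx * dx + dy * dy;
    total += Math.exp(-squaredDistance / (2 * area * k * k));
    count++;
  }
  return count === 0 ? 0 : total / count;
}
```

A perfect prediction scores 1, and the score decays toward 0 as keypoints move away from the ground truth relative to the object's size.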

Hand model evaluation on the American Sign Language dataset

For 3D evaluation we used Mean Absolute Error in Euclidean 3D metric space, with the average error measured in centimeters.

| Model Name | 2D, mAP, % | 3D, mean 3D error, cm |
| --- | --- | --- |
| HandPose GHUM Lite | 79.2 | 1.4 |
| HandPose GHUM Full | 83.8 | 1.3 |
| Previous TensorFlow.js HandPose | 66.5 | N/A |
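As a rough illustration of the 3D metric, the sketch below averages the Euclidean distance between predicted and ground-truth keypoints and converts the result to centimeters. It assumes both inputs are in meters and listed in the same keypoint order. The function name meanEuclideanErrorCm is hypothetical, and this is not the evaluation code used to produce the numbers above.

```javascript
// Sketch: mean per-keypoint Euclidean error, reported in centimeters.
// pred, truth: equally long arrays of {x, y, z}, assumed to be in meters.
function meanEuclideanErrorCm(pred, truth) {
  if (pred.length !== truth.length || pred.length === 0) {
    throw new Error('Expected two non-empty arrays of equal length');
  }
  let totalMeters = 0;
  for (let i = 0; i < pred.length; i++) {
    totalMeters += Math.hypot(
      pred[i].x - truth[i].x,
      pred[i].y - truth[i].y,
      pred[i].z - truth[i].z);
  }
  return (totalMeters / pred.length) * 100;  // meters to centimeters
}
```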

We benchmarked the model across multiple devices. All benchmarks were run with two hands present.

|  | MacBook Pro 15” 2019. Intel Core i9. AMD Radeon Pro Vega 20 Graphics. (FPS) | iPhone 11 (FPS) | Pixel 5 (FPS) | Desktop Intel i9-10900K. Nvidia GTX 1070 GPU. (FPS) |
| --- | --- | --- | --- | --- |
| MediaPipe Runtime With WASM & GPU Accel. | 62 \| 48 | 8 \| 5 | 19 \| 15 | 136 \| 120 |
| TensorFlow.js Runtime | 36 \| 31 | 15 \| 12 | 11 \| 8 | 42 \| 35 |

To see the model’s FPS on your device, try our demo. You can switch the model type and runtime live in the demo UI to see what works best for your device.
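If you would rather measure throughput inside your own application, one simple approach is to record a timestamp after each processed frame and average over the elapsed time. The sketch below assumes timestamps in milliseconds, such as those returned by performance.now() in the browser. The function name averageFps is hypothetical, and this is not necessarily how the demo computes its numbers.

```javascript
// Sketch: average frames per second from a list of frame timestamps (ms).
// Each timestamp marks the completion of one processed frame.
function averageFps(timestampsMs) {
  if (timestampsMs.length < 2) return 0;
  const elapsedMs = timestampsMs[timestampsMs.length - 1] - timestampsMs[0];
  if (elapsedMs <= 0) return 0;
  // N timestamps span N - 1 frame intervals.
  return ((timestampsMs.length - 1) * 1000) / elapsedMs;
}
```

In a render loop, you might push performance.now() into an array after each await detector.estimateHands(video) call and pass the most recent entries to this function.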

In addition to the JavaScript hand pose detection API, these updated hand models are also available in MediaPipe Hands as a ready-to-use Android Solution API and Python Solution API, with prebuilt packages in Android Maven Repository and Python PyPI respectively.

For instance, for Android developers the Maven package can be easily integrated into an Android Studio project by adding the following into the project’s Gradle dependencies:

dependencies {

implementation 'com.google.mediapipe:solution-core:latest.release'

implementation 'com.google.mediapipe:hands:latest.release'

}

The MediaPipe Android Solution is designed to handle different usage scenarios, such as processing live camera feeds, video files, and static images. It also comes with utilities to facilitate overlaying the output landmarks onto either CPU images (with Canvas) or GPU images (using OpenGL). For instance, the following code snippet demonstrates how it can be used to process a live camera feed and render the output on screen in real time:

// Creates MediaPipe Hands.

HandsOptions handsOptions =

HandsOptions.builder()

.setModelComplexity(1)

.setMaxNumHands(2)

.setRunOnGpu(true)

.build();

Hands hands = new Hands(activity, handsOptions);

// Connects MediaPipe Hands to camera.

CameraInput cameraInput = new CameraInput(activity);

cameraInput.setNewFrameListener(textureFrame -> hands.send(textureFrame));

// Registers a result listener.

hands.setResultListener(

handsResult -> {

handsView.setRenderData(handsResult);

handsView.requestRender();

});

// Starts the camera to feed data to MediaPipe Hands.

handsView.post(this::startCamera);

To learn more about MediaPipe Android Solutions, please refer to our documentation and try them out with the example Android Studio project. Also visit MediaPipe Solutions for more cross-platform solutions.

We would like to acknowledge our colleagues who participated in or sponsored creating HandPose GHUM 3D and building the APIs: Cristian Sminchisescu, Michael Hays, Na Li, Ping Yu, George Sung, Jonathan Baccash, Esha Uboweja, David Tian, Kanstantsin Sokal, Gregory Karpiak, Tyler Mullen, Chuo-Ling Chang, Matthias Grundmann.
