September 30, 2022

Posted by Alan Kelly, Software Engineer

We are happy to share that TensorFlow Lite version 2.10 has optimized Reduce (All, Any, Max, Min, Prod, Sum) and Mean operators. These common operators collapse one or more dimensions of a multi-dimensional tensor by combining all the values along each reduced dimension into a single value. Sum, Product, Min, Max, logical AND (All), logical OR (Any) and Mean variants of reduce are available. Reduce is now fast for all possible inputs.
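To make the operation concrete, here is a minimal reference sketch in plain Python. It is not the TensorFlow Lite implementation and says nothing about how the optimized kernels work; the helper name `reduce_axes` and its signature are hypothetical, and the tensor is assumed to be stored as a flat list in row-major order.

```python
from itertools import product
from operator import add, mul


def reduce_axes(flat, shape, axes, op, init):
    """Naive reference reduction (hypothetical helper, not a TFLite API).

    `flat` holds the tensor elements in row-major order, `shape` is the
    tensor shape, and `axes` lists the dimensions to reduce.
    Returns (out_flat, out_shape).
    """
    axes = set(axes)
    kept = [i for i in range(len(shape)) if i not in axes]
    out_shape = [shape[i] for i in kept]

    # Row-major strides of the output tensor.
    out_strides = [1] * len(out_shape)
    for i in range(len(out_shape) - 2, -1, -1):
        out_strides[i] = out_strides[i + 1] * out_shape[i + 1]

    out_size = 1
    for dim in out_shape:
        out_size *= dim
    out = [init] * out_size

    # product() yields indices in row-major order, matching positions in `flat`.
    for value, index in zip(flat, product(*(range(d) for d in shape))):
        # Drop the reduced coordinates to find the output position.
        o = sum(index[k] * s for k, s in zip(kept, out_strides))
        out[o] = op(out[o], value)
    return out, out_shape


values = [1, 2, 3, 4, 5, 6]  # a [2, 3] tensor in row-major order
row_sums, row_shape = reduce_axes(values, [2, 3], [1], add, 0)   # [6, 15], shape [2]
col_prods, col_shape = reduce_axes(values, [2, 3], [0], mul, 1)  # [4, 10, 18], shape [3]
```

The same loop covers Min, Max, All and Any by swapping `op` and `init`. Mean can be obtained by dividing each Sum result by the number of elements folded into it.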

Benchmark for Reduce Mean on Google Pixel 6 Pro Cortex A55 (small core). The input is a 4D tensor of shape [32, 256, 5, 128] reduced over axes [1, 3]. The output is a 2D tensor of shape [32, 5].

Benchmark for Reduce Prod on Google Pixel 6 Pro Cortex A55 (small core). The input is a 4D tensor of shape [32, 256, 5, 128] reduced over axes [1, 3]. The output is a 2D tensor of shape [32, 5].

|

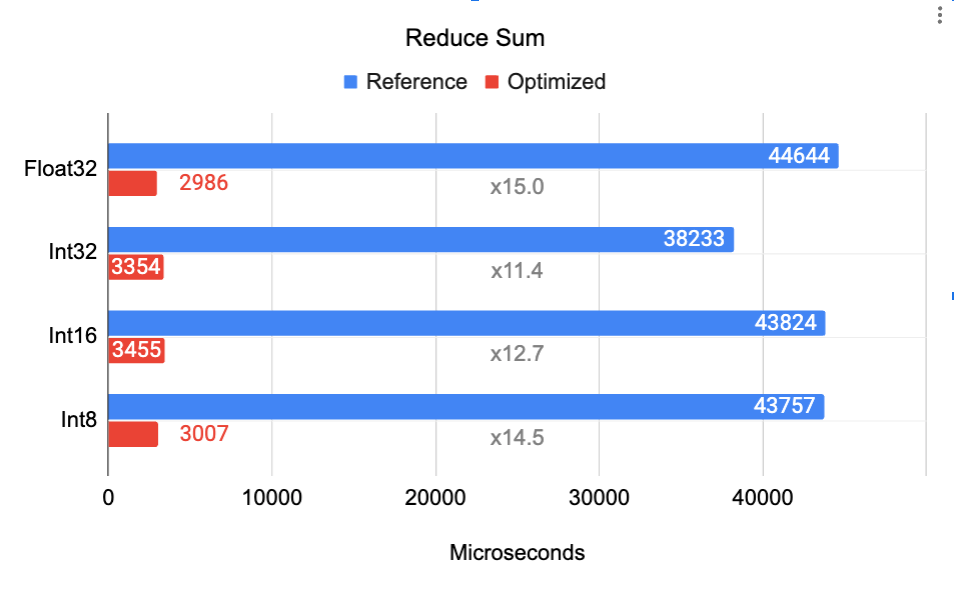

| Benchmark for Reduce Sum on Google Pixel 6 Pro Cortex A55 (small core). Input tensor is 4D of shape [32, 256, 5, 128] reduced over axis [0, 2], Output is a 2D tensor of shape [256, 128]. |
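As a quick sanity check on the shapes quoted in the benchmark captions, the short sketch below computes the output shape and the number of input elements folded into each output value. The helper names `reduced_shape` and `elements_per_output` are illustrative only and are not part of any TensorFlow Lite API.

```python
from math import prod


def reduced_shape(shape, axes):
    """Shape that remains after removing the reduced axes (illustrative helper)."""
    reduced = set(axes)
    return [dim for i, dim in enumerate(shape) if i not in reduced]


def elements_per_output(shape, axes):
    """How many input elements are combined into each output value."""
    return prod(shape[i] for i in set(axes))


benchmark_shape = [32, 256, 5, 128]

# Reduce Mean / Reduce Prod benchmarks: reduce over axes [1, 3].
mean_prod_out = reduced_shape(benchmark_shape, [1, 3])              # [32, 5]
mean_prod_fan_in = elements_per_output(benchmark_shape, [1, 3])     # 256 * 128 = 32768

# Reduce Sum benchmark: reduce over axes [0, 2].
sum_out = reduced_shape(benchmark_shape, [0, 2])                    # [256, 128]
sum_fan_in = elements_per_output(benchmark_shape, [0, 2])           # 32 * 5 = 160
```

Both configurations read the same 5,242,880 input elements, but they differ in which dimensions are collapsed: the Mean and Prod benchmarks reduce the second and the innermost dimension, while the Sum benchmark reduces the outermost and the third.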

To understand how these improvements were made, we need to look at the problem from a different perspective. Let’s take a 3D tensor of shape [3, 2, 5].
