जून 20, 2023 — Posted by Angelica Willis and Akib Uddin, Health AI Team, Google ResearchHow researchers at Google are working to expand global access to maternal healthcare with the help of AITensorFlow Lite* is an open-source framework to run machine learning models on mobile and edge devices. It’s popular for use cases ranging from image classification, object detection, speech recognition, natural language t…

Posted by Angelica Willis and Akib Uddin, Health AI Team, Google Research

Posted by Angelica Willis and Akib Uddin, Health AI Team, Google Research

TensorFlow Lite* is an open-source framework to run machine learning models on mobile and edge devices. It’s popular for use cases ranging from image classification, object detection, speech recognition, natural language tasks, and more. From helping parents of deaf children learn sign language, to predicting air quality, projects using TensorFlow Lite are demonstrating how on-device ML could directly and positively impact lives by making these socially beneficial applications of AI more accessible, globally. In this post, we describe how TensorFlow Lite is being used to help develop ultrasound tools in under-resourced settings.

According to the WHO, complications from pregnancy and childbirth contribute to roughly 287,000 maternal deaths and 2.4 million neonatal deaths worldwide each year. As many as 95% of these deaths occur in under-resourced settings and many are preventable if detected early. Obstetric diagnostics, such as determining gestational age and fetal presentation, are important indicators in planning prenatal care, monitoring the health of the birthing parent and fetus, and determining when intervention is required. Many of these factors are traditionally determined by ultrasound.

Advancements in sensor technology have made ultrasound devices more affordable and portable, integrating directly with smartphones. However, ultrasound requires years of training and experience, and, in many rural or underserved regions, there is a shortage of trained ultrasonography experts, making it difficult for people to access care. Due to this global lack of availability, it has been estimated that as many as two-thirds of pregnant people in these settings do not receive ultrasound screening during pregnancy.

Google Research is building AI models to help expand access to ultrasound, including models to predict gestational age and fetal presentation, to allow health workers with no background in ultrasonography to collect clinically useful ultrasound scans. These models make predictions from ultrasound video obtained using an easy-to-teach operating procedure, a blind sweep protocol, in which a user blindly sweeps the ultrasound probe over the patient's abdomen. In our recent paper, “A mobile-optimized artificial intelligence system for gestational age and fetal malpresentation assessment”, published in Nature Communications Medicine, we demonstrated that, when utilizing blind sweeps, these models enable these non-experts to match standard of care performance in predicting these diagnostics.

|

| This blind-sweep ultrasound acquisition procedure can be performed by non-experts with only a few hours of ultrasound training. |

|

| Figure A compares our blind sweep-based gestational age regression model performance with that of the clinical standard of care method for fetal age estimation from fetal biometry measured by expert sonographers. Boxes indicate 25th, 50th, and 75th percentile absolute error in days, and whiskers indicate 5th and 95th percentile absolute error (n = 407 study participants). Figure B shows the Receiver Operating Characteristic (ROC) curves for our blind sweep-based fetal malpresentation classification model, as well as specific performance curves for cases in which blind sweeps were collected by expert sonographers or novices (n = 623 study participants). See our recent paper for further details and additional analysis. |

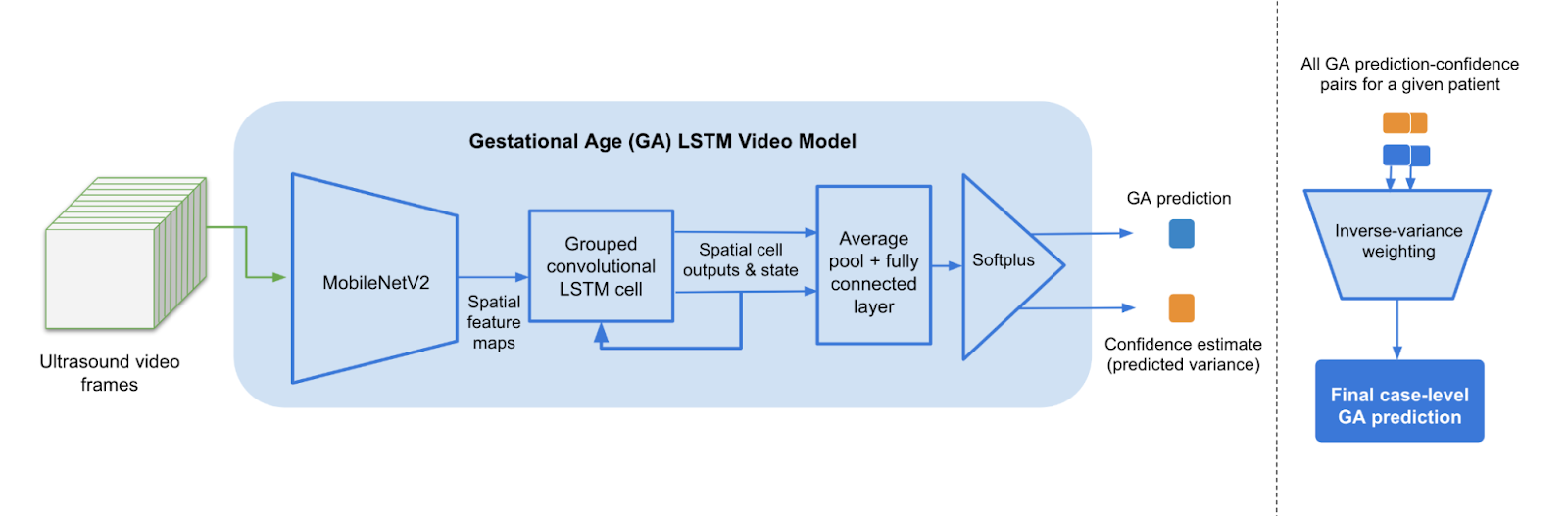

Understanding that our target deployment environment is one in which users might not have reliable access to power and internet, we designed these models to be mobile-optimized. Our grouped convolutional LSTM architecture utilizes MobileNetV2 for feature extraction on each video frame as it is received. The final feature layer produces a sequence of image embeddings which are processed by the convolutional LSTM cell state. Since the recurrent connections only operate on the less memory-intensive embeddings, this model can run efficiently in a mobile environment.

For each subsequence of video frames that make up a sweep, we generate a clip-level diagnostic result, and in the case of gestational age, also produce a model confidence estimate represented as the predicted variance in the detected age. Clip-level gestational age predictions are aggregated via inverse variance weighting to produce a final case-level prediction.

|

On-device ML has many advantages, including providing enhanced privacy and security by ensuring that sensitive input data never needs to leave the device. Another important advantage of on-device ML, particularly for our use case, is the ability to leverage ML offline in regions with low internet connectivity, including where smartphones serve as a stand-in for more expensive traditional devices. Our prioritization of on-device ML made TensorFlow Lite a natural choice for optimizing and evaluating the memory use and execution speed of our existing models, without significant changes to model structure or prediction performance.

After converting our models to TensorFlow Lite using the converter API, we explored various optimization strategies, including post-training quantization and alternative delegate configurations. Leveraging a TensorFlow Lite GPU delegate, optimized for sustained inference speed, provided the most significant boost to execution speed. There was a roughly 2x speed improvement with no loss in model accuracy, which equated to real-time inference of more than 30 frames/second with both the gestational age and fetal presentation models running in parallel on Pixel devices. We benchmarked model initialization time, inference time and memory usage for various delegate configurations using TensorFlow Lite performance measurement tools, finding the optimal configuration across multiple mobile device manufacturers.

These critical speed improvements allow us to leverage the model confidence estimate to provide sweep-quality feedback to the user immediately after the sweep was captured. When low-quality sweeps are detected, users can be provided with tips on how their sweep can be improved (for example, applying more pressure or ultrasound gel), then prompted to re-do the sweep.

Our vision is to enable safer pregnancy journeys using AI-driven ultrasound that could broaden access globally. We want to be thoughtful and responsible in how we develop our AI to maximize positive benefits and address challenges, guided by our AI Principles. TensorFlow Lite has helped enable our research team to explore, prototype, and de-risk impactful care-delivery strategies designed with the needs of lower-resource communities in mind.

This research is in its early stages and we look forward to opportunities to expand our work. To achieve our goals and scale this technology for wider reach globally, partnerships are critical. We are excited about our partnerships with Northwestern Medicine in the US and Jacaranda Health in Kenya to further develop and evaluate these models. With more automated and accurate evaluations of maternal and fetal health risks, we hope to lower barriers and help people get timely care.

This work was developed by an interdisciplinary team within Google Research: Ryan G. Gomes, Chace Lee, Angelica Willis, Marcin Sieniek, Christina Chen, James A. Taylor, Scott Mayer McKinney, George E. Dahl, Justin Gilmer, Charles Lau, Terry Spitz, T. Saensuksopa, Kris Liu, Tiya Tiyasirichokchai, Jonny Wong, Rory Pilgrim, Akib Uddin, Greg Corrado, Lily Peng, Katherine Chou, Daniel Tse, & Shravya Shetty.

Special thanks to: Yun Liu, Cameron Chen, Sami Lachgar, Lauren Winer, Annisah Um’rani, and Sachin Kotwani

*TensorFlow Lite has not been certified or validated for clinical, medical, or diagnostic purposes. TensorFlow Lite users are solely responsible for their use of the framework and independently validating any outputs generated by their project.

जून 20, 2023 — Posted by Angelica Willis and Akib Uddin, Health AI Team, Google ResearchHow researchers at Google are working to expand global access to maternal healthcare with the help of AITensorFlow Lite* is an open-source framework to run machine learning models on mobile and edge devices. It’s popular for use cases ranging from image classification, object detection, speech recognition, natural language t…