March 30, 2018

Posted by Laurence Moroney, Developer Advocate

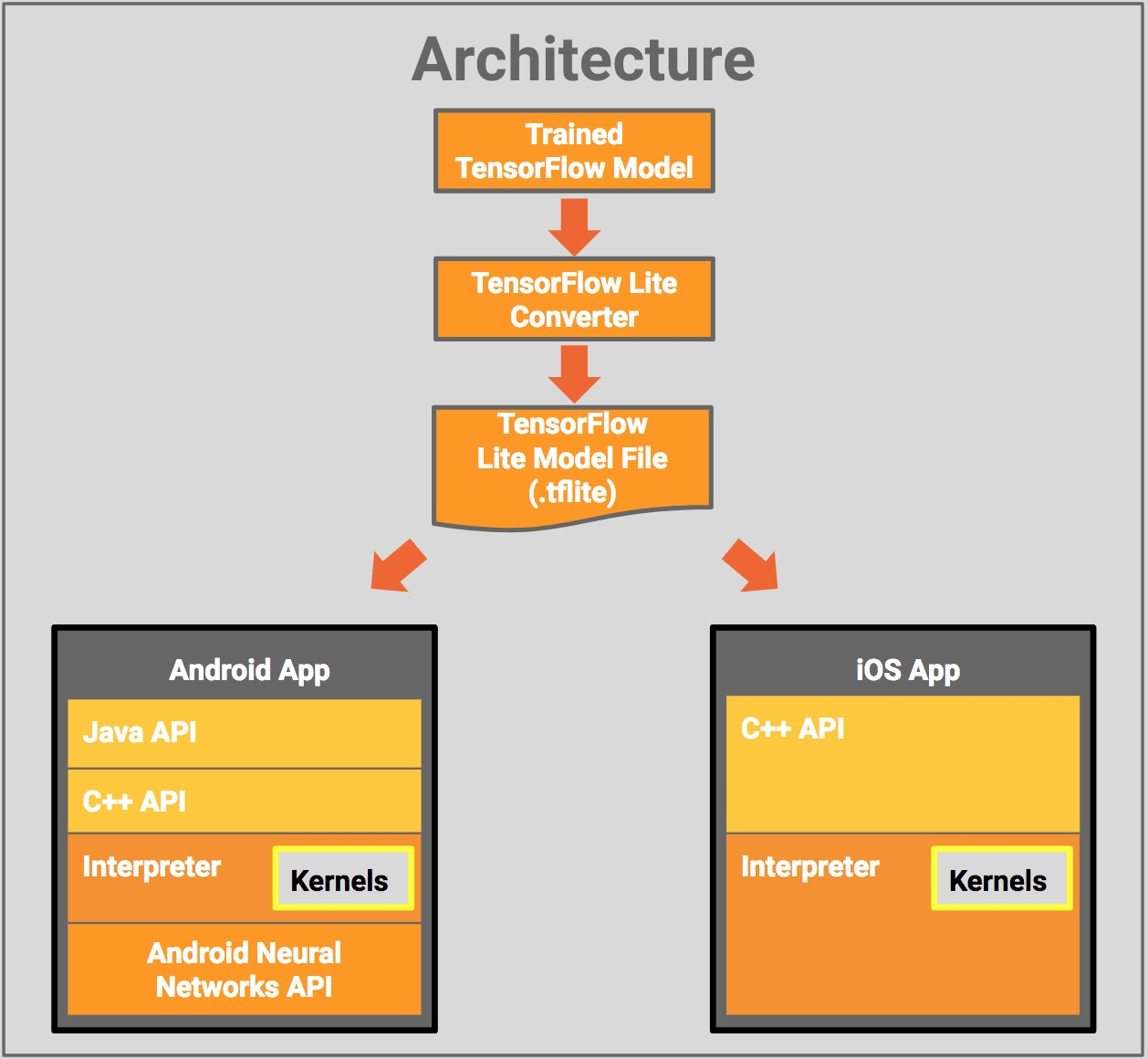

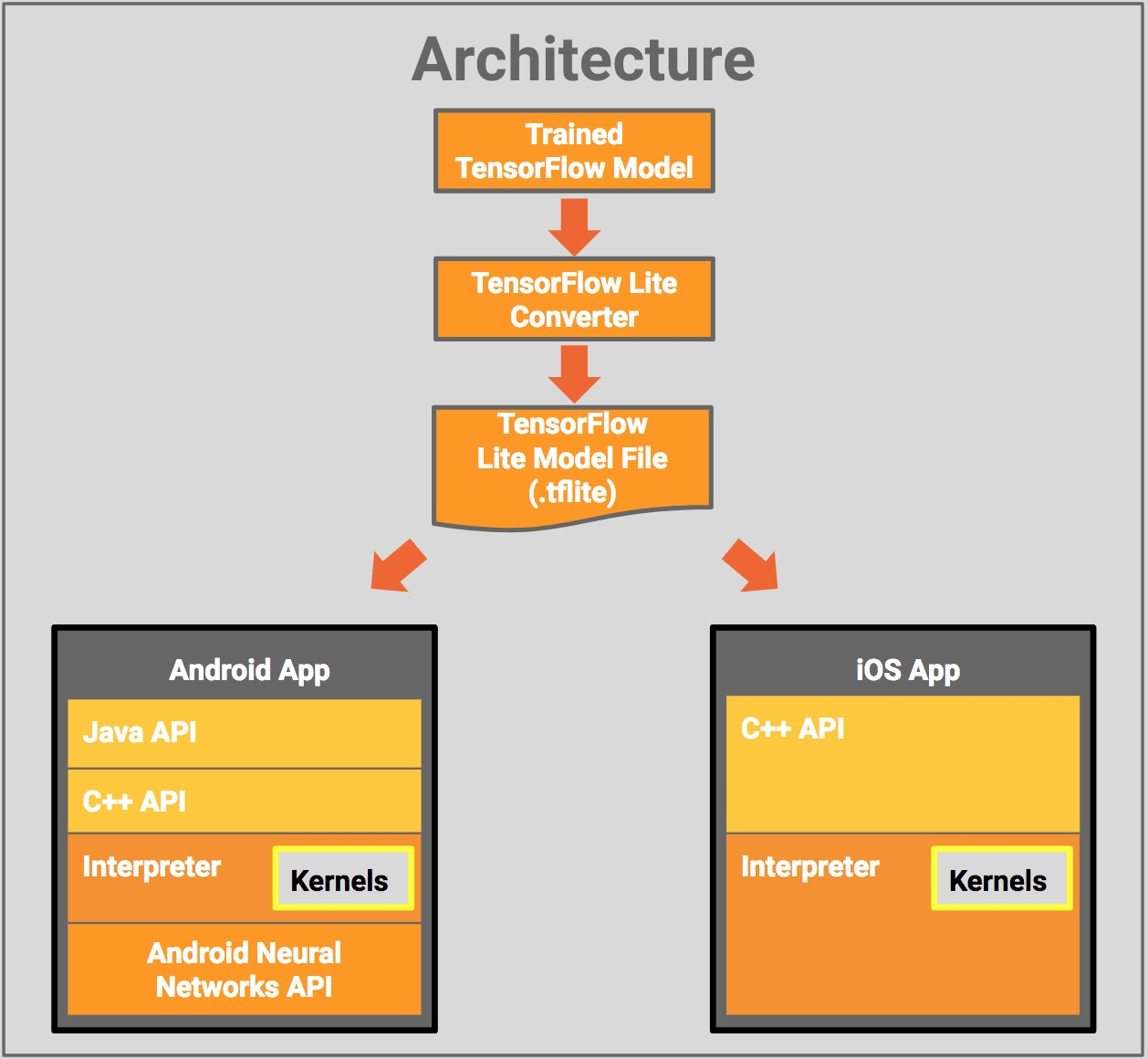

**What is TensorFlow Lite?**

TensorFlow Lite is TensorFlow’s lightweight solution for mobile and embedded devices. It lets you run machine-learned models on mobile devices with low latency, so you can use them for classification, regression, or anything else you might want, without necessarily incurring a round trip to a server.

It’s presentl…

*Video: Introducing TensorFlow Lite — Coding TensorFlow*

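To use TensorFlow Lite in an Android app, add the dependency to your app's `build.gradle`:

```groovy
// In the app module's build.gradle, inside dependencies { ... }.
// Android Gradle plugin 3.0+ uses `implementation` in place of the deprecated `compile`.
compile 'org.tensorflow:tensorflow-lite:+'
```

Then import the `Interpreter` class and declare a field to hold it:

```java
import org.tensorflow.lite.Interpreter;

// Field holding the interpreter that runs the model.
protected Interpreter tflite;
```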

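Create the interpreter by passing it the model file, memory-mapped from the app's assets:

```java
tflite = new Interpreter(loadModelFile(activity));
```

```java
// At the top of the file:
import android.app.Activity;
import android.content.res.AssetFileDescriptor;
import java.io.FileInputStream;
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;

/** Memory-map the model file in Assets. */
private MappedByteBuffer loadModelFile(Activity activity) throws IOException {
    // getModelPath() is defined elsewhere and returns the model's file name under assets/.
    AssetFileDescriptor fileDescriptor = activity.getAssets().openFd(getModelPath());
    FileInputStream inputStream = new FileInputStream(fileDescriptor.getFileDescriptor());
    FileChannel fileChannel = inputStream.getChannel();
    // The asset is stored inside the APK, so map only the model's byte range.
    long startOffset = fileDescriptor.getStartOffset();
    long declaredLength = fileDescriptor.getDeclaredLength();
    return fileChannel.map(FileChannel.MapMode.READ_ONLY, startOffset, declaredLength);
}
```

Then run inference, passing the input data and an array to receive the output:

```java
// imgData: the preprocessed input image; labelProbArray: receives one score per label.
tflite.run(imgData, labelProbArray);
```

*Video: TensorFlow Lite for Android — Coding TensorFlow*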
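`loadModelFile` maps only a slice of a larger file: the `AssetFileDescriptor` typically points into the APK itself, and `getStartOffset()` and `getDeclaredLength()` mark where the model sits inside it (which is also why, in typical setups, the model asset must be stored uncompressed for `openFd` to work). The plain-Java sketch below isolates that offset/length behavior using an ordinary file and no Android dependencies. `MmapSketch` and `mapRegion` are hypothetical names used only for illustration.

```java
import java.io.FileInputStream;
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;

final class MmapSketch {
    /**
     * Maps bytes [startOffset, startOffset + length) of a file read-only,
     * mirroring the FileChannel.map call in loadModelFile.
     */
    static MappedByteBuffer mapRegion(Path file, long startOffset, long length) throws IOException {
        try (FileInputStream in = new FileInputStream(file.toFile());
             FileChannel channel = in.getChannel()) {
            // A mapping stays valid after its channel is closed, so closing here is safe.
            return channel.map(FileChannel.MapMode.READ_ONLY, startOffset, length);
        }
    }
}
```

For example, mapping offset 6 and length 5 of a file containing `HEADERmodel` yields a buffer holding just `model`. Mapping rather than reading the file into a heap array lets the operating system page the data in on demand and avoids an extra copy of the model.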

To follow along with the demo app, clone the TensorFlow repository:

```shell
git clone https://www.github.com/tensorflow/tensorflow
```

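In the demo, each frame from the camera preview is classified like this:

```java
import android.graphics.Bitmap;

/** Classifies a frame from the preview stream. */
private void classifyFrame() {
    // Bail out if the classifier, host activity, or camera is not ready yet.
    if (classifier == null || getActivity() == null || cameraDevice == null) {
        showToast("Uninitialized Classifier or invalid context.");
        return;
    }
    // Grab the current preview frame, scaled to the model's input size.
    Bitmap bitmap = textureView.getBitmap(
            classifier.getImageSizeX(), classifier.getImageSizeY());
    String textToShow = classifier.classifyFrame(bitmap);
    // Release the bitmap's pixel memory now that classification is done.
    bitmap.recycle();
    showToast(textToShow);
}
```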
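The post does not show the body of `classifier.classifyFrame(bitmap)`. In outline, a classifier like this converts the bitmap's pixels into the input buffer passed to `tflite.run`, then reads the highest scores out of the output array to build the text shown in the toast. The plain-Java sketch below illustrates both steps under stated assumptions: a float model taking RGB input, pixels supplied as ARGB `int`s (the format `Bitmap.getPixels` produces), and normalization constants `IMAGE_MEAN = 128` and `IMAGE_STD = 128`, which are hypothetical here and must match how your model was trained. `ClassifierSketch`, `toInputBuffer`, and `topK` are illustrative names, not part of the TensorFlow Lite API.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

final class ClassifierSketch {
    // Hypothetical normalization constants; match them to your model's training.
    static final float IMAGE_MEAN = 128.0f;
    static final float IMAGE_STD = 128.0f;

    /** Packs ARGB pixels into a float32 RGB buffer, one normalized float per channel. */
    static ByteBuffer toInputBuffer(int[] argbPixels) {
        // 3 channels per pixel, 4 bytes per float; direct buffer in native byte order.
        ByteBuffer buf = ByteBuffer.allocateDirect(argbPixels.length * 3 * 4);
        buf.order(ByteOrder.nativeOrder());
        for (int p : argbPixels) {
            buf.putFloat((((p >> 16) & 0xFF) - IMAGE_MEAN) / IMAGE_STD); // red
            buf.putFloat((((p >> 8) & 0xFF) - IMAGE_MEAN) / IMAGE_STD);  // green
            buf.putFloat(((p & 0xFF) - IMAGE_MEAN) / IMAGE_STD);         // blue
        }
        buf.rewind(); // reset position so the buffer is read from the start
        return buf;
    }

    /** Returns the indices of the k highest scores, best first. */
    static List<Integer> topK(float[] probs, int k) {
        // Min-heap ordered by score: the weakest of the current top k sits at the head.
        PriorityQueue<Integer> heap =
                new PriorityQueue<>((a, b) -> Float.compare(probs[a], probs[b]));
        for (int i = 0; i < probs.length; i++) {
            heap.add(i);
            if (heap.size() > k) {
                heap.poll(); // drop the lowest score once we hold more than k
            }
        }
        List<Integer> result = new ArrayList<>(heap);
        result.sort((a, b) -> Float.compare(probs[b], probs[a])); // descending by score
        return result;
    }
}
```

For instance, `topK(new float[] {0.1f, 0.6f, 0.05f, 0.25f}, 2)` returns the indices `[1, 3]`, which a classifier would then map to label strings. A quantized model would typically take one unsigned byte per channel instead, without the mean/std normalization.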
