October 15, 2019 —

A guest post by Sandeep Mistry & Dominic Pajak of the Arduino team

Arduino is on a mission to make Machine Learning simple enough for anyone to use. We’ve been working with the TensorFlow Lite team over the past few months and are excited to show you what we’ve been up to together: bringing TensorFlow Lite Micro to the Arduino Nano 33 BLE Sense. In this article, we’ll show you how to install a…

August 13, 2019 —

A guest post by Kunwoo Park, Moogung Kim, Eunsung Han

August 06, 2019 —

Posted by Eileen Mao and Tanjin Prity, Engineering Practicum Interns at Google, Summer 2019

We are excited to release a TensorFlow Lite sample application for human pose estimation on Android using the PoseNet model. PoseNet is a vision model that estimates the pose of a person in an image or video by detecting the positions of key body parts. As an example, the model can estimate the position of…

August 05, 2019 —

Posted by the TensorFlow team

We are very excited to add post-training float16 quantization to the Model Optimization Toolkit, a suite of tools that includes hybrid quantization, full integer quantization, and pruning. Check out what else is on the roadmap.
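To illustrate what float16 quantization does to a model's weights, here is a minimal standard-library sketch. It rounds each float32 weight to the nearest IEEE 754 half-precision value, which halves storage, and then unpacks the values again to show the small rounding error. This is not the Model Optimization Toolkit's API. In TensorFlow Lite the conversion is configured through `tf.lite.TFLiteConverter`, so see the official guide for the exact settings. The helper names (`pack_fp32`, `quantize_fp16`, `dequantize_fp16`) and the sample weights are hypothetical.

```python
import struct

def pack_fp32(weights):
    """Hypothetical helper: pack weights as little-endian float32 (4 bytes each)."""
    return struct.pack(f"<{len(weights)}f", *weights)

def quantize_fp16(weights):
    """Hypothetical helper: round weights to IEEE 754 binary16 (2 bytes each).

    struct's 'e' format is half precision. Values beyond +/-65504 raise OverflowError.
    """
    return struct.pack(f"<{len(weights)}e", *weights)

def dequantize_fp16(blob):
    """Hypothetical helper: unpack float16 bytes back into Python floats."""
    count = len(blob) // 2
    return list(struct.unpack(f"<{count}e", blob))

# Illustrative weights only, not taken from any real model.
weights = [0.1, -1.5, 3.14159, 1e-3, 42.0]
fp32 = pack_fp32(weights)
fp16 = quantize_fp16(weights)
restored = dequantize_fp16(fp16)

print(len(fp32), len(fp16))  # 20 10
for w, r in zip(weights, restored):
    print(f"{w:>10.6f} -> {r:>10.6f}  abs err {abs(w - r):.2e}")
```

Values that float16 represents exactly, such as -1.5 and 42.0, survive unchanged. Other values pick up a relative error of at most 2^-11, which is why float16 quantization usually has only a small effect on accuracy.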

July 12, 2019 —

A guest post by the SmileAR Engineering Team at iQIYI

Introduction: SmileAR is a TensorFlow Lite-based mobile AR solution developed by iQIYI. It has been widely deployed across many of iQIYI's applications, including the iQIYI flagship video app (100+ million DAU), Qibabu (a popular app for children), Gingerbread (a short video app), and more.

March 14, 2019 —

Posted by Daniel Situnayake (@dansitu), Developer Advocate for TensorFlow Lite.

When you think about the hardware that powers machine learning, you might picture endless rows of power-hungry processors crunching terabytes of data in a distant server farm, or hefty desktop computers stuffed with banks of GPUs.

February 08, 2019 —

A guest article by Prerna Khanna, Tanmay Srivastava and Kanishk Jeet

January 16, 2019 —

Posted by the TensorFlow team

Running inference on compute-heavy machine learning models on mobile devices is resource-demanding because of the devices' limited processing power and battery life. While converting to a fixed-point model is one avenue to acceleration, our users have asked us for GPU support as an option to speed up the inference of the original floating point models without the extra complexity and…

July 13, 2018 —

Posted by Sara Robinson, Aakanksha Chowdhery, and Jonathan Huang

March 30, 2018 —

Posted by Laurence Moroney, Developer Advocate

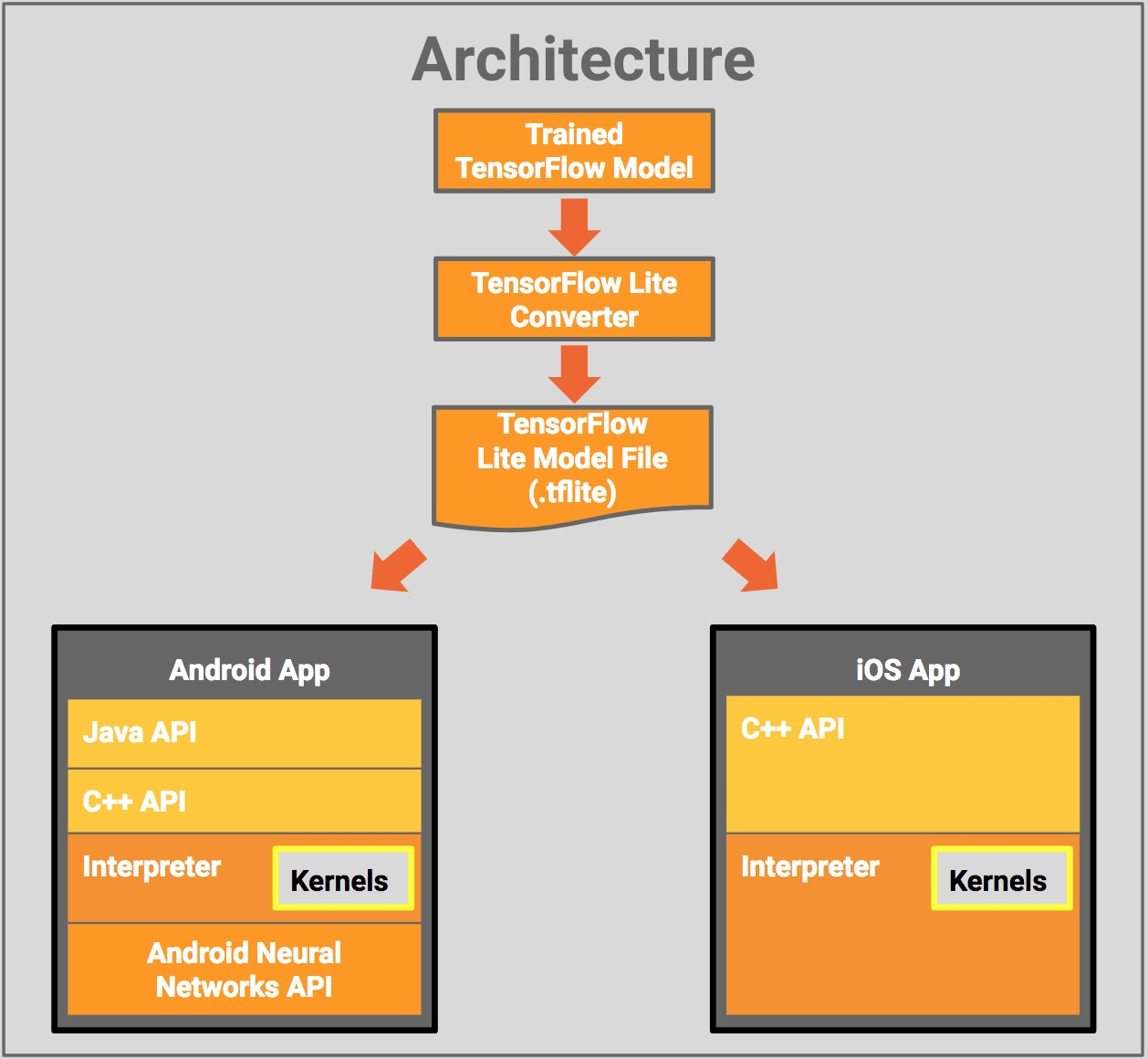

What is TensorFlow Lite?

TensorFlow Lite is TensorFlow's lightweight solution for mobile and embedded devices. It lets you run machine-learned models on mobile devices with low latency, so you can use them for classification, regression, or anything else you might want without necessarily incurring a round trip to a server.